Radzivon Alkhovik

Jun 19, 2025

OpenAI's GPT-4.1 Mini represents a fundamental shift in AI economics: a major model upgrade that delivers both stronger performance and dramatically lower costs than GPT-4o. This compact model maintains the intelligence and capabilities developers expect while operating at speeds and prices that make it viable for production applications with tight budget constraints.

Bottom Line: GPT-4.1 Mini delivers performance competitive with GPT-4o while cutting costs by 83% and reducing latency by about 50%. Released April 14, 2025, it supports a context window of roughly 1 million tokens and is priced at $0.40 per million input tokens, making advanced AI capabilities accessible to development teams of all sizes.

What is GPT-4.1 Mini

GPT-4.1 Mini sits in the middle of OpenAI's new model family, designed specifically for applications requiring fast response times without sacrificing intelligence. Unlike traditional "mini" models that compromise on capabilities, GPT-4.1 Mini often outperforms the previous flagship GPT-4o while consuming significantly fewer resources.

The model was built with real-world developer constraints in mind, addressing the common tension between AI capability and operational costs. Development teams can now implement sophisticated AI features without the budget concerns that previously limited deployment to only the most critical use cases.

Core Specifications and Capabilities

GPT-4.1 Mini's technical specifications reflect OpenAI's focus on practical developer needs rather than purely theoretical benchmarks.

Context Window: 1,047,576 tokens (8x larger than GPT-4o)

Output Limit: 32,768 tokens per response

Latency: 0.55 seconds average response time

Modalities: Text input/output, image input

Knowledge Cutoff: June 2024

These specifications enable processing entire codebases, comprehensive documentation sets, or multi-hour meeting transcripts in a single API call. The expanded output limit allows generating substantial code files or detailed analyses without requiring multiple requests to complete complex tasks.
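
A quick pre-flight check can catch requests that would overflow these limits before they reach the API. This is a minimal sketch, not an official utility: `estimate_tokens` and `fits_in_context` are hypothetical helpers, the four-characters-per-token ratio is a rough heuristic for English text (a real tokenizer such as `tiktoken` gives exact counts), and it assumes the prompt and the reserved output share the context window.

```python
# Limits quoted in the specification list above.
CONTEXT_WINDOW = 1_047_576   # tokens
MAX_OUTPUT_TOKENS = 32_768   # tokens per response


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token for English text (heuristic only)."""
    return max(1, len(text) // 4)


def fits_in_context(prompt: str, reserved_output: int = MAX_OUTPUT_TOKENS) -> bool:
    """True if the estimated prompt size plus the reserved output budget fits the window.

    Assumption: input and output tokens share the same context window.
    """
    if not 0 < reserved_output <= MAX_OUTPUT_TOKENS:
        raise ValueError("reserved_output must be between 1 and MAX_OUTPUT_TOKENS")
    return estimate_tokens(prompt) + reserved_output <= CONTEXT_WINDOW
```

For example, a 3-million-character repository dump (about 750,000 estimated tokens) passes the check with the full 32,768-token output reserve, while a 5-million-character dump does not and would need to be split.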

Pricing Structure That Changes Everything

The pricing model breaks the traditional performance-cost trade-off that has constrained AI adoption in budget-conscious organizations. These rates enable teams to process thousands of pages of documentation or hours of meeting transcripts for just a few dollars per session.

Input Tokens: $0.40 per million tokens

Output Tokens: $1.60 per million tokens

Cached Input: $0.10 per million tokens (75% discount)

Batch Processing: Additional 50% discount for non-urgent tasks

Because billing is per token, costs scale linearly with volume rather than climbing steeply as workloads grow. A typical development team analyzing daily meeting transcripts and code reviews would spend approximately $50-100 monthly versus $300-600 with previous models.
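
To sanity-check figures like these against your own workload, the per-token prices above can be turned into a small estimator. This is a sketch under stated assumptions: `request_cost` is a hypothetical helper, and applying the 50% batch discount to the whole request total (including any cached portion) is an assumption to verify against OpenAI's current pricing page.

```python
# Per-million-token prices quoted in the list above (USD).
INPUT_PER_M = 0.40
CACHED_INPUT_PER_M = 0.10
OUTPUT_PER_M = 1.60
BATCH_DISCOUNT = 0.50


def request_cost(input_tokens: int, output_tokens: int,
                 cached_tokens: int = 0, batch: bool = False) -> float:
    """Estimated USD cost of one request.

    cached_tokens is the part of input_tokens served from the prompt cache.
    Assumption: the batch discount applies to the whole request total.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    cost = (uncached * INPUT_PER_M
            + cached_tokens * CACHED_INPUT_PER_M
            + output_tokens * OUTPUT_PER_M) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost
```

For instance, `request_cost(500_000, 4_000)` comes to about $0.21: roughly a thousand pages of text (assuming around 500 tokens per page) plus a 4,000-token summary.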

GPT-4.1 Mini vs GPT-4o Comparison

Direct comparisons show improvements on the instruction-following, coding, and speed metrics that matter most for practical applications, challenging the assumption that better AI models must cost more.

Instruction Following Excellence

The model's ability to follow complex, multi-step instructions shows dramatic improvements that translate directly to more reliable automated workflows.

| Benchmark | GPT-4.1 Mini | GPT-4o | Improvement (percentage points) |
|---|---|---|---|
| Hard Instruction Following | 45.1% | 29.2% | +15.9 |
| MultiChallenge | 35.8% | 27.8% | +8.0 |
| IFEval | 84.1% | 81.0% | +3.1 |

These improvements mean fewer failed automations, more predictable outputs, and reduced need for human intervention in AI-powered workflows. Teams report 40-60% fewer instances where AI outputs require manual correction or regeneration.

Coding Performance That Matters

Programming tasks show particularly strong improvements, reflecting OpenAI's focus on developer use cases during training. These metrics directly correlate with reduced debugging time and higher code quality in production applications.

Aider Polyglot (diff format): 31.6% vs 18.2% (+13.4 percentage points)

Code review: reviewers preferred its suggestions in 55% of head-to-head comparisons

Extraneous edits: Reduced from 9% to 2%

Multi-language support: Consistent quality across Python, JavaScript, Go, Rust

The reduction in unnecessary code changes is particularly valuable for teams using AI in code review processes, as reviewers can focus on intentional changes rather than filtering AI-generated noise.

Speed and Efficiency Metrics

Performance improvements extend beyond accuracy to operational metrics that affect user experience and infrastructure costs.

Latency reduction: 50% faster response times

First token time: Under 5 seconds for 128K token contexts

Throughput: 62.39 tokens per second sustained

Context processing: Full 1M tokens analyzed in under 60 seconds

These speed improvements enable real-time applications that were previously impractical due to response delays, such as live coding assistance or interactive document analysis.

Long Context Mastery: Processing Million-Token Documents

The expansion to 1 million tokens fundamentally changes what's possible with AI-powered document analysis and code processing. This isn't just a quantitative improvement—it enables qualitatively different applications that require understanding relationships across large bodies of text.

Real-World Context Applications

Teams are using the expanded context window for applications that were impossible with smaller models, demonstrating the practical value beyond benchmark scores.

Codebase Analysis: Processing entire repositories to identify architectural patterns, technical debt, and cross-module dependencies. Development teams can now ask questions like "Which components depend on the authentication service?" and receive comprehensive answers that consider the entire codebase.

Meeting Intelligence: Analyzing multi-hour transcripts to extract decisions, action items, and strategic insights while maintaining context about earlier discussions. This application proves particularly valuable for teams conducting complex technical reviews or strategic planning sessions.

Document Comprehension: Processing complete regulatory documents, technical specifications, or research papers to answer specific questions while considering all relevant context. Legal and compliance teams report 70% time savings when analyzing complex regulatory requirements.
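
For the codebase-analysis case, the first practical step is packing a repository into a single prompt while staying under the context limit. A minimal sketch, assuming plain-text source files: `pack_repository`, the extension list, the 900,000-token budget (chosen to leave room for instructions and output), and the four-characters-per-token estimate are all illustrative assumptions.

```python
from pathlib import Path


def pack_repository(root: str,
                    extensions: tuple[str, ...] = (".py", ".js", ".go", ".rs"),
                    token_budget: int = 900_000) -> str:
    """Concatenate matching source files under root into one prompt-ready string."""
    parts: list[str] = []
    used = 0
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in extensions:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        # A path header lets the model say which file each finding comes from.
        chunk = f"### {path.relative_to(root)}\n{text}\n"
        estimated = len(chunk) // 4  # rough heuristic, not a real tokenizer
        if used + estimated > token_budget:
            continue  # skip files that would overflow the budget
        parts.append(chunk)
        used += estimated
    return "".join(parts)
```

The packed string can then be sent with a question such as "Which components depend on the authentication service?"; files skipped for budget reasons are a signal to narrow the extension list or split the analysis.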

Needle in Haystack: Perfect Retrieval

In OpenAI's needle-in-a-haystack evaluation, GPT-4.1 Mini retrieves a single planted fact with essentially 100% accuracy regardless of its position within the context window. This means users can trust the model to find a specific detail even in documents containing hundreds of thousands of tokens.

Accuracy is lower when a document contains several similar but distinct pieces of information: on the multi-needle OpenAI-MRCR task covered in the next section, the model scores 47.2% at 128K tokens. Single-fact retrieval is therefore highly reliable, while tasks that require telling near-duplicates apart deserve testing on your own data, especially where missing critical information could have serious consequences.
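
To test retrieval on your own material rather than relying only on published scores, you can build a needle-in-a-haystack check yourself. This is a sketch of the general technique, not OpenAI's evaluation; `build_haystack` and `retrieval_correct` are hypothetical helpers, and the repeated filler and substring scoring are simplifying assumptions.

```python
def build_haystack(needle: str, filler: str, n_sentences: int, depth: float) -> str:
    """Place `needle` among repeated filler sentences at a relative depth.

    depth=0.0 puts it at the start, 1.0 at the end.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [filler] * n_sentences
    sentences.insert(round(depth * n_sentences), needle)
    return " ".join(sentences)


def retrieval_correct(answer: str, secret: str) -> bool:
    """Score one answer: correct if the secret value appears in it (case-insensitive)."""
    return secret.lower() in answer.lower()
```

In practice you would generate haystacks at several depths and lengths, send each with a question such as "What is the access code?", and chart the share of correct answers by depth. Realistic filler should be varied text, since identical sentences make the needle unusually easy to spot.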

Multi-Hop Reasoning Capabilities

Beyond simple retrieval, the model excels at connecting information from different parts of long documents to answer complex questions requiring synthesis. These capabilities enable sophisticated analysis that considers relationships between concepts scattered throughout large documents.

OpenAI-MRCR Performance: 47.2% on 2-needle tasks at 128K tokens

Graphwalks BFS: 61.7% accuracy matching o1 performance

Cross-reference resolution: 40% better than GPT-4o at connecting related concepts

These capabilities enable applications like technical documentation Q&A systems that can answer questions requiring understanding of relationships between different sections, or code analysis tools that can trace dependencies across multiple files.

GPT-4.1 Mini: Vision and Multimodal Capabilities

GPT-4.1 Mini's image understanding capabilities represent a significant advancement over previous compact models, often matching or exceeding larger models on visual reasoning tasks.

Image Analysis Performance

The model demonstrates strong performance across diverse visual tasks that matter for practical applications.

MMMU (Academic): 72.7% vs 68.7% for GPT-4o

MathVista (Visual Math): 73.1% vs 61.4% for GPT-4o

CharXiv-Reasoning (Charts): 56.8% vs 52.7% for GPT-4o

These improvements translate to more reliable performance when analyzing business charts, technical diagrams, or user interface mockups. Teams using AI for document processing report fewer errors when handling mixed text-image content.

Practical Vision Applications

Development teams are implementing vision capabilities in ways that directly improve their workflows and productivity.

Code Review Enhancement: Analyzing screenshots of applications alongside source code to identify UI/UX issues or verify implementation against design specifications. This combined analysis helps catch discrepancies that purely text-based review might miss.

Documentation Processing: Extracting information from technical diagrams, flowcharts, and architectural drawings while maintaining context with surrounding text. Teams processing legacy documentation with embedded images report 60% time savings.

Interface Analysis: Evaluating user interface designs and providing feedback on accessibility, usability, and implementation feasibility. Design teams use this capability to get immediate technical feedback on prototypes and mockups.
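
Workflows like these send an image alongside text in a single request. A minimal sketch using the OpenAI Python SDK's Chat Completions endpoint with the image passed as a base64 data URL; `image_review_messages` and `review_screenshot` are hypothetical helper names, and the PNG format is an assumption (adjust the MIME type for other formats).

```python
import base64


def image_review_messages(png_bytes: bytes, instruction: str) -> list[dict]:
    """One user message combining a text instruction with an inline PNG image."""
    data_url = "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }]


def review_screenshot(png_bytes: bytes, instruction: str) -> str:
    """Send the image and instruction to gpt-4.1-mini (needs `pip install openai`)."""
    from openai import OpenAI  # lazy import: the message builder works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4.1-mini",
        messages=image_review_messages(png_bytes, instruction),
    )
    return response.choices[0].message.content
```

A call such as `review_screenshot(Path("checkout.png").read_bytes(), "List accessibility issues in this screen.")` (with a hypothetical file name) returns the model's feedback as text.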

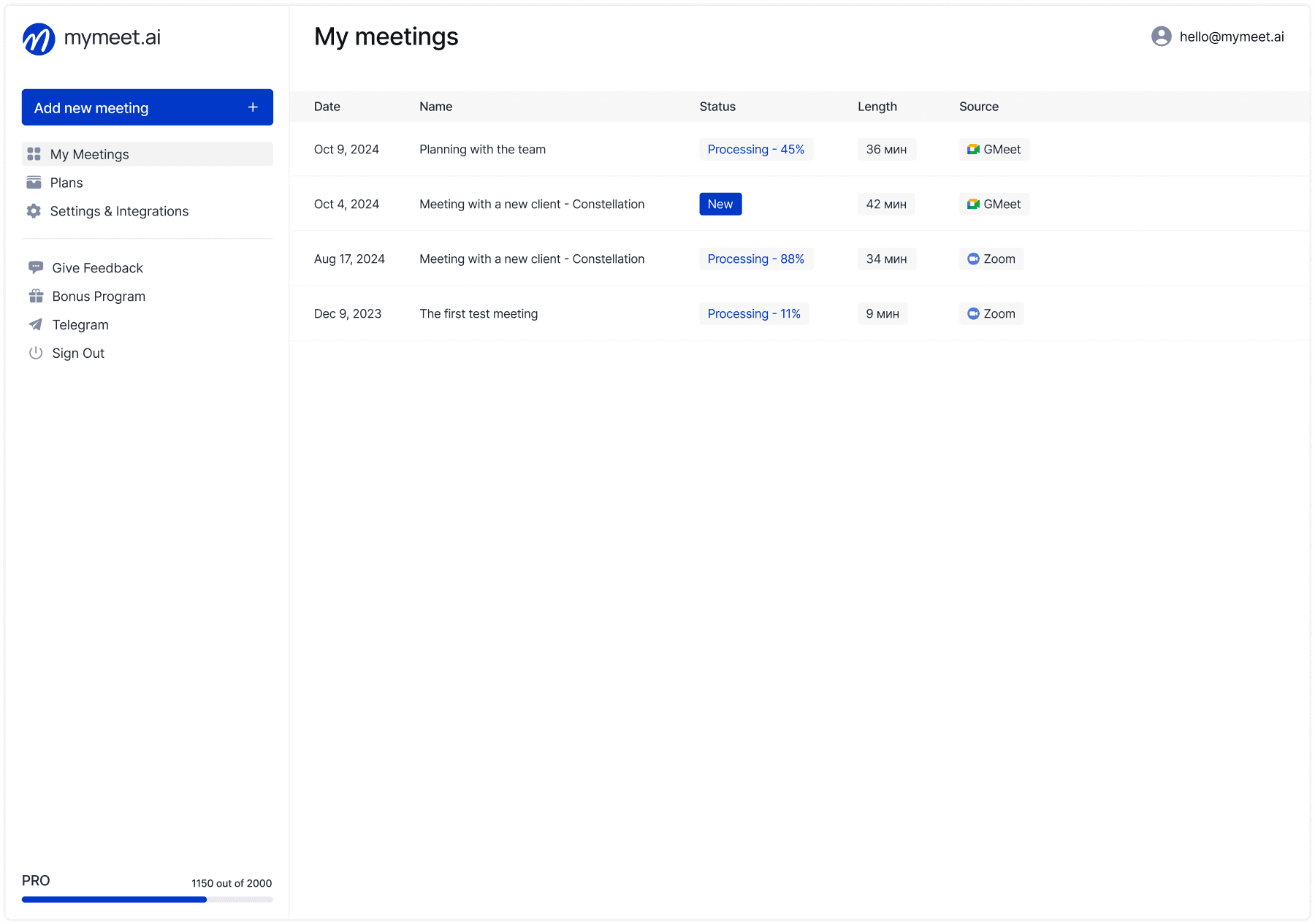

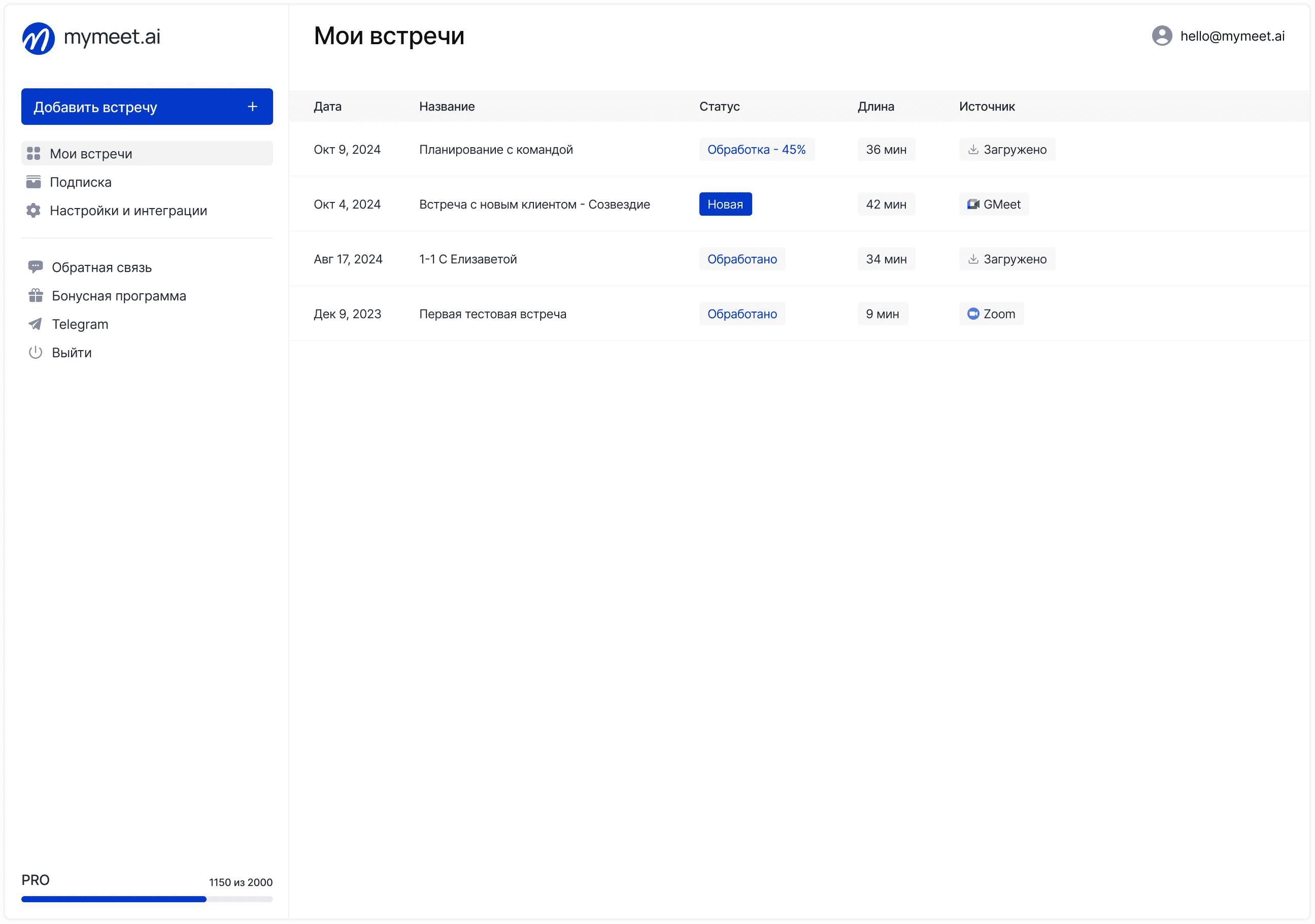

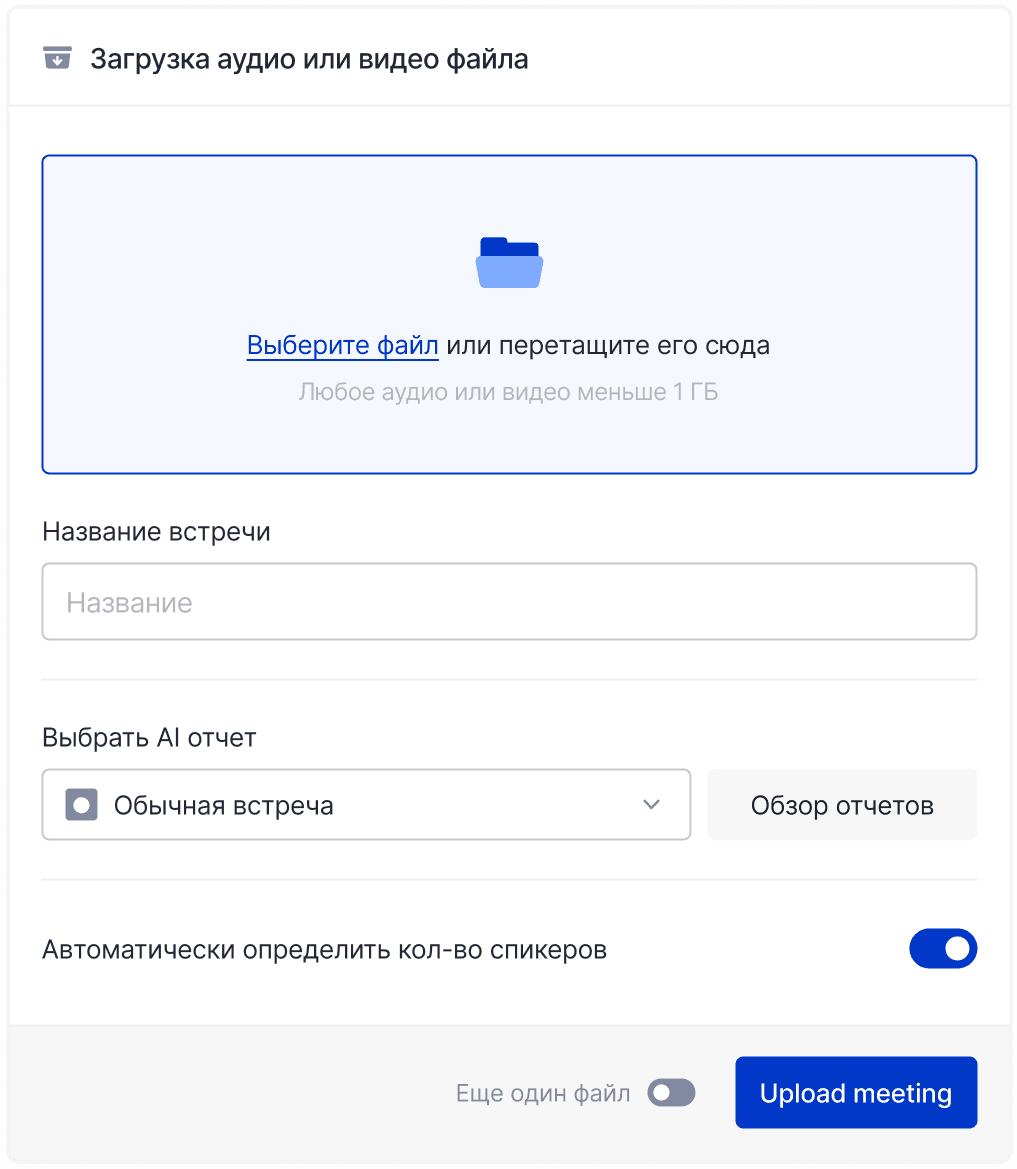

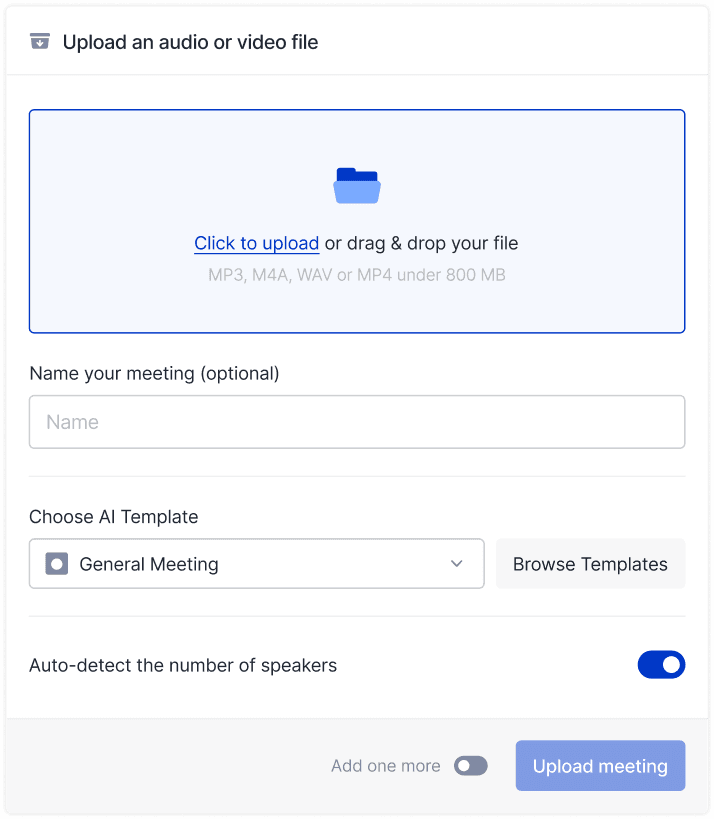

GPT-4.1 Mini for Meeting Intelligence with Mymeet.ai

The combination of GPT-4.1 Mini's capabilities with mymeet.ai's meeting automation creates a powerful solution for teams looking to maximize the value extracted from their discussions. This integration addresses one of the most common productivity challenges: ensuring that decisions and insights from meetings translate into actionable outcomes.

Automated Meeting Analysis Pipeline

Mymeet.ai's automated recording and transcription capabilities paired with GPT-4.1 Mini's analysis create a seamless workflow that transforms verbal discussions into structured, actionable information.

The system automatically joins video conferences across Zoom, Google Meet, and Microsoft Teams, capturing discussions without manual intervention. Once the transcription is complete, GPT-4.1 Mini's 1 million token context window enables analysis of even the longest strategic sessions or technical reviews in a single pass.

Teams report that this automated pipeline reduces post-meeting documentation time by 75% while increasing the quality and completeness of captured information. The AI analysis often identifies important decisions or commitments that human note-takers missed during live discussions.
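
mymeet.ai's internal pipeline is not documented here, so the following is a generic sketch of the analysis step rather than how mymeet.ai implements it. It assumes the transcript is already available as plain text and uses Chat Completions with a JSON schema response format (Structured Outputs) so the reply parses reliably; the schema fields `owner`, `task`, and `due` and the helper names are illustrative.

```python
import json

# Illustrative schema: strict mode requires every property to be listed as required.
ACTION_ITEM_SCHEMA = {
    "type": "object",
    "properties": {
        "action_items": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "owner": {"type": "string"},
                    "task": {"type": "string"},
                    "due": {"type": "string"},
                },
                "required": ["owner", "task", "due"],
                "additionalProperties": False,
            },
        }
    },
    "required": ["action_items"],
    "additionalProperties": False,
}


def action_item_request(transcript: str) -> dict:
    """Keyword arguments for client.chat.completions.create(...)."""
    return {
        "model": "gpt-4.1-mini",
        "messages": [
            {"role": "system",
             "content": "Extract every action item from the meeting transcript. "
                        "Use an empty string for due when no date was stated."},
            {"role": "user", "content": transcript},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "action_items", "strict": True,
                            "schema": ACTION_ITEM_SCHEMA},
        },
    }


def parse_action_items(content: str) -> list[dict]:
    """Turn the model's JSON reply into a list of action-item dicts."""
    return json.loads(content)["action_items"]
```

With the SDK installed, `OpenAI().chat.completions.create(**action_item_request(transcript))` sends the request, and `parse_action_items(response.choices[0].message.content)` yields the items.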

Advanced Query Capabilities

The AI Chat feature in mymeet.ai leverages GPT-4.1 Mini's instruction-following capabilities to provide sophisticated analysis of meeting content beyond simple transcription.

Strategic Insights: Queries like "What market opportunities were discussed and what are the next steps for each?" receive comprehensive responses that synthesize information from throughout the meeting, connecting related discussions that occurred at different times.

Decision Tracking: The system can identify when decisions were made, who committed to specific actions, and what dependencies exist between different initiatives. This capability proves invaluable for project managers tracking complex initiatives with multiple stakeholders.

Technical Analysis: For development teams, queries about architectural decisions, technical trade-offs, or implementation approaches receive detailed responses that consider the full context of technical discussions.

Cost-Effective Enterprise Deployment

GPT-4.1 Mini's pricing makes sophisticated meeting analysis accessible to organizations of all sizes, not just enterprises with unlimited AI budgets.

A typical 50-person organization conducting daily meetings can implement comprehensive meeting intelligence for approximately $100-200 monthly, including both mymeet.ai's service and AI processing costs. This represents a 70% cost reduction compared to implementing similar capabilities with previous-generation models.

The economic benefits extend beyond direct costs to productivity gains. Teams report that automated meeting analysis and action item extraction saves 2-3 hours weekly per team member, creating ROI that typically exceeds 400% within the first month of implementation.
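
The ROI claim is easy to check with your own numbers. The sketch below is simple arithmetic under explicit assumptions: `monthly_roi` is a hypothetical helper, time saved is valued at an hourly cost you supply, a month is treated as four weeks, and the tool spend is the only cost counted.

```python
def monthly_roi(team_size: int, hours_saved_per_week: float, hourly_cost: float,
                monthly_spend: float, weeks_per_month: float = 4.0) -> float:
    """Monthly ROI as a percentage: (value of time saved - spend) / spend * 100."""
    if monthly_spend <= 0:
        raise ValueError("monthly_spend must be positive")
    hours_saved = team_size * hours_saved_per_week * weeks_per_month
    value = hours_saved * hourly_cost  # hourly_cost is your own assumption
    return (value - monthly_spend) / monthly_spend * 100
```

With 50 people saving 2 hours each per week, valued at a hypothetical $50 per hour, against $200 of monthly spend, `monthly_roi(50, 2, 50, 200)` returns 9900.0. Under those hour and spend figures the 400% threshold is crossed once an hour of saved time is worth more than $2.50, so the claim depends far more on whether the hours are actually saved than on the hourly rate.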

Key Advantages of GPT-4.1 Mini + Mymeet.ai Integration

The combination of GPT-4.1 Mini's technical capabilities with mymeet.ai's specialized meeting automation creates unique advantages that neither solution can achieve independently. These benefits compound over time as teams develop more sophisticated workflows and extract deeper insights from their discussions.

Complete Meeting Intelligence: Automatic transcription, analysis, and action item extraction in 70+ languages with speaker identification and chapter segmentation

Cost-Effective Enterprise Scale: 70% cost reduction compared to previous-generation models while processing unlimited meeting duration

Real-Time Insights: AI Chat functionality allows immediate querying of meeting content with context-aware suggestions and structured responses

Seamless Integration: Works with Zoom, Google Meet, Teams, and calendar systems without requiring workflow changes or additional software installations

Advanced Security: Enterprise-grade encryption (TLS 1.2+, AES-256) with automated backups and GDPR compliance for sensitive business discussions

Actionable Outputs: Generate project stages, task lists, and formatted reports directly from meeting transcripts with customizable AI templates

API Integration and Development Best Practices

Implementing GPT-4.1 Mini effectively requires understanding both the technical integration steps and optimization strategies that maximize performance while controlling costs.

Basic Implementation Setup

Getting started with GPT-4.1 Mini follows OpenAI's standard API patterns: you use the same endpoints and client libraries as for other OpenAI models and select the model with the identifier gpt-4.1-mini.
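
A minimal sketch with the official `openai` Python package (v1 client), assuming `OPENAI_API_KEY` is set in the environment; `build_chat_request` and `ask` are hypothetical helper names, and the system prompt and 1,000-token output cap are placeholder choices.

```python
def build_chat_request(user_prompt: str,
                       system_prompt: str = "You are a concise technical assistant.",
                       max_completion_tokens: int = 1_000) -> dict:
    """Keyword arguments for client.chat.completions.create(...) targeting gpt-4.1-mini."""
    return {
        "model": "gpt-4.1-mini",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "max_completion_tokens": max_completion_tokens,  # caps the length of the reply
    }


def ask(user_prompt: str) -> str:
    """Send one prompt and return the reply text (needs `pip install openai`)."""
    from openai import OpenAI  # imported lazily so build_chat_request works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_chat_request(user_prompt))
    return response.choices[0].message.content
```

Keeping request construction separate from the network call makes it easy to log, test, or reuse the same payload for batching.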

A straightforward integration along these lines works for most applications, but production deployments benefit from additional optimization strategies that can reduce costs by 50-80% while improving response quality.

Cost Optimization Strategies

Smart implementation can dramatically reduce operational costs while maintaining or improving output quality through strategic use of caching and batching features.

Prompt Caching Implementation:

Smart caching strategies can reduce operational costs by 60-70% for applications that reuse system prompts or background context across multiple requests, since cached input is billed at $0.10 instead of $0.40 per million tokens. Teams processing similar types of meetings or documents benefit most from this approach.
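
OpenAI applies prompt caching automatically to prompts of 1,024 tokens or more, and a cache hit requires the beginning of the prompt to be identical across requests, so in code the main lever is message ordering. A minimal sketch: `cache_friendly_messages` and `cache_hit_ratio` are hypothetical helpers, and the system prompt text is a placeholder.

```python
# Placeholder instructions; keep them byte-identical across requests so the prefix matches.
STATIC_SYSTEM_PROMPT = "You are a meeting analyst. Answer only from the transcript provided."


def cache_friendly_messages(shared_context: str, question: str) -> list[dict]:
    """Put reusable content first and the per-request question last.

    Caching matches on the start of the prompt, so the system prompt and the shared
    transcript form a prefix that repeats across questions about the same meeting.
    """
    return [
        {"role": "system", "content": STATIC_SYSTEM_PROMPT},
        {"role": "user", "content": "Transcript:\n" + shared_context},
        {"role": "user", "content": "Question: " + question},
    ]


def cache_hit_ratio(prompt_tokens: int, cached_tokens: int) -> float:
    """Share of prompt tokens billed at the cached-input rate.

    With the openai SDK, read these from response.usage.prompt_tokens and
    response.usage.prompt_tokens_details.cached_tokens.
    """
    return cached_tokens / prompt_tokens if prompt_tokens else 0.0
```

Asking several questions about the same long transcript in sequence then reuses the cached prefix; changing anything in the first two messages, even a timestamp, breaks the match.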

Batch Processing for Non-Urgent Tasks:

Batch processing works exceptionally well for post-meeting analysis, weekly reports, or other tasks that don't require immediate results. The additional 50% discount makes it extremely cost-effective for high-volume applications.
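
With the OpenAI Batch API, requests are written to a JSONL file (one request per line, each with a `custom_id`), uploaded with `purpose="batch"`, and processed within a 24-hour completion window. A minimal sketch; `batch_jsonl` and `submit_batch` are hypothetical helper names, and the prompts and token cap are placeholders.

```python
import json


def batch_jsonl(prompts: dict[str, str], max_completion_tokens: int = 1_000) -> str:
    """Build Batch API input: one Chat Completions request per line, keyed by custom_id."""
    lines = []
    for custom_id, prompt in prompts.items():
        lines.append(json.dumps({
            "custom_id": custom_id,  # lets you match results back to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": "gpt-4.1-mini",
                "messages": [{"role": "user", "content": prompt}],
                "max_completion_tokens": max_completion_tokens,
            },
        }))
    return "\n".join(lines) + "\n"


def submit_batch(path: str):
    """Upload the JSONL file and start a batch job (needs openai and OPENAI_API_KEY)."""
    from openai import OpenAI

    client = OpenAI()
    with open(path, "rb") as f:
        batch_file = client.files.create(file=f, purpose="batch")
    return client.batches.create(
        input_file_id=batch_file.id,
        endpoint="/v1/chat/completions",
        completion_window="24h",
    )
```

After submitting, poll `client.batches.retrieve(batch.id)` until its status is `completed`, then download the results from its `output_file_id`.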

Rate Limits and Scaling Considerations

Understanding rate limits helps teams plan their implementation architecture and avoid bottlenecks as usage scales. The limits cap how many requests (RPM) and tokens (TPM) your application can send per minute, so they should guide your infrastructure planning.

| Tier | RPM (requests/min) | TPM (tokens/min) | Best For |
|---|---|---|---|
| Free | 3 | 40,000 | Testing and evaluation |
| Tier 1 | 500 | 200,000 | Small teams, prototypes |
| Tier 3 | 5,000 | 4,000,000 | Production applications |
| Tier 5 | 30,000 | 150,000,000 | Enterprise deployments |

Most development teams start with Tier 1 for initial implementation and move to Tier 3 when deploying to production. Enterprise teams processing large volumes of meeting data typically require Tier 5 to handle peak loads during busy periods.
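
When a request exceeds these limits, the API responds with HTTP 429, and the usual remedy is retrying with exponential backoff and jitter. A minimal, SDK-agnostic sketch; `with_backoff` is a hypothetical helper and the delay parameters are placeholder values. The official `openai` Python client already retries some errors on its own (configurable through its `max_retries` option), so a wrapper like this mainly helps when you need a custom policy.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(call: Callable[[], T],
                 is_rate_limited: Callable[[Exception], bool],
                 max_retries: int = 5,
                 base_delay: float = 1.0,
                 max_delay: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying rate-limited failures with exponential backoff and jitter.

    Delays grow as base_delay * 2**attempt (capped at max_delay), scaled by a random
    factor in [0.5, 1.0] so many clients do not retry in lockstep. Errors that are
    not rate limits, or the final failure, are re-raised.
    """
    for attempt in range(max_retries + 1):
        try:
            return call()
        except Exception as exc:
            if attempt == max_retries or not is_rate_limited(exc):
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay * random.uniform(0.5, 1.0))
    raise AssertionError("unreachable")  # the loop always returns or raises
```

With the SDK, a call might look like `with_backoff(lambda: client.chat.completions.create(**kwargs), lambda e: isinstance(e, openai.RateLimitError))`.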

Real-World Success Stories and Use Cases

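Companies across various industries have reported measurable benefits from the GPT-4.1 model family, providing concrete evidence of its practical value beyond benchmark scores. Several of the early-tester figures below were published at the GPT-4.1 launch and may describe the full GPT-4.1 model rather than Mini specifically.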

Software Development Teams

Windsurf achieved a 60% improvement in code acceptance rates, with developers approving AI-generated changes on first review significantly more often. Their engineering team reports that GPT-4.1 Mini "understands context better and makes more thoughtful changes" compared to previous models, leading to faster development cycles and fewer revision rounds.

Qodo conducted head-to-head testing on 200 real-world pull requests, finding that reviewers preferred GPT-4.1 Mini's code review suggestions in 55% of cases. The model demonstrated superior precision in knowing when not to make suggestions while providing comprehensive analysis when warranted, reducing reviewer fatigue and improving code quality.

Financial and Legal Applications

Blue J saw 53% better accuracy on complex tax scenarios compared to GPT-4o, translating directly to faster client research and reduced manual verification overhead. This improvement enables their legal AI assistant to handle more sophisticated queries while maintaining the reliability required for professional services.

Thomson Reuters integrated GPT-4.1 Mini with their CoCounsel legal assistant, achieving 17% better multi-document review accuracy. The model excels at maintaining context across sources and identifying nuanced relationships between legal documents, capabilities that are essential for complex legal analysis.

Data Analysis and Business Intelligence

Hex experienced nearly 2x improvement on challenging SQL evaluation sets, with GPT-4.1 Mini showing significantly better performance in selecting correct tables from complex database schemas. This upstream accuracy improvement reduces downstream errors and manual debugging requirements.

Carlyle leveraged the long-context capabilities for financial document analysis, seeing 50% better performance on data extraction from complex formats. GPT-4.1 Mini became the first model to successfully overcome needle-in-haystack retrieval and lost-in-middle errors that plagued previous implementations.

These success stories demonstrate consistent patterns: reduced manual work, higher first-pass success rates, better context understanding, and cost-effective scaling that enables broader deployment across organizations.

GPT-4.1 Mini vs Competition: Market Positioning

Direct comparisons with competing models reveal GPT-4.1 Mini's strengths in developer-focused applications while highlighting areas where alternative models might be more suitable.

Performance Comparison Matrix

The matrix below helps teams make informed decisions based on their specific requirements and constraints.

| Feature | GPT-4.1 Mini | Claude 3.5 Sonnet | Gemini Pro | Best For |
|---|---|---|---|---|
| Coding (Aider Polyglot) | 31.6% | ~25% (estimated) | ~20% (estimated) | Real-world development |
| Context window | 1M tokens | 200K tokens | 128K tokens | Large document analysis |
| Input price (per 1M tokens) | $0.40 | $3.00 | Variable | Production scaling |
| Latency | 0.55s | ~0.8s | ~0.6s | Interactive applications |
| Instruction following (MultiChallenge) | 35.8% | ~30% (estimated) | ~25% (estimated) | Automated workflows |

On these figures, GPT-4.1 Mini leads in the areas that matter most for business applications: coding assistance, cost-effective scaling, and reliable instruction following, although several competitor numbers above are estimates rather than published scores. Competitors have strengths in other areas (Claude excels at creative writing, and Gemini offers broader multimodal capabilities), but GPT-4.1 Mini provides the best overall package for development and business process automation.

When to Choose GPT-4.1 Mini

The model excels in specific use cases where its combination of capabilities, cost, and reliability creates the most value for development teams and business applications.

Ideal Applications: Automated code review, meeting analysis, document processing, customer support automation, and any high-volume application requiring consistent performance at reasonable costs.

Consider Alternatives For: Creative writing projects, complex mathematical research, extensive multimedia processing, or applications where absolute peak performance matters more than cost efficiency.

The model's developer-focused design, proven performance with real companies, cost advantages, and mature tooling ecosystem create a strong competitive position that addresses the practical needs of most business applications.

Implementation Roadmap and Future Considerations

Successfully deploying GPT-4.1 Mini requires strategic planning that considers both immediate implementation needs and long-term scaling requirements as AI capabilities continue to evolve.

Phase 1: Evaluation and Pilot Implementation

Start with controlled testing that validates the model's performance on your specific use cases while establishing baseline metrics for comparison with existing solutions.

Weeks 1-2: Technical Validation

Set up API access and basic integration

Test with representative data samples

Measure accuracy, speed, and cost metrics

Compare outputs with current solutions

Weeks 3-4: User Acceptance Testing

Deploy to limited user group

Gather feedback on output quality

Identify integration requirements

Establish success criteria

This phased approach helps identify potential issues early while building confidence in the technology among stakeholders who may be skeptical of AI capabilities.
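
For the measurement step in technical validation, a small harness can record accuracy, latency, and cost for each candidate on the same sample set. A minimal sketch: `evaluate` is hypothetical, `run_model` stands in for your own wrapper around an API call, and substring matching is a deliberately crude accuracy proxy you would replace with a task-appropriate check.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalResult:
    accuracy: float        # share of cases whose expected text appears in the answer
    mean_latency_s: float  # average wall-clock seconds per call
    total_cost_usd: float  # summed estimated cost


def evaluate(cases: list[tuple[str, str]],
             run_model: Callable[[str], tuple[str, int, int]],
             price: Callable[[int, int], float]) -> EvalResult:
    """run_model(prompt) -> (answer, input_tokens, output_tokens); price(in, out) -> USD."""
    if not cases:
        raise ValueError("cases must not be empty")
    correct, total_latency, total_cost = 0, 0.0, 0.0
    for prompt, expected in cases:
        start = time.perf_counter()
        answer, input_tokens, output_tokens = run_model(prompt)
        total_latency += time.perf_counter() - start
        correct += expected.lower() in answer.lower()
        total_cost += price(input_tokens, output_tokens)
    n = len(cases)
    return EvalResult(correct / n, total_latency / n, total_cost)
```

Running the same cases through a wrapper for your current solution gives a like-for-like comparison of outputs, speed, and cost.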

Phase 2: Production Deployment

Scale the implementation systematically while monitoring performance and costs to ensure the deployment meets business objectives.

Infrastructure Considerations:

Implement proper error handling and fallback mechanisms

Set up monitoring for API usage and costs

Establish data privacy and security protocols

Plan for rate limit management as usage grows
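
Fallback mechanisms and multi-model flexibility can share one pattern: try an ordered list of models and move on when one fails. A minimal sketch; `complete_with_fallback`, `call_model`, and the model name `backup-model` are hypothetical placeholders for your own provider wrappers.

```python
from typing import Callable


def complete_with_fallback(prompt: str, models: list[str],
                           call_model: Callable[[str, str], str]) -> tuple[str, str]:
    """Try each model in order; return (model, reply) from the first that succeeds.

    call_model(model, prompt) -> str wraps whichever provider SDK serves that model.
    If every model fails, the last error is re-raised.
    """
    if not models:
        raise ValueError("models must not be empty")
    last_error: Exception | None = None
    for model in models:
        try:
            return model, call_model(model, prompt)
        except Exception as exc:  # in production, catch only your SDKs' error types
            last_error = exc
    raise last_error
```

Logging which model answered each request also feeds the usage and cost monitoring listed above.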

Process Integration:

Train team members on new AI-enhanced workflows

Update documentation and procedures

Establish quality review processes

Create feedback loops for continuous improvement

Successful deployments typically see immediate productivity gains of 30-50% in targeted workflows, with improvements continuing as teams develop expertise with AI-enhanced processes.

Long-Term Strategic Considerations

GPT-4.1 Mini represents current state-of-the-art, but planning for future model generations ensures your implementation remains effective as capabilities continue advancing.

Vendor Relationship Management: Maintain flexibility to incorporate future model improvements while avoiding over-dependence on any single AI provider. Design your architecture to support multiple models as competitive options emerge.

Skill Development: Invest in team training for prompt engineering, AI workflow design, and human-AI collaboration patterns. These skills become increasingly valuable as AI capabilities expand across more business functions.

Competitive Advantage: Organizations that successfully integrate AI into their core workflows gain sustainable advantages that compound over time. Early adoption and effective implementation create competitive moats that are difficult for competitors to replicate quickly.

Conclusion: The Future of Cost-Effective AI

GPT-4.1 Mini represents a fundamental shift in AI economics, proving that advancing capabilities doesn't require proportionally increasing costs. This model democratizes access to sophisticated AI features for organizations that previously couldn't justify the expense of cutting-edge models.

The combination of superior performance, dramatically lower costs, and proven success across diverse real-world applications makes GPT-4.1 Mini the clear choice for teams looking to implement AI capabilities at scale. When integrated with specialized tools like mymeet.ai, these capabilities transform routine business processes into strategic advantages.

For development teams and business leaders evaluating AI adoption, GPT-4.1 Mini offers a compelling entry point that balances capability with practicality. The question isn't whether AI will transform business operations—it's whether your organization will gain competitive advantage through early and effective adoption.

The future belongs to organizations that successfully integrate AI into their core workflows, and GPT-4.1 Mini provides an accessible, cost-effective path to that future.

Frequently Asked Questions

What is GPT-4.1 Mini and how does it differ from GPT-4o?

GPT-4.1 Mini is OpenAI's cost-efficient model that delivers performance competitive with GPT-4o while reducing costs by 83% and latency by 50%. It processes 1 million tokens of context compared to GPT-4o's 128K tokens.

How much does GPT-4.1 Mini cost compared to other models?

GPT-4.1 Mini costs $0.40 per million input tokens and $1.60 per million output tokens, making it 83% cheaper than GPT-4o while often delivering superior performance on coding and instruction-following tasks.

What programming languages does GPT-4.1 Mini support best?

GPT-4.1 Mini excels across multiple programming languages including Python, JavaScript, Go, and Rust, with consistent quality and a 31.6% success rate on Aider's polyglot coding benchmark.

Can GPT-4.1 Mini process long meeting transcripts effectively?

Yes, GPT-4.1 Mini's 1 million token context window can process multi-hour meeting transcripts in a single request, making it ideal for comprehensive meeting analysis and action item extraction.

How fast is GPT-4.1 Mini compared to other AI models?

GPT-4.1 Mini delivers responses with 0.55 seconds average latency, returns the first token in under 5 seconds for 128K-token contexts, and cuts latency by about 50% compared with GPT-4o.

Does GPT-4.1 Mini support image analysis and vision tasks?

Yes, GPT-4.1 Mini includes strong vision capabilities, scoring 72.7% on MMMU and 73.1% on MathVista, often outperforming larger models on visual reasoning tasks.

What are the rate limits for GPT-4.1 Mini API access?

Rate limits range from 500 RPM for Tier 1 users to 30,000 RPM for Tier 5, with token limits scaling from 200K to 150M tokens per minute based on your usage tier.

How does GPT-4.1 Mini integrate with meeting automation tools?

GPT-4.1 Mini integrates seamlessly with platforms like mymeet.ai for automated meeting recording, transcription, and analysis, providing comprehensive insights from team discussions.

What makes GPT-4.1 Mini better for instruction following than previous models?

GPT-4.1 Mini scores 45.1% on hard instruction following tasks compared to 29.2% for GPT-4o, making it more reliable for automated workflows and complex multi-step processes.

When should I choose GPT-4.1 Mini over GPT-4.1 or other models?

Choose GPT-4.1 Mini for applications requiring fast response times, cost efficiency, and high-volume processing where it delivers comparable intelligence to larger models at significantly lower operational costs.
