Fedor Zhilkin

Mar 4, 2025

In May 2024, OpenAI unveiled GPT-4o, its most advanced AI model to date. The "o" stands for "omni," highlighting this model's groundbreaking ability to process text, images, audio, and video inputs simultaneously. Unlike previous models that required separate systems for different media types, GPT-4o handles everything in one unified neural network, dramatically improving speed and capabilities.

With an average voice response time of about 320 milliseconds, and as little as 232 milliseconds in the best case (comparable to human conversation speed), GPT-4o represents a significant leap forward in human-AI interaction. This guide explains the model's capabilities, how it compares to other AI models, and how you can start using it today.

What is GPT-4o? Understanding OpenAI's Latest AI Model

GPT-4o is OpenAI's flagship multimodal large language model, released in May 2024. The model builds on the foundation of GPT-4 but adds native support for processing multiple types of data inputs—text, images, audio, and video—all within a single neural network.

This all-in-one design represents a fundamental shift from previous AI systems, which typically required multiple specialized models working in sequence to handle different input types.

What sets GPT-4o apart is its end-to-end training across these modalities. Rather than cobbling together separate systems, OpenAI trained a single neural network to understand and generate content across different formats. This unified approach delivers several key advantages:

Near-instantaneous response times for voice conversations

Better understanding of context across different media types

More natural human-AI interactions

Improved performance on non-English languages

Significantly faster processing speeds overall

OpenAI describes GPT-4o as "a step towards much more natural human-computer interaction," highlighting its ability to maintain conversational flow at speeds approaching human response times.

GPT-4o vs. GPT-4: Complete Comparison of OpenAI's AI Models

To understand GPT-4o's significance, it helps to compare it with previous OpenAI models, particularly its immediate predecessors in the GPT-4 family.

| Feature | GPT-4o | GPT-4 Turbo | Original GPT-4 |
|---|---|---|---|
| Release Date | May 2024 | November 2023 | March 2023 |
| Multimodal Capabilities | Native text, audio, images, video | Text and images only | Text only (initially) |
| Response Speed | 2-3x faster | Standard | Standard |
| Audio Response Time | ~320ms | 5.4 seconds (with Voice Mode) | Not available natively |
| Cost (API, input/output) | $5/$15 per million tokens | $10/$30 per million tokens | $30/$60 per million tokens |
| Context Window | 128,000 tokens | 128,000 tokens | 8,192 tokens (initially) |
| Non-English Efficiency | Up to 4.4x better tokenization | Standard | Standard |
| Knowledge Cutoff | October 2023 | April 2023 | September 2021 |

The most notable advantages of GPT-4o include:

Speed Improvements: GPT-4o is approximately 2-3x faster than GPT-4 Turbo, processing around 110 tokens per second in benchmark tests. This makes interactions feel much more responsive.

Cost Efficiency: At 50% the price of GPT-4 Turbo, GPT-4o is significantly more affordable for developers and businesses using the API.

Multimodal Integration: While GPT-4 Turbo could process images, it required separate models for audio input/output. GPT-4o handles everything in one unified model, dramatically improving performance.

Voice Response Time: Previous voice interactions using GPT-4 Turbo took an average of 5.4 seconds to respond; GPT-4o responds in around 320 milliseconds, roughly a 17x improvement that enables natural conversation.

Language Efficiency: GPT-4o's improved tokenizer is particularly notable for non-English languages, using up to 4.4x fewer tokens for languages like Gujarati and 3.5x fewer for Telugu, making it more cost-effective and capable with international content.
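The headline ratios in this comparison are easy to check with a few lines of arithmetic. The sketch below uses only the prices and latencies quoted above; the variable and function names are illustrative.

```python
# Figures quoted in the comparison above (USD per million tokens, seconds).
GPT4O_PRICE = {"input": 5.00, "output": 15.00}
GPT4_TURBO_PRICE = {"input": 10.00, "output": 30.00}
GPT4O_VOICE_LATENCY_S = 0.320
GPT4_VOICE_MODE_LATENCY_S = 5.4


def price_ratio(new: dict, old: dict) -> dict:
    """Return the new/old price ratio for each token direction."""
    return {kind: new[kind] / old[kind] for kind in new}


def latency_speedup(old_s: float, new_s: float) -> float:
    """Return how many times shorter the new latency is."""
    return old_s / new_s


ratios = price_ratio(GPT4O_PRICE, GPT4_TURBO_PRICE)
speedup = latency_speedup(GPT4_VOICE_MODE_LATENCY_S, GPT4O_VOICE_LATENCY_S)

print(ratios)
print(f"voice speedup: {speedup:.1f}x")
```

Both input and output prices come out at half of GPT-4 Turbo's, and the voice latency ratio works out to just under 17x.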

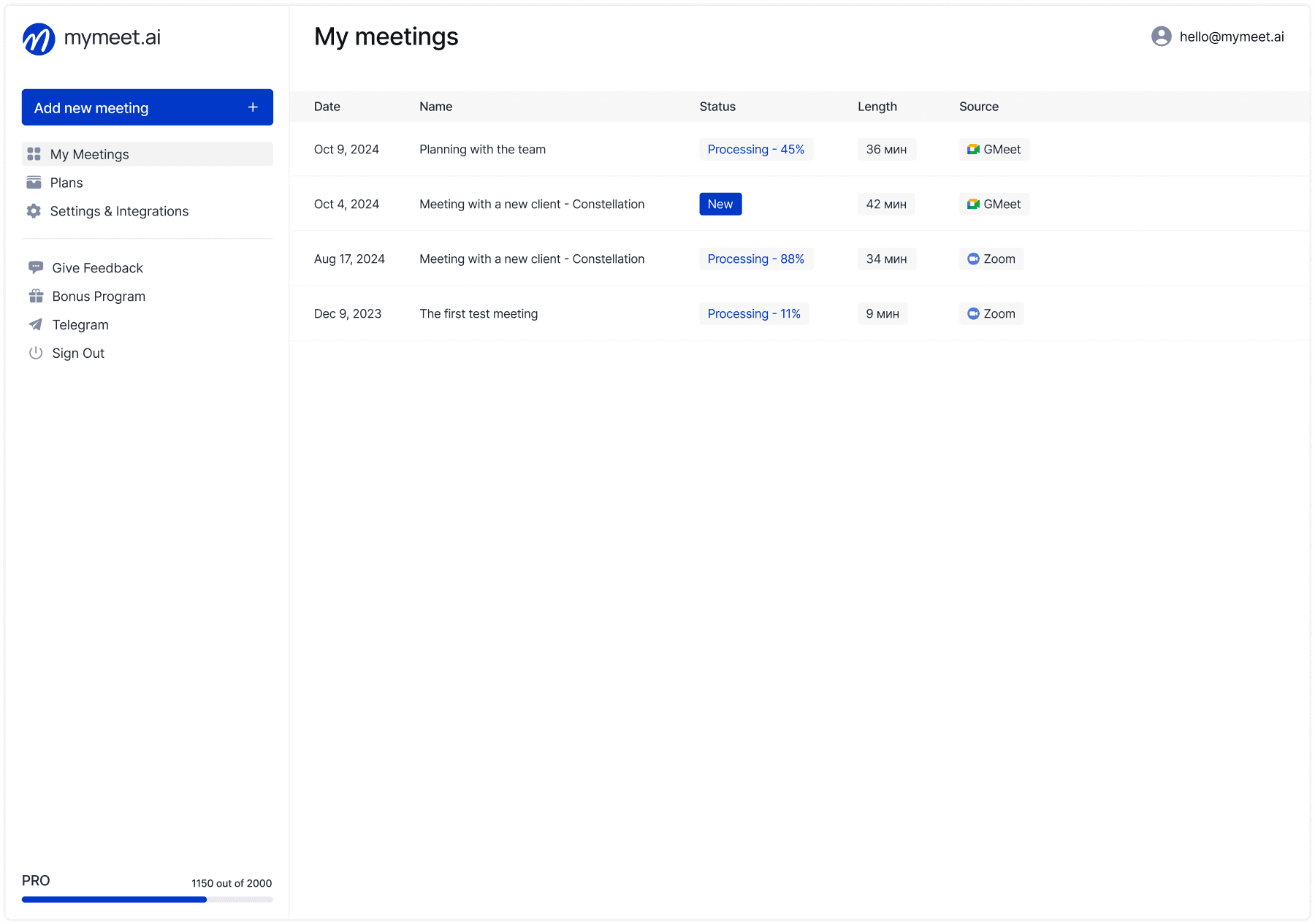

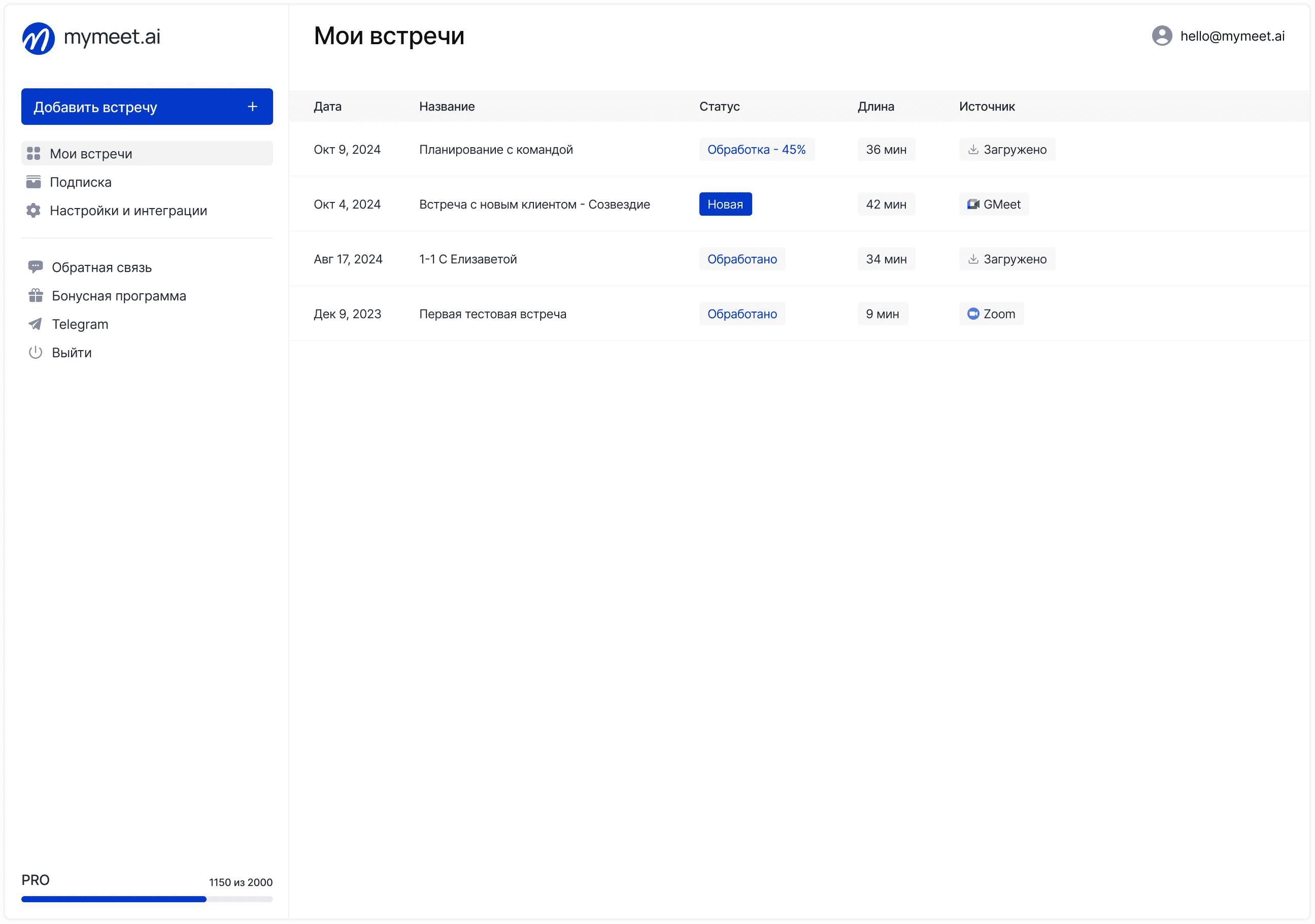

How to Combine GPT-4o with mymeet.ai for Ultimate Meeting Intelligence

While GPT-4o offers incredible capabilities across text, audio, and visual processing, it doesn't directly join your meetings. This is where mymeet.ai creates a powerful complementary workflow that enhances GPT-4o's abilities in professional settings.

What is mymeet.ai?

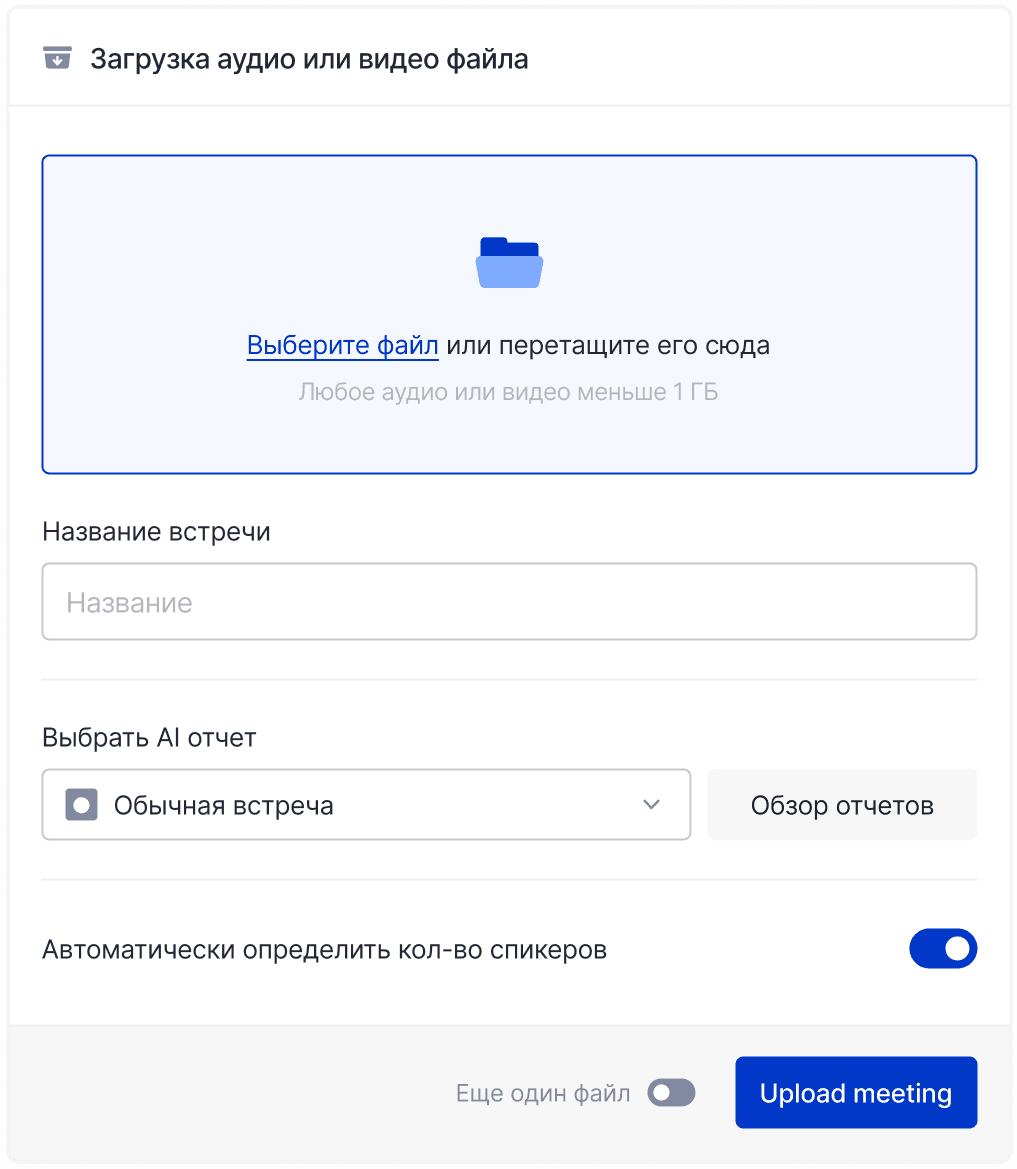

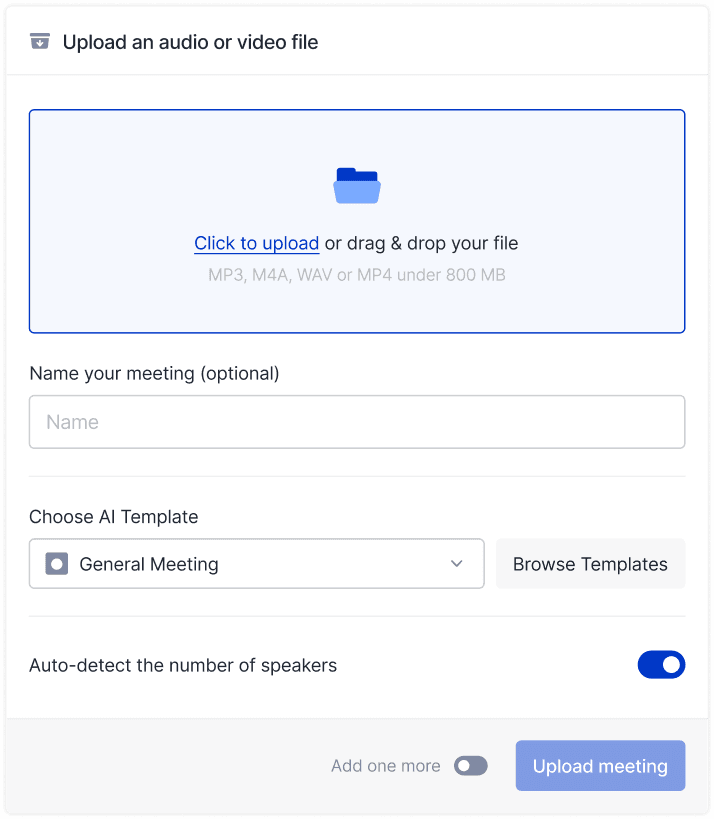

mymeet.ai is a specialized AI meeting assistant that automatically records, transcribes, and analyzes virtual meetings. It integrates directly with platforms like Zoom, Google Meet, and Yandex Telemost (Телемост), capturing complete meeting content without requiring manual recording or note-taking.

Creating a Powerful GPT-4o + mymeet.ai Workflow

This integration creates a seamless process that maximizes both tools' strengths:

1. Automatic Meeting Capture: mymeet.ai joins scheduled meetings through calendar integration and records everything

2. Intelligent Transcription: Conversations are transcribed with speaker identification across 73 languages

3. Initial Analysis: mymeet.ai automatically extracts action items and creates basic summaries

4. Enhanced GPT-4o Processing: Export meeting content to GPT-4o for deeper analysis using its advanced capabilities:

Comprehensive meeting summaries with GPT-4o's reasoning abilities

Code generation from technical discussions using o3-mini-high

Complex problem-solving based on meeting content using o1

Visual analysis of shared screens and presentations
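As a rough illustration of step 4, here is a minimal sketch of how an exported transcript could be wrapped into a request for GPT-4o. It assumes the transcript is exported as plain text with speaker labels (the actual mymeet.ai export format may differ), and it only builds the request body in the `model` plus `messages` shape used by OpenAI's Chat Completions API. The function name, prompt wording, and sample transcript are hypothetical.

```python
import json


def build_summary_request(transcript: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions-style request body for a meeting summary."""
    system_prompt = (
        "You are a meeting analyst. Summarize the transcript, "
        "then list action items with an owner for each."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": transcript.strip()},
        ],
    }


# Hypothetical excerpt of an exported transcript with speaker labels.
transcript = """
Anna: Let's ship the billing fix by Friday.
Boris: I'll write the migration and ask QA to retest.
"""

request = build_summary_request(transcript)
print(json.dumps(request, indent=2))
```

Sending the request is left out on purpose: the same dictionary can be passed to whichever client you use, and the `model` argument can be swapped if you route a technical discussion to a different model.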

Business Applications That Leverage Both Tools

Organizations are finding innovative ways to combine these technologies:

Software Development Teams: Development teams use mymeet.ai to capture technical discussions, then leverage GPT-4o's exceptional coding capabilities to transform requirements into implementation plans. For complex programming challenges, they can switch to o3-mini-high for even more specialized code generation.

Sales Organizations: Sales teams record client meetings with mymeet.ai, then use GPT-4o to analyze customer objections, develop personalized follow-up strategies, and create proposal documents based on specific client needs mentioned during calls.

Data Analysis and Research: When research teams discuss data findings in meetings, mymeet.ai captures the conversation while GPT-4o can later analyze charts and visualizations shared during the meeting, combining both the visual data and the contextual discussion.

Implementation Best Practices

To get the most from this powerful combination:

Configure mymeet.ai to automatically join important meetings through calendar integration

Use mymeet.ai's noise reduction and speaker identification features to ensure clean transcripts

Export complex technical discussions to o3-mini-high for specialized processing

Use GPT-4o's multimodal capabilities to analyze both the conversation and any visual materials shared

For mathematical or scientific discussions, consider using o1 for the deepest analytical capabilities

Early adopters report that this integration reduces meeting follow-up time by up to 70% while significantly improving the quality of documentation and action tracking—allowing teams to focus on implementation rather than recordkeeping.

OpenAI's Complete Model Lineup: GPT-4o, o1, o3-mini, and More

OpenAI now offers a diverse range of AI models, each with specialized capabilities designed for different use cases. Understanding the differences helps you choose the right tool for specific tasks.

GPT-4o: The Multimodal Flagship

GPT-4o excels at general-purpose tasks with its ability to process and generate content across multiple media types. It's the default choice for most users, offering balanced performance, speed, and versatility. This model is ideal when you need to work with images, voice, or text in combination.

GPT-4o with Scheduled Tasks (Beta)

This variant of GPT-4o adds the ability to schedule responses for a later time. Currently in beta, this feature allows you to ask ChatGPT to remind you of information, follow up on tasks, or deliver content at a specified future time. It's particularly useful for setting reminders, planning future work, or scheduling content creation.

o1: The Deep Reasoning Specialist

The o1 model focuses specifically on advanced reasoning capabilities. It excels at complex problem-solving tasks that require step-by-step thinking and logical analysis. While slower than other models, o1 delivers superior results for:

Multi-step mathematical problems

Complex logical puzzles

Scientific reasoning

Detailed analysis tasks

When accuracy on difficult reasoning problems matters more than speed, o1 is the preferred choice.

o3-mini: The Speed Demon

The o3-mini model prioritizes rapid responses while maintaining good reasoning capabilities. It processes information quickly, making it ideal for real-time applications where speed is critical. This model works well for:

Time-sensitive applications

Quick information retrieval

Applications requiring low latency

Simple to moderate reasoning tasks

o3-mini-high: The Coding Expert

Specialized for programming tasks, o3-mini-high delivers exceptional performance for coding and logical problems. It excels at:

Code generation and debugging

Algorithm development

Technical documentation

Data structure manipulation

In practice, o3-mini-high is o3-mini running at a higher reasoning-effort setting: it trades some of o3-mini's speed for stronger results on code-related tasks, which makes it a popular choice for developers.

Key Features of ChatGPT 4o

GPT-4o introduces several groundbreaking features that transform how we interact with AI. These capabilities represent significant advances over previous models and enable entirely new use cases.

How ChatGPT 4o Enables Real-time Audio Conversations

The most immediately noticeable improvement in GPT-4o is its ability to conduct near-human-speed voice conversations. Previous versions of ChatGPT required a complex pipeline of models:

A speech-to-text model to transcribe user audio

GPT-3.5 or GPT-4 to process the text and generate a response

A text-to-speech model to convert the response back to audio

This process resulted in an average response time of 2.8 seconds using GPT-3.5 and 5.4 seconds using GPT-4—far too slow for natural conversation.

GPT-4o changes everything by processing audio directly within a single neural network. The result is an average response time of just 320 milliseconds, which approaches the human conversational response time of approximately 210 milliseconds.

This dramatic speed improvement transforms voice interaction from a novelty feature into a practical, natural way to use AI. The unified model also preserves important audio information that was previously lost in transcription, such as:

Tone of voice and emotional cues

Background context

Multiple speaker identification

Non-verbal sounds and cues

The result is a significantly more natural and context-aware conversation experience that feels remarkably human-like.

GPT-4o Multimodal Understanding: Text, Image, and Audio Capabilities

GPT-4o's unified approach to processing different media types leads to much better understanding of mixed-media content. The model can seamlessly analyze and respond to prompts that combine:

Text: GPT-4o matches GPT-4 Turbo performance on English text while significantly improving on non-English languages.

Images: The model can analyze photos, diagrams, charts, documents, and handwritten content with improved accuracy.

Audio: Beyond just transcribing speech, GPT-4o understands tonal qualities, accents, multiple speakers, and even background sounds.

Video: While still developing, GPT-4o can process video inputs to understand both visual and audio components simultaneously.

This integrated understanding enables entirely new workflows. For example, users can point their phone camera at an object while speaking a question about it, and GPT-4o can analyze both inputs together to provide more relevant answers.

In educational contexts, GPT-4o can analyze a student showing their work on a math problem while explaining their thought process, providing much more targeted assistance than was previously possible.
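For developers, a combined image-and-text prompt like the camera example can be sketched as a single user message. The snippet below assumes the Chat Completions message format, where `content` is a list of parts and images are passed as `image_url` parts (here as a base64 data URL). The helper name and the placeholder image bytes are hypothetical, and voice input is not covered.

```python
import base64


def build_image_question(
    image_bytes: bytes, question: str, mime_type: str = "image/png"
) -> list:
    """Return a messages list pairing a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


# Placeholder bytes standing in for a real photo read from disk or a camera.
fake_image = b"\x89PNG\r\n\x1a\n"
messages = build_image_question(fake_image, "What is this object?")
print(messages[0]["content"][0]["text"])
```

Because both parts travel in one message, the model sees the question and the picture together rather than as two separate requests.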

How GPT-4o Improves Token Efficiency for Global Languages

One of GPT-4o's less flashy but highly significant improvements is its enhanced tokenization for non-English languages. Tokenization is the process of breaking text into smaller units (tokens) that AI models can process. More efficient tokenization means:

Lower costs (since API usage is billed per token)

Longer effective context windows

Better understanding of language nuances

GPT-4o's improvements are particularly dramatic for languages that use non-Latin scripts:

| Language | Improvement | Example (Before → After) |
|---|---|---|
| Gujarati | 4.4x fewer tokens | 145 → 33 tokens |
| Telugu | 3.5x fewer tokens | 159 → 45 tokens |
| Tamil | 3.3x fewer tokens | 116 → 35 tokens |
| Marathi | 2.9x fewer tokens | 96 → 33 tokens |
| Hindi | 2.9x fewer tokens | 90 → 31 tokens |
| Arabic | 2.0x fewer tokens | 53 → 26 tokens |
| Korean | 1.7x fewer tokens | 45 → 27 tokens |
| Chinese | 1.4x fewer tokens | 34 → 24 tokens |
| Japanese | 1.4x fewer tokens | 37 → 26 tokens |

Even European languages see modest improvements, with French, Spanish, and Italian all using approximately 10% fewer tokens.

This enhanced efficiency dramatically improves GPT-4o's utility for global users, making it more cost-effective and capable when working with non-English content.
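To see what these numbers mean for a bill, the short sketch below recomputes the improvement factors from the before and after token counts in the table and converts them into the share of tokens saved. The function names are illustrative, and the token counts apply only to the sample texts behind the table.

```python
# (tokens before, tokens after) for the same sample text, from the table above.
TOKEN_COUNTS = {
    "Gujarati": (145, 33),
    "Telugu": (159, 45),
    "Tamil": (116, 35),
    "Marathi": (96, 33),
    "Hindi": (90, 31),
    "Arabic": (53, 26),
    "Korean": (45, 27),
    "Chinese": (34, 24),
    "Japanese": (37, 26),
}


def reduction_factor(before: int, after: int) -> float:
    """How many times fewer tokens the new tokenizer needs."""
    return before / after


def percent_saved(before: int, after: int) -> float:
    """Share of tokens (and per-token cost) saved, in percent."""
    return 100 * (before - after) / before


for language, (before, after) in TOKEN_COUNTS.items():
    factor = reduction_factor(before, after)
    saved = percent_saved(before, after)
    print(f"{language:<9} {factor:.1f}x fewer tokens, {saved:.0f}% saved")
```

For the Gujarati sample, going from 145 to 33 tokens means about 77% fewer billed tokens for the same text, and the same ratio applies to how much text fits in the context window.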

ChatGPT 4o Voice and Audio Features: Everything You Need to Know

GPT-4o's audio capabilities extend far beyond basic speech recognition. The model demonstrates several advanced audio-processing abilities:

Emotional Understanding: GPT-4o can detect emotional cues in speech, such as excitement, frustration, or confusion, and respond appropriately.

Voice Selection: At launch, GPT-4o offers four preset voices to choose from, though OpenAI limited these options initially to mitigate potential misuse.

Natural Interruption: Like human conversation, users can interrupt GPT-4o mid-response, and the model will adjust accordingly (this feature is still rolling out gradually).

Background Noise Processing: Unlike previous models that could only work with clean audio, GPT-4o can understand speech even with background noise or multiple speakers.

Voice Adaptation: The model can adjust its speaking style to match the context—speaking more slowly when explaining complex concepts or more energetically for encouraging messages.

These capabilities enable much more natural voice interactions that feel less like talking to a computer and more like conversing with a human assistant.

What is GPT-4o mini? The Smaller, Faster Alternative to GPT-4o

In July 2024, two months after GPT-4o, OpenAI released GPT-4o mini, a smaller and more efficient model designed to balance performance with speed and cost. GPT-4o mini replaces GPT-3.5 Turbo as OpenAI's standard model for free users while delivering significantly improved capabilities.

GPT-4o mini is notable for several key advantages:

Performance Improvements: GPT-4o mini outperforms GPT-3.5 Turbo across all major benchmarks while maintaining similar speed.

Multimodal Capabilities: Unlike GPT-3.5 Turbo, GPT-4o mini can process both text and images natively, with audio capabilities in development.

Cost Efficiency: For API users, GPT-4o mini is more than 60% cheaper than GPT-3.5 Turbo, at just $0.15 per million input tokens and $0.60 per million output tokens.

Accessibility: GPT-4o mini brings many of the advanced capabilities previously limited to paying users to the free tier of ChatGPT.

For most everyday tasks, GPT-4o mini provides excellent results while being significantly faster and more cost-effective than the full GPT-4o model. It's particularly well-suited for:

Common question-answering tasks

Basic content generation

Simple image analysis

Routine coding assistance

Everyday conversational AI applications

The introduction of GPT-4o mini represents a significant democratization of AI capabilities, bringing advanced features to a broader audience at lower cost.

When to Use Each OpenAI Model: Choosing Between GPT-4o, o1, and o3-mini

With multiple specialized models now available, choosing the right one for your specific needs can maximize both performance and cost-efficiency. Here's a practical guide to selecting the optimal model:

Choose GPT-4o When:

You need to work with multiple media types (text, images, audio)

You want balanced performance across various tasks

You're creating conversational AI applications

You need strong performance across multiple languages

You want the most versatile, general-purpose model

Real-world example: Building a customer service chatbot that needs to understand product images, answer questions via voice, and provide helpful responses in multiple languages.

Choose GPT-4o with Scheduled Tasks When:

You need to set reminders or follow-ups

You're building applications with time-delayed responses

You want to schedule content creation or delivery

You need an AI assistant that can prompt you at specific times

Real-world example: Creating a learning application that follows up with students at predetermined intervals to reinforce concepts through spaced repetition.

Choose o1 When:

You need to solve complex reasoning problems

Mathematical accuracy is critical

You're working on scientific or academic content

You need step-by-step problem solving

Accuracy matters more than speed

Real-world example: Solving complex mathematical proofs or analyzing scientific research papers where precision is essential.

Choose o3-mini When:

Speed is your top priority

You're handling high-volume, simple requests

You need real-time responses

Cost-efficiency is important

The tasks involve straightforward reasoning

Real-world example: Building a high-traffic website chatbot that needs to respond instantly to common customer queries.

Choose o3-mini-high When:

You're focused on coding tasks

You need help with complex programming problems

You're working with logical systems and structures

You need technical documentation assistance

Real-world example: Developing software with real-time code suggestions, debugging assistance, and documentation generation.

From a cost perspective, it's generally most efficient to use the simplest model that meets your needs. For developers, this typically means:

Start with GPT-4o mini for basic tasks

Upgrade to o3-mini or o3-mini-high for specialized applications

Use GPT-4o when multimodal capabilities are needed

Reserve o1 for the most complex reasoning tasks

By strategically selecting the right model for each use case, you can optimize both performance and cost.
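The selection guide can be condensed into a small routing function. This is only a sketch of the rules of thumb in this section: the `Task` fields are hypothetical, the order in which the checks run is an assumption, and the returned strings are the model names used in this article rather than guaranteed API identifiers.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of what a request needs."""
    needs_multimodal: bool = False
    complex_reasoning: bool = False
    coding: bool = False
    latency_critical: bool = False


def choose_model(task: Task) -> str:
    """Pick a model label following the guide above (check order is assumed)."""
    if task.needs_multimodal:
        return "GPT-4o"
    if task.complex_reasoning:
        return "o1"
    if task.coding:
        return "o3-mini-high"
    if task.latency_critical:
        return "o3-mini"
    # Cheapest option for everything else.
    return "GPT-4o mini"


print(choose_model(Task(coding=True)))
print(choose_model(Task()))
```

A real router would likely weigh cost and latency budgets too, but even a simple rule table like this keeps the expensive models reserved for the requests that need them.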

GPT-4o Performance Benchmarks: How Good is OpenAI's New Model?

OpenAI's benchmark testing shows GPT-4o delivering impressive performance across various evaluation metrics. Here's how it compares to other leading models on key benchmarks:

Text Understanding and Reasoning

| Benchmark | GPT-4o | GPT-4 Turbo | Claude 3 Opus | Gemini 1.5 Pro |
|---|---|---|---|---|
| MMLU (%) | 88.7 | 86.5 | 86.8 | 81.9 |
| GPQA (%) | 53.6 | 48.0 | 50.4 | N/A |
| MATH (%) | 76.6 | 72.6 | 60.1 | 58.5 |
| HumanEval (%) | 90.2 | 87.1 | 84.9 | 71.9 |
| MGSM (%) | 90.5 | 88.5 | 90.7 | 88.7 |

MMLU (Massive Multitask Language Understanding) tests knowledge across 57 subjects, while GPQA evaluates graduate-level reasoning ability. GPT-4o shows consistent improvements over GPT-4 Turbo across all text-based benchmarks.

Multilingual Performance

GPT-4o demonstrates particularly strong results on multilingual benchmarks, with significant gains in non-English language tasks. On the M3Exam Zero-Shot test, which evaluates performance across multiple languages, GPT-4o consistently outperforms GPT-4 across languages including Chinese, Portuguese, Thai, and Vietnamese.

Audio Transcription and Translation

For audio capabilities, GPT-4o approaches or exceeds specialized audio models:

Word Error Rate: Comparable to Whisper v3, OpenAI's dedicated speech recognition model

Translation Performance: Competitive with specialized translation models like SeamlessM4T-v2

Vision Understanding

On visual perception benchmarks, GPT-4o achieves state-of-the-art performance for a general-purpose model, showing particular strength in:

Chart and diagram interpretation

Text extraction from images

Visual reasoning tasks

Multi-step visual problem solving

When compared with specialized reasoning models like o1 and o3-mini, GPT-4o shows competitive performance on general tasks while offering the additional advantage of multimodal capabilities. o1 still leads on the most complex mathematical and reasoning tasks, while o3-mini-high retains an edge for specialized coding applications.

The benchmark results confirm that GPT-4o represents a significant advancement over previous models, particularly in its ability to deliver strong performance across different modalities within a single model.

How to Access ChatGPT 4o: All Available Options

GPT-4o is accessible through multiple channels, with different options depending on your needs and budget. Here's a comprehensive guide to all the ways you can start using this powerful AI model.

Is ChatGPT 4o Free? Understanding the Free Tier

Yes, GPT-4o is available to free ChatGPT users—with some limitations. Free users can access GPT-4o's text and image capabilities through the standard ChatGPT interface, but with usage caps:

Limited number of messages per day

Automatic fallback to GPT-4o mini when limits are reached

Restricted access during peak usage times

Some advanced features (like voice capabilities) may be limited

Despite these restrictions, the free tier offers remarkable value, bringing flagship AI capabilities to a wide audience at no cost.

ChatGPT 4o Plus: What You Get for $20/month

ChatGPT Plus subscribers ($20/month) enjoy significantly enhanced access to GPT-4o:

5x higher message limits compared to free users

Priority access during high-traffic periods

Full access to advanced features including voice capabilities

Early access to new features as they roll out

Ability to switch between all available models (GPT-4o, o1, o3-mini, o3-mini-high)

Access to GPT-4o with scheduled tasks (beta)

For regular users who need reliable access to GPT-4o's full capabilities, the Plus subscription offers excellent value.

ChatGPT 4o Pro: Premium Features and Capabilities

OpenAI recently introduced a higher-tier subscription called ChatGPT Pro ($200/month) with additional benefits:

Unlimited usage of all models including GPT-4o

No rate limits or throttling

Highest priority during peak times

Advanced data analysis capabilities

Extended output tokens for longer content generation

Deep research features

This premium tier is designed for professional users who rely heavily on AI for daily work and need guaranteed access without limitations.

GPT-4o API Access: Developer Integration Guide

Developers can access GPT-4o through OpenAI's API. At launch, its pricing and limits improved significantly on GPT-4 Turbo:

Input tokens: $5 per million (50% cheaper than GPT-4 Turbo)

Output tokens: $15 per million (50% cheaper than GPT-4 Turbo)

Rate limits: 5x higher than GPT-4 Turbo

Response speed: 2x faster than GPT-4 Turbo

The API provides comprehensive access to GPT-4o's text and vision capabilities, with audio and video features available to selected partners in a controlled rollout.

For smaller applications or cost-sensitive uses, GPT-4o mini is available through the API at even lower rates:

Input tokens: $0.15 per million

Output tokens: $0.60 per million

Developers can also access reasoning models like o1 and o3-mini through the API for specific use cases. The o3-mini-high option corresponds to o3-mini with its reasoning effort set to high.
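To compare the two price points on a concrete workload, here is a small estimator using the per-million-token rates quoted above. The workload size is a made-up example, the dictionary keys are just labels for this sketch, and current API prices may differ from the figures in this article.

```python
# (input, output) price in USD per million tokens, as quoted above.
PRICES_PER_MILLION = {
    "gpt-4o": (5.00, 15.00),
    "gpt-4o-mini": (0.15, 0.60),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload from its total token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 10,000 requests, 1,500 input and 500 output tokens each.
requests = 10_000
total_input = requests * 1_500
total_output = requests * 500

for model in PRICES_PER_MILLION:
    cost = estimate_cost(model, total_input, total_output)
    print(f"{model}: ${cost:.2f}")
```

For this workload the estimate is $150.00 on GPT-4o versus $5.25 on GPT-4o mini, which is why starting with the smaller model and upgrading only where needed tends to pay off.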

Using GPT-4o on Desktop and Mobile Apps

OpenAI has expanded access to GPT-4o through dedicated applications:

Desktop App: The ChatGPT desktop app for macOS (with Windows coming soon) provides quick access to GPT-4o through a native interface. The app includes special features like screen capture for visual analysis and a universal keyboard shortcut (Option+Space) to summon ChatGPT from anywhere.

Mobile Apps: Official ChatGPT apps for iOS and Android offer full access to GPT-4o, including voice conversation capabilities. The mobile experience is optimized for on-the-go use, with camera integration for visual analysis and voice interaction.

These apps provide more seamless integration with your devices than the web interface, making GPT-4o more accessible and useful in daily workflows.

What Can ChatGPT 4o Do? Real-World Applications

GPT-4o's versatility makes it valuable across numerous domains. Here are some of the most impactful real-world applications enabled by its capabilities.

How GPT-4o Revolutionizes Software Development

GPT-4o offers developers powerful assistance throughout the software development lifecycle:

Code Generation: The model can create complex code across multiple programming languages, translating natural language descriptions into functional implementations. For even better coding performance, developers can also switch to the specialized o3-mini-high model.

Real-time Debugging: By analyzing code visually through screenshots or directly through text, GPT-4o can identify bugs and suggest fixes with contextual understanding.

Documentation: Automatically generate comprehensive documentation from existing code, making projects more maintainable and accessible.

Architecture Planning: Help design software systems and database structures based on requirements descriptions.

Developers report significant productivity gains when using GPT-4o, with some tasks being completed in minutes that would previously have taken hours.

GPT-4o for Content Creation: Text, Images, and More

Content creators benefit from GPT-4o's ability to work across different media types:

Multilingual Content: Create content in multiple languages with improved efficiency, particularly for non-Latin scripts.

Visual Content Analysis: Analyze images, charts, and visual data to incorporate insights into written content.

Image Generation: Create custom images based on text descriptions for illustrations, diagrams, and visual content.

Audio Transcription and Summarization: Convert spoken content to text with high accuracy, then summarize or repurpose it across different formats.

The unified nature of GPT-4o streamlines creative workflows by eliminating the need to switch between different tools for different media types.

ChatGPT 4o in Education and Research

Educators and researchers are finding numerous applications for GPT-4o:

Interactive Learning: Create conversational learning experiences that adapt to student questions and provide immediate feedback.

Multimodal Explanation: Explain complex concepts using a combination of text, generated images, and voice to accommodate different learning styles.

Research Assistance: Analyze academic papers, data visualizations, and experimental results to generate insights and suggest new directions.

Language Learning: Provide real-time pronunciation feedback and conversational practice with native-like speech in multiple languages.

For complex reasoning tasks in scientific or mathematical domains, educators can also leverage the specialized capabilities of the o1 model for even deeper analysis.

GPT-4o Business Applications: From Customer Service to Data Analysis

Businesses across industries are adopting GPT-4o for various applications:

Customer Support: Deploy voice-capable chatbots that can analyze product images, answer questions naturally, and provide assistance across multiple channels.

Data Visualization Analysis: Extract insights from charts, graphs, and business dashboards to inform decision-making.

Meeting Assistance: Transcribe, summarize, and extract action items from meetings in real-time with multimodal understanding.

Scheduled Follow-ups: Use GPT-4o with scheduled tasks to automatically follow up on business conversations or remind team members of commitments.

The dramatic speed improvements in GPT-4o make these business applications practical in ways that weren't possible with previous models.

GPT-4o Limitations: What OpenAI's New Model Can't Do

Despite its impressive capabilities, GPT-4o has several important limitations users should be aware of:

Knowledge Cutoff: GPT-4o's training data ends in October 2023, meaning it lacks knowledge of more recent events unless it uses web browsing features.

Hallucinations: Like all large language models, GPT-4o can sometimes generate incorrect information presented confidently as fact, particularly when dealing with specialized knowledge domains.

Math and Complex Reasoning: While improved, GPT-4o still makes errors in complex mathematical calculations and multi-step reasoning. For these tasks, the specialized o1 model often performs better.

Contextual Understanding Limits: Despite its 128K token context window, GPT-4o may struggle with very long documents or conversations, occasionally forgetting details from earlier in the exchange.

Privacy Considerations: GPT-4o's conversations may be retained by OpenAI (within their data retention policies) and potentially used to improve future models unless users opt out.

Safety Limitations: OpenAI has implemented various safety measures that may restrict GPT-4o's responses in sensitive domains, which some users might find limiting for legitimate use cases.

Accent and Dialect Challenges: While generally good with languages, GPT-4o may struggle with strong accents or regional dialects in audio inputs.

OpenAI is continuously working to address these limitations, but users should keep them in mind when relying on GPT-4o for important tasks.

The Future of GPT-4o: What's Coming Next from OpenAI

OpenAI isn't resting after the GPT-4o release. The company has outlined an ambitious roadmap that will make this already impressive model even more powerful in the coming months.

According to insider reports and OpenAI's public statements, we can expect several major enhancements:

Enhanced voice features with natural interruption capabilities and additional voice options that make conversations feel more human. Early testers report being able to cut in mid-sentence just like they would with a real person.

Full video input processing that will allow GPT-4o to analyze and respond to videos in real-time, opening up applications from education to sports coaching. This builds on the current image analysis capabilities to handle dynamic content.

Advanced API controls giving developers more granular parameters for multimodal generation and fine-tuned outputs. These new endpoints will provide unprecedented control over how GPT-4o processes different types of inputs.

Improved safety measures that better balance protection against misuse with fewer false rejections of legitimate requests. OpenAI is working to reduce the frustrating "false positive" blocks that sometimes occur.

Expanded custom GPT capabilities for creating specialized assistants tailored to specific industries and tasks. This will allow users to build even more powerful domain-specific applications.

Industry analysts see GPT-4o as just the beginning of a new era in AI. The unified multimodal approach—bringing text, image, audio, and video together in one model—is rapidly becoming the new standard that competitors are racing to match.

How to Get the Most Out of GPT-4o: Expert Tips and Tricks

Maximize your results with GPT-4o by applying these expert strategies:

Multimodal Prompting: Combine text, images, and voice in your prompts for the most comprehensive responses. For example, show a chart while asking a specific question about data trends.

Model Switching: Learn when to leverage specialized models—use o1 for complex reasoning, o3-mini for speed-critical applications, and o3-mini-high for coding tasks.

Voice Optimization: When using voice features, speak clearly and provide context. GPT-4o responds better to natural, conversational speech than to robotic commands.

Detailed Prompts: Be specific in your instructions. Instead of "Write a blog post," try "Write a 1000-word blog post about renewable energy trends for a technical audience, focusing on recent innovations in solar storage."

Iterative Refinement: Use GPT-4o's responses as starting points. Ask for revisions, clarifications, or expansions to refine outputs until they match your needs exactly.

Cost Optimization for Developers: When using the API, preprocess inputs to reduce token count, cache common responses, and use the most efficient model for each task type.

Visual Prompt Engineering: When showing images, direct attention to specific areas or aspects you want analyzed. For example, "Look at the chart in the top-right corner and tell me what trend it shows."
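For developers, the multimodal and visual prompting tips above can be sketched as a single API message that pairs a pointed question with an image. This is a minimal sketch, not an official client: build_vision_message is a hypothetical helper, the placeholder bytes stand in for a real chart, and the message shape follows the Chat Completions image input format as commonly documented, so check the current API reference before relying on it. No network call is made.

```python
import base64
import json


def build_vision_message(question: str, image_bytes: bytes,
                         mime_type: str = "image/png") -> dict:
    """Return one user message that pairs a text question with an inline image.

    Hypothetical helper: the dict layout is assumed to match the
    Chat Completions format for image inputs (a base64 data URL).
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            # The text part directs attention to a specific region of the image.
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real chart image read from disk.
chart_bytes = b"\x89PNG placeholder"
message = build_vision_message(
    "Look at the chart in the top-right corner and tell me what trend it shows.",
    chart_bytes,
)

# The request body you would hand to an API client of your choice.
payload = {"model": "gpt-4o", "messages": [message]}
print(json.dumps(payload, indent=2)[:300])
```

Keeping the question and the image in the same message is what lets the model connect "top-right corner" to the picture it is looking at.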

These strategies can significantly improve your results while minimizing costs and maximizing efficiency.
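The model switching and cost optimization tips can be combined in a small wrapper. The sketch below is illustrative only: the task labels, CachedClient, pick_model, normalize_prompt, and fake_send are all hypothetical names, and fake_send stands in for a real API call so the example runs offline. The model names are the ones mentioned in this article.

```python
import hashlib
from typing import Callable, Dict

# Hypothetical routing table; the task labels are illustrative.
MODEL_BY_TASK = {
    "reasoning": "o1",
    "coding": "o3-mini-high",
    "fast": "o3-mini",
}
DEFAULT_MODEL = "gpt-4o"


def pick_model(task: str) -> str:
    """Choose a model for the task, falling back to GPT-4o."""
    return MODEL_BY_TASK.get(task, DEFAULT_MODEL)


def normalize_prompt(prompt: str) -> str:
    """Collapse whitespace runs: fewer tokens, and equivalent prompts share a cache key."""
    return " ".join(prompt.split())


class CachedClient:
    """Wraps a send(model, prompt) callable with an in-memory response cache."""

    def __init__(self, send: Callable[[str, str], str]) -> None:
        self._send = send
        self._cache: Dict[str, str] = {}
        self.api_calls = 0  # counts only the calls that reach the backend

    def ask(self, prompt: str, task: str = "general") -> str:
        model = pick_model(task)
        clean = normalize_prompt(prompt)
        key = hashlib.sha256(f"{model}\n{clean}".encode("utf-8")).hexdigest()
        if key not in self._cache:
            self.api_calls += 1
            self._cache[key] = self._send(model, clean)
        return self._cache[key]


def fake_send(model: str, prompt: str) -> str:
    """Stand-in for a real API call (assumption: swap in your own client)."""
    return f"[{model}] answered {len(prompt)} chars"


client = CachedClient(fake_send)
first = client.ask("Summarize   this\n report", task="fast")
second = client.ask("Summarize this report", task="fast")  # served from cache
print(first, "| backend calls:", client.api_calls)
```

Whitespace collapsing is safe for plain prose but would damage prompts where layout matters, such as code or tables, so a real preprocessing step should be more selective.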

When Was GPT-4o Released and What's Changed Since Then?

GPT-4o was officially announced on May 13, 2024, as part of OpenAI's Spring Updates event. The initial release included:

Text and image capabilities available immediately

Voice features announced but rolled out gradually to Plus users

API access for text and vision capabilities

Controlled access to audio and video capabilities for trusted partners

Since the initial release, OpenAI has delivered several updates:

May 2024: Initial rollout to ChatGPT Plus users and controlled API access.

June 2024: Extended availability to free users (with usage limits) and launched the desktop app for macOS.

July 2024: Expanded voice capabilities to more Plus users and introduced additional voice options.

September 2024: Added further API capabilities and expanded partner access to audio features.

January 2025: Released the scheduled tasks feature in beta for Plus subscribers.

The rollout strategy reflects OpenAI's cautious approach to deploying powerful new capabilities, with gradual expansion as safety measures are refined and infrastructure scaled to meet demand.

Conclusion

GPT-4o represents a genuine breakthrough in AI technology, unifying multiple capabilities into a single, cohesive model that can process and generate content across different media types. Its near-human conversation speeds, improved multilingual capabilities, and unified approach to multimodal understanding set a new standard for AI assistants.

For everyday users, GPT-4o makes AI interaction more natural and intuitive than ever before. For developers and businesses, it opens up new possibilities for creating applications that seamlessly blend text, image, and audio processing in ways that were previously impractical or impossible.

While GPT-4o still has limitations—as all AI models do—its capabilities mark a significant step toward more natural and powerful human-computer interaction. As OpenAI continues to refine and expand its features, GPT-4o will likely become an increasingly central tool for work, creativity, and communication.

Whether you're using the free tier, a Plus subscription, or integrating through the API, GPT-4o offers compelling value and capabilities that weren't available just months ago. As AI development continues its rapid pace, GPT-4o gives us a glimpse of a future where the boundaries between different media types—and between human and artificial intelligence—continue to blur.

GPT-4o FAQ

What is GPT-4o?

GPT-4o is OpenAI's multimodal AI model released in May 2024. The "o" stands for "omni," reflecting its ability to process text, images, audio, and video in a single unified neural network. It represents a significant advancement over previous models by handling multiple types of media simultaneously.

When was GPT-4o released?

GPT-4o was officially announced and released on May 13, 2024, as part of OpenAI's Spring Updates event. The rollout was gradual, with different features becoming available over subsequent months.

What does the "o" in GPT-4o stand for?

The "o" in GPT-4o stands for "omni," indicating the model's omni-modal capabilities—its ability to work with multiple input and output modalities (text, images, audio, and video) within a single neural network.

Is GPT-4o free to use?

Yes, GPT-4o is available to free ChatGPT users with certain limitations. Free users can access GPT-4o's text and image capabilities but with daily message limits. When these limits are reached, the system falls back to GPT-4o mini. Premium features like voice capabilities may require a ChatGPT Plus subscription.

What is GPT-4o mini?

GPT-4o mini is a smaller, faster, and more cost-effective version of GPT-4o. It replaces GPT-3.5 Turbo as OpenAI's standard model for free users while offering multimodal capabilities and improved performance. It's designed to balance efficiency with capability for everyday tasks.

How is GPT-4o different from GPT-4?

GPT-4o differs from GPT-4 in several key ways: it's multimodal (handling text, images, audio, and video natively), much faster (2-3x), cheaper (a 50% cost reduction in the API compared with GPT-4 Turbo), and has improved tokenization for non-English languages. It also offers near-instantaneous voice conversation capabilities that weren't possible with GPT-4.

Can GPT-4o access real-time data?

GPT-4o itself has a knowledge cutoff of October 2023. However, when used in ChatGPT with web browsing enabled, it can access and process current information from the internet. This combination allows it to respond to questions about recent events while leveraging its multimodal capabilities.

What languages does GPT-4o support?

GPT-4o supports over 50 languages with improved efficiency, particularly for non-Latin scripts. Languages like Gujarati, Telugu, Tamil, Hindi, Arabic, Chinese, and Japanese see dramatic improvements in tokenization efficiency, making GPT-4o much more effective for global content.

How fast is GPT-4o compared to previous models?

GPT-4o is approximately 2-3x faster than GPT-4 Turbo for text processing. For voice conversations, the improvement is even more dramatic: GPT-4o responds in around 320 milliseconds on average, compared with an average of 5.4 seconds for the earlier GPT-4-based Voice Mode.
