Fedor Zhilkin

Feb 28, 2025

On January 31, 2025, OpenAI released o3-mini, the newest addition to its reasoning model series. Positioned as a cost-efficient yet powerful model, o3-mini delivers strong STEM capabilities, particularly in science, math, and coding, at lower cost and latency than its predecessors.

The model serves as a smaller version of the expected o3 flagship model and represents a significant advancement over o1-mini, which it effectively replaces in OpenAI's lineup. It brings advanced reasoning capabilities to more users through both ChatGPT's interface and API offerings.

What is o3-mini? Understanding OpenAI's New Model

o3-mini is a specialized reasoning model designed to break down complex problems into manageable parts before solving them. It focuses primarily on STEM domains, providing enhanced performance for mathematical reasoning, scientific problem-solving, and coding tasks.

Unlike larger models that prioritize breadth of knowledge, o3-mini concentrates on depth in technical areas. It's built with a deliberative alignment approach that enables it to reason about safety specifications before responding to queries, resulting in both accurate and safe outputs.

The model introduces a configurable "reasoning effort" feature, allowing users to select between three levels (low, medium, high) that balance speed against performance. At higher reasoning effort levels, o3-mini can match or even exceed the performance of larger models in specific domains.

Why o3-mini Matters

o3-mini represents a significant step in democratizing access to advanced AI capabilities. For the first time, a reasoning model is available to free ChatGPT users, albeit with limitations.

The model addresses a core challenge in AI deployment: delivering high-quality reasoning at lower cost and latency. This makes advanced AI more accessible for applications that require technical precision but can't afford the computational expense of larger models.

For developers, o3-mini introduces function calling, structured outputs, and developer messages to a smaller model—features previously available only in larger, more expensive models. This expands the range of production applications that can use a smaller, more cost-effective model.
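As a rough illustration of function calling, the sketch below defines one tool in the JSON-schema shape that the Chat Completions `tools` parameter expects, then dispatches a tool call locally. The tool `get_build_status` and its return value are hypothetical and exist only for this example.

```python
import json

# Hypothetical tool for illustration only; it is not part of any real API.
def get_build_status(branch: str) -> dict:
    return {"branch": branch, "status": "passing"}

# Tool description in the shape used by the Chat Completions "tools" parameter.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_build_status",
            "description": "Return the CI status for a git branch.",
            "parameters": {
                "type": "object",
                "properties": {"branch": {"type": "string"}},
                "required": ["branch"],
                "additionalProperties": False,
            },
        },
    }
]

# Maps tool names the model may request to local Python callables.
LOCAL_FUNCTIONS = {"get_build_status": get_build_status}

def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute one tool call requested by the model and return a JSON string."""
    if name not in LOCAL_FUNCTIONS:
        raise KeyError(f"unknown tool: {name}")
    kwargs = json.loads(arguments_json)  # the model sends arguments as a JSON string
    return json.dumps(LOCAL_FUNCTIONS[name](**kwargs))
```

In a real request, `TOOLS` would be passed as `tools=TOOLS` alongside `model="o3-mini"`, and each returned tool call's `function.name` and `function.arguments` would be handed to `run_tool_call`.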

The release also comes amid increased competition from models like DeepSeek-R1, showing OpenAI's commitment to maintaining leadership in cost-effective intelligence.

o3-mini Features and Capabilities

o3-mini comes with several key features that set it apart from previous OpenAI models:

Adjustable Reasoning Levels: Choose between low, medium, and high reasoning effort to optimize for either speed or performance.

Developer-Friendly Features: Supports function calling, structured outputs, and developer messages—essential tools for production applications.

Streaming Support: Like o1-mini and o1-preview, o3-mini supports streaming responses for more interactive applications.

Search Integration: Works with search to find up-to-date answers with links to relevant web sources (prototype feature).

Faster Response Times: Delivers responses 24% faster than o1-mini, with an average response time of 7.7 seconds compared to 10.16 seconds.

Higher Rate Limits: ChatGPT Plus and Team users get triple the rate limit compared to o1-mini (150 messages per day vs. 50).

The model does not support vision capabilities, so developers should continue using o1 for visual reasoning tasks.
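The response-time and rate-limit figures in the feature list reduce to simple arithmetic. The short check below uses only the numbers quoted above and shows that "24% faster" refers to the reduction in average response time.

```python
# Figures quoted in the feature list above.
o1_mini_seconds = 10.16
o3_mini_seconds = 7.7

# Relative reduction in average response time.
speedup = (o1_mini_seconds - o3_mini_seconds) / o1_mini_seconds

# Daily message limits for Plus and Team users: o3-mini vs. o1-mini.
rate_limit_ratio = 150 / 50

print(f"response time reduction: {speedup:.1%}")
print(f"rate limit ratio: {rate_limit_ratio:.0f}x")
```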

How to Build a Perfect Meeting Documentation System with mymeet.ai and o3-mini

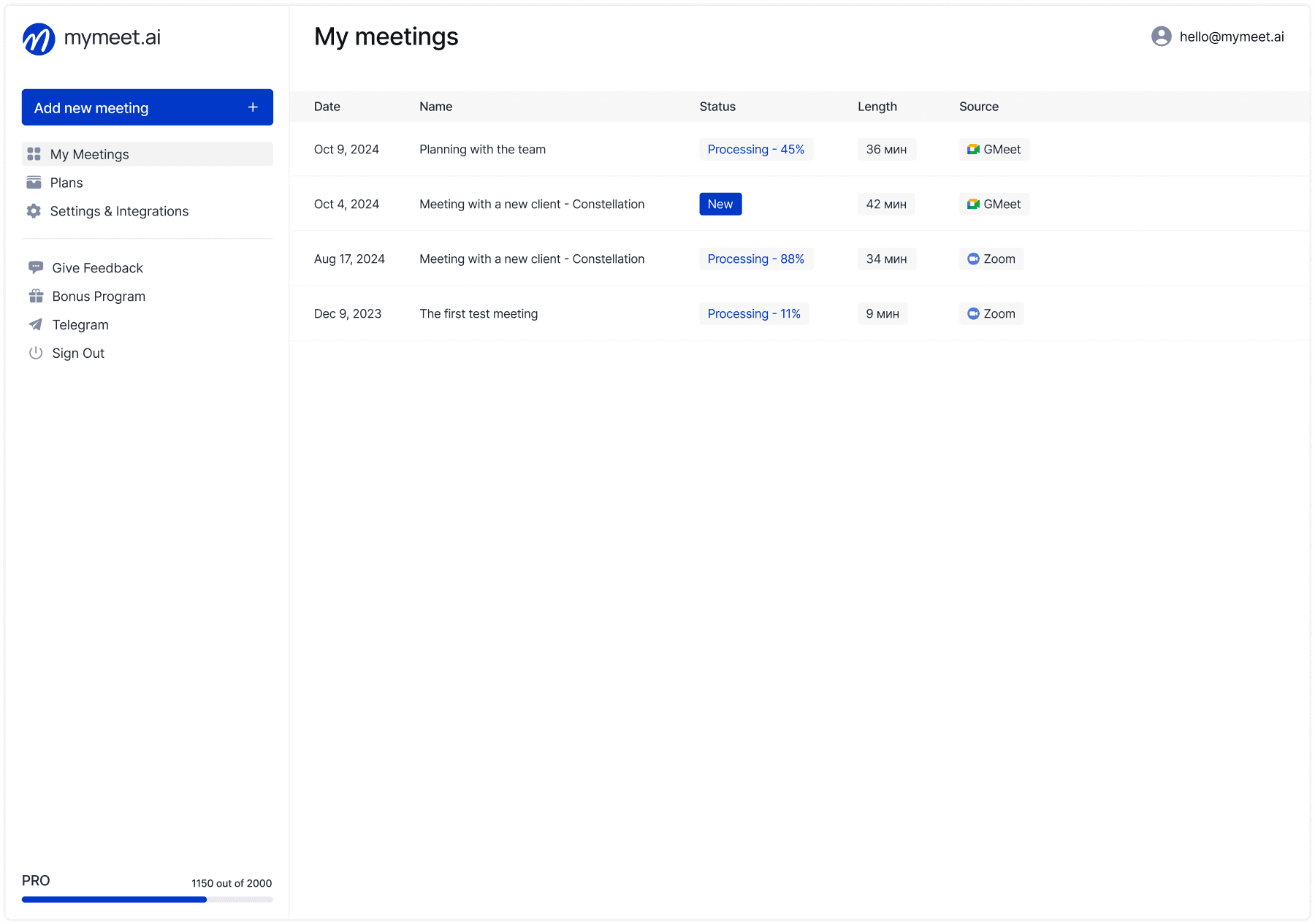

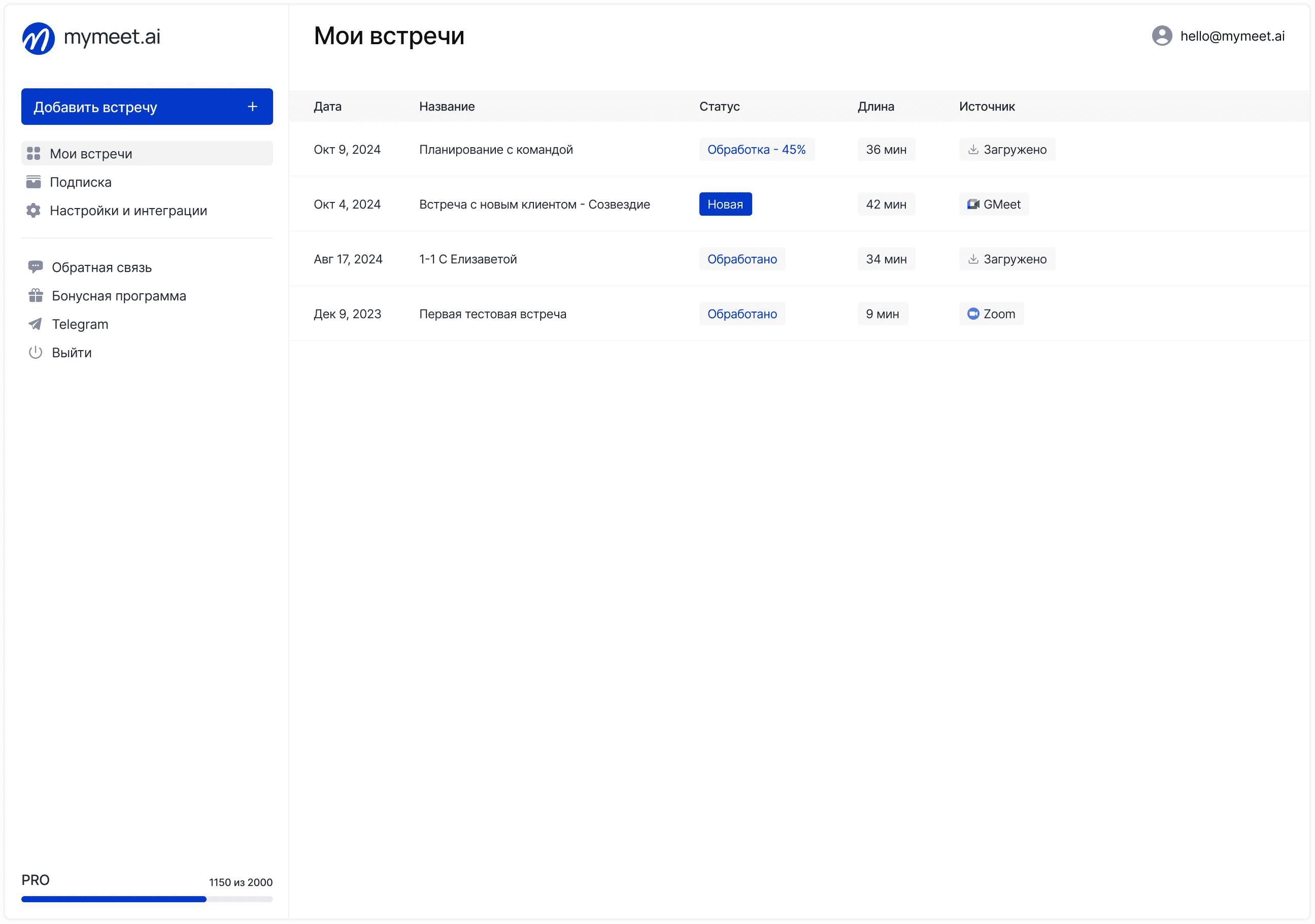

While o3-mini excels at STEM reasoning and problem-solving, pairing it with specialized AI tools can extend its capabilities to new domains. One powerful integration gaining traction among businesses is combining o3-mini with mymeet.ai for comprehensive meeting intelligence.

How mymeet.ai Complements o3-mini

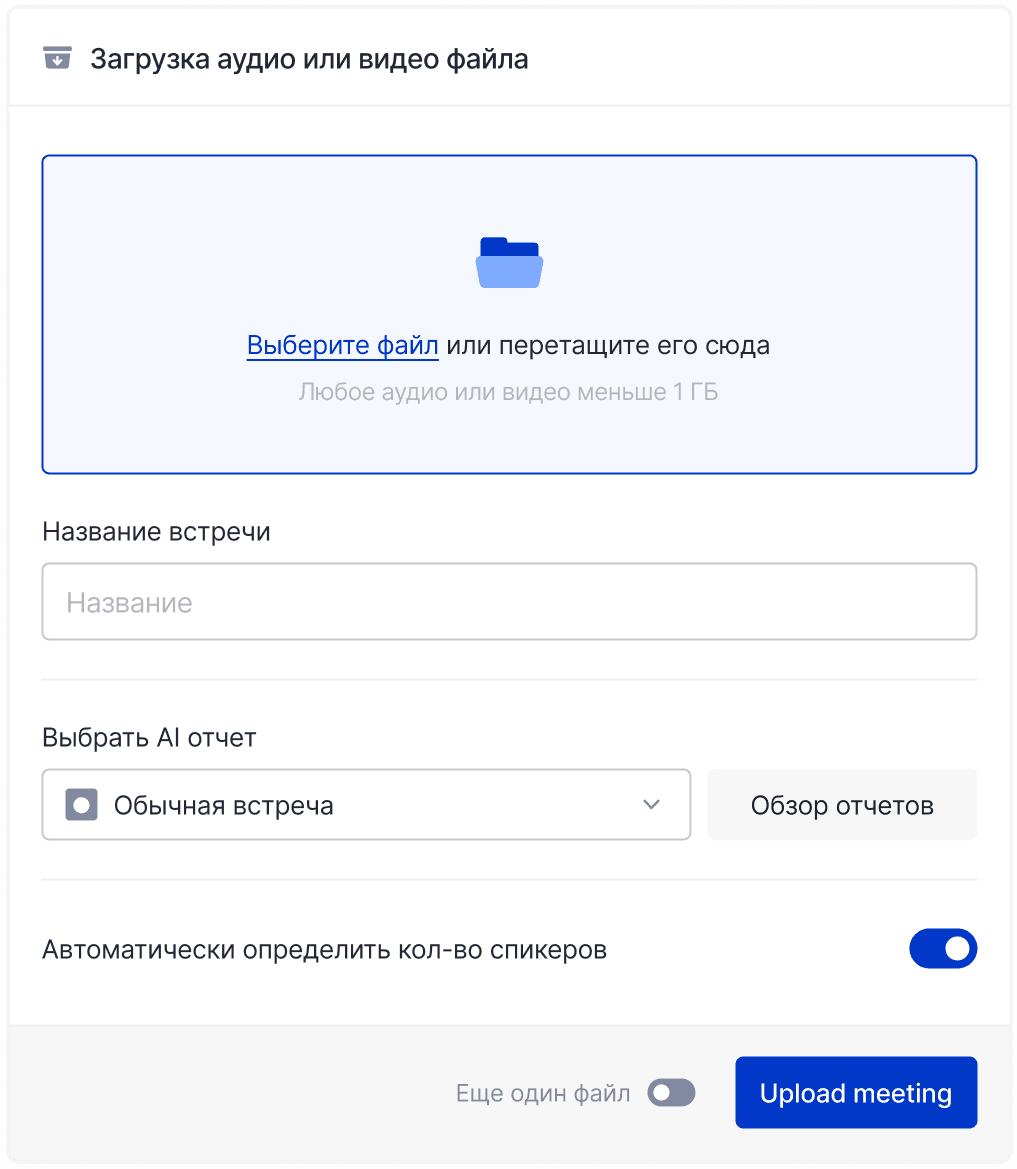

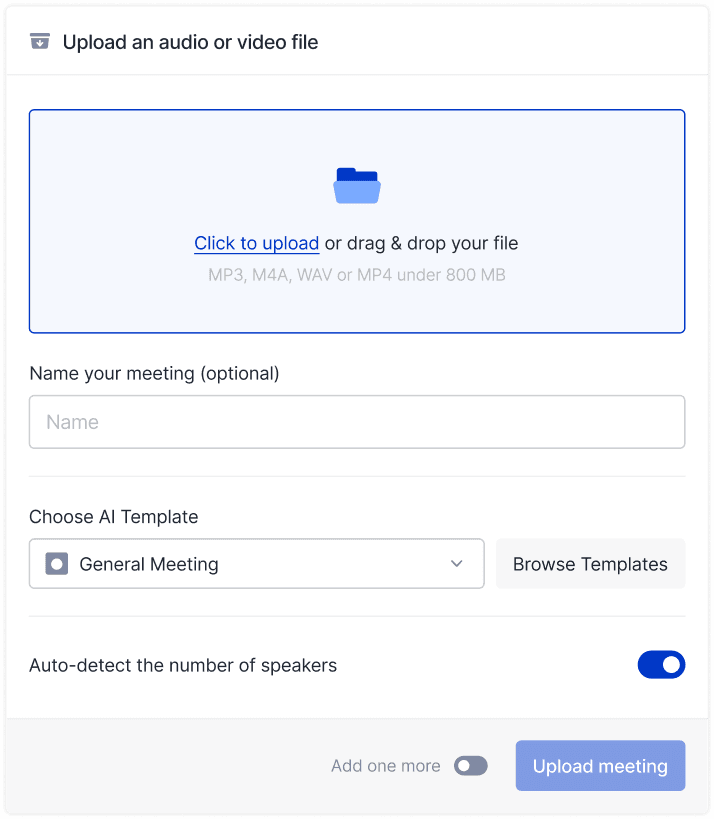

mymeet.ai is an AI meeting assistant that automatically joins, records, and transcribes online meetings across platforms like Zoom, Google Meet, and Yandex Telemost. It addresses one of o3-mini's limitations, the inability to participate directly in live meetings:

| mymeet.ai Capabilities | o3-mini Enhancement |
|---|---|
| Automatic meeting transcription with speaker identification | Technical analysis using adjustable reasoning levels |
| Action item extraction from conversations | Structured organization of complex technical tasks |
| Transcription in 73 languages | Clear explanation of technical concepts from meetings |
| Background noise removal | Deep analysis of technical discussions |

Practical Integration Workflow

The integration creates an efficient workflow that maximizes both tools' strengths:

mymeet.ai joins scheduled meetings through calendar integration

It creates detailed transcripts with speaker identification

The transcript is fed to o3-mini (with appropriate reasoning level)

o3-mini processes the technical content, providing:

Detailed analysis of technical discussions

Code solutions based on requirements mentioned

Mathematical validation of concepts discussed

Structured documentation of technical decisions
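The hand-off step in the workflow above could look roughly like the following sketch. It assumes the transcript arrives as a list of speaker/text pairs (the actual mymeet.ai export format is not described here, so `Utterance` is a hypothetical stand-in) and builds a Chat Completions request that asks o3-mini for structured output. The schema fields `decisions` and `action_items` are illustrative choices.

```python
from dataclasses import dataclass

@dataclass
class Utterance:
    """One transcript line; a hypothetical stand-in for a real export format."""
    speaker: str
    text: str

def format_transcript(utterances):
    """Render the transcript as 'Speaker: text' lines."""
    return "\n".join(f"{u.speaker}: {u.text}" for u in utterances)

# Structured-output request: the reply must be JSON matching this schema.
SUMMARY_FORMAT = {
    "type": "json_schema",
    "json_schema": {
        "name": "meeting_summary",
        "strict": True,
        "schema": {
            "type": "object",
            "properties": {
                "decisions": {"type": "array", "items": {"type": "string"}},
                "action_items": {"type": "array", "items": {"type": "string"}},
            },
            "required": ["decisions", "action_items"],
            "additionalProperties": False,
        },
    },
}

def build_analysis_request(utterances, reasoning_effort="medium"):
    """Build keyword arguments for a Chat Completions call; sends nothing."""
    if reasoning_effort not in ("low", "medium", "high"):
        raise ValueError(f"unsupported reasoning effort: {reasoning_effort}")
    return {
        "model": "o3-mini",
        "reasoning_effort": reasoning_effort,
        "response_format": SUMMARY_FORMAT,
        "messages": [
            {
                "role": "developer",
                "content": "Summarize the technical decisions and action items "
                           "in this meeting transcript.",
            },
            {"role": "user", "content": format_transcript(utterances)},
        ],
    }
```

With the official `openai` Python package, the result could be sent as `client.chat.completions.create(**build_analysis_request(utterances, "high"))`. Keeping the request builder separate from the network call makes the prompt and schema easy to test offline.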

Business Value Proposition

This combination proves particularly valuable for:

Software Development Teams: mymeet.ai captures developer discussions while o3-mini translates requirements into structured code solutions using its superior programming capabilities

Scientific Research: Research meetings recorded by mymeet.ai can be analyzed by o3-mini using its advanced scientific reasoning, helping identify new approaches or validate hypotheses

Technical Education: Training sessions recorded through mymeet.ai can be processed by o3-mini to create comprehensive learning materials with step-by-step explanations

For organizations already investing in ChatGPT Plus or Team subscriptions, this paired approach leverages o3-mini's STEM reasoning while addressing its inability to participate in live meetings—creating a more comprehensive AI solution for technical teams.

o3-mini Performance Benchmarks

o3-mini demonstrates impressive performance across various benchmarks, especially in STEM domains. Here's how it performs on key metrics:

Mathematics (AIME)

With high reasoning effort, o3-mini achieves 83.6% accuracy on the American Invitational Mathematics Examination, outperforming both o1-mini and o1.

PhD-level Science (GPQA Diamond)

On graduate-level science questions across biology, chemistry, and physics, o3-mini with high reasoning effort achieves 77.0% accuracy, comparable to o1.

FrontierMath

In research-level mathematics, o3-mini with high reasoning solves over 32% of problems on the first attempt when using Python tools, including more than 28% of challenging (T3) problems.

Coding Performance

On Codeforces competitive programming, o3-mini achieves progressively higher Elo scores with increased reasoning effort:

Low effort: Outperforms o1-mini

Medium effort: Matches o1's performance

High effort: Achieves 2073 Elo, significantly higher than previous models

Software Engineering (SWE-bench Verified)

o3-mini is OpenAI's highest-performing released model on SWE-bench Verified, achieving 48.9% accuracy with high reasoning effort.

Latency

o3-mini has approximately 2500 ms faster time to first token than o1-mini, delivering a more responsive user experience.

Human Preference Evaluation

In evaluations by expert testers, o3-mini produces more accurate and clearer answers than o1-mini. Testers preferred o3-mini's responses 56% of the time and observed a 39% reduction in major errors on difficult real-world questions.

Model Comparison: o3-mini vs o1 and DeepSeek-R1

| Feature/Metric | o3-mini (low) | o3-mini (medium) | o3-mini (high) | o1-mini | o1 | DeepSeek-R1 |
|---|---|---|---|---|---|---|
| AIME Accuracy | ~60% | ~75% | 83.6% | ~60% | ~75% | ~73% |
| GPQA Accuracy | ~65% | ~70% | 77.0% | ~60% | ~75% | ~68% |
| Codeforces Elo | ~1800 | ~1950 | 2073 | ~1700 | ~1950 | ~1900 |
| SWE-bench | ~30% | ~40% | 48.9% | ~25% | ~35% | ~42% |
| Function Calling | Yes | Yes | Yes | No | Yes | Yes |
| Vision Support | No | No | No | No | Yes | No |
| Avg Response Time | 7.7s | 9.5s | 12s | 10.16s | 15s+ | 8–10s |
| API Pricing (relative) | $ | $ | $$ | $ | $$$ | Free (open) |
| MMLU | 84.9% | 85.9% | 86.9% | 85.2% | ~87% | ~87% |
| General Knowledge | Good | Good | Good | Good | Excellent | Very Good |

o3-mini with medium reasoning effort matches o1's performance in math, coding, and science, while delivering faster responses. With high reasoning effort, it can exceed o1 in specific STEM domains.

Compared to DeepSeek-R1, o3-mini offers competitive performance, particularly in mathematical reasoning and coding tasks with high reasoning effort. While DeepSeek-R1 is available as an open model, o3-mini offers tighter integration with OpenAI's ecosystem and additional developer features.

How to Access o3-mini: ChatGPT and API Options

ChatGPT Access

Free Users:

Select 'Reason' in the message composer

Alternatively, regenerate a response to try o3-mini

Limited number of messages per day

ChatGPT Plus, Team, and Pro Users:

Select "o3-mini" from the model picker dropdown

150 messages per day limit (triple the previous 50 message limit with o1-mini)

Option to select "o3-mini-high" for higher intelligence at the cost of slightly longer response times

Enterprise Users: Access coming in February 2025

API Access

o3-mini is available through:

Chat Completions API

Assistants API

Batch API

Access is currently rolling out to select developers in API usage tiers 3-5.

To implement o3-mini via API:
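A minimal sketch of a Chat Completions call, assuming the official `openai` Python package and an API key in the `OPENAI_API_KEY` environment variable. The helper name `ask_o3_mini` is made up for this example; the client is passed in so the function can be exercised without network access.

```python
def ask_o3_mini(client, prompt: str, reasoning_effort: str = "medium") -> str:
    """Send one prompt to o3-mini and return the text of the first choice."""
    response = client.chat.completions.create(
        model="o3-mini",
        reasoning_effort=reasoning_effort,  # "low", "medium", or "high"
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content

# Usage (requires `pip install openai` and OPENAI_API_KEY to be set):
#   from openai import OpenAI
#   print(ask_o3_mini(OpenAI(), "Prove that the sum of two even integers is even.", "high"))
```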

The model is also accessible via Microsoft Azure OpenAI Service and GitHub Copilot.
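For the Batch API option listed above, requests are submitted as a JSONL file with one request per line. The sketch below only builds that file content. The `custom_id` naming scheme and the helper name are assumptions for illustration, and uploading the file and creating the batch job are left out.

```python
import json

def build_batch_lines(prompts, reasoning_effort="low"):
    """Return JSONL text with one Chat Completions request per prompt."""
    lines = []
    for i, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{i}",  # illustrative id used to match results later
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": "o3-mini",
                "reasoning_effort": reasoning_effort,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines)
```

Writing the returned string to a `.jsonl` file gives an input file in the shape the Batch API expects for Chat Completions requests.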

Practical Applications of o3-mini Today

o3-mini excels in several practical applications:

Mathematical Problem-Solving

Perfect for handling complex mathematical problems, particularly in educational settings or research applications. The high reasoning effort setting makes it especially valuable for tackling challenging mathematical proofs and calculations.

Coding and Software Development

Ideal for:

Algorithm development

Debugging complex code

Explaining programming concepts

Optimizing existing code

Translating between programming languages

Scientific Problem-Solving

Well-suited for:

Lab protocol suggestions

Data analysis guidance

Scientific concept explanations

Literature review assistance

Experimental design feedback

Educational Applications

Effective for:

Creating practice problems with solutions

Explaining complex STEM concepts

Generating educational content

Providing step-by-step problem walkthroughs

Answering technical questions with clear explanations

Business Applications

Useful for:

Data analysis and interpretation

Technical documentation generation

Process optimization modeling

Technical support automation

Prototype development assistance

o3-mini and the Future of AI Models

o3-mini represents a significant trend in AI development: the creation of more specialized, efficient models that deliver exceptional performance in targeted domains while reducing costs.

This approach addresses several key challenges:

Accessibility: By making sophisticated reasoning available at lower cost, more organizations can implement advanced AI.

Efficiency: The focus on optimizing for specific use cases rather than general capabilities allows for better resource utilization.

Specialization: Domain-focused models, such as o3-mini with its STEM emphasis, may become more common than general-purpose models.

Looking ahead, we can expect:

Further refinement of reasoning models across different specialties

More configurable parameters to fine-tune model behavior for specific tasks

Greater integration of reasoning models with tools and external data sources

Continued competition between OpenAI and other AI providers, driving innovation

o3-mini also suggests OpenAI is focused on democratizing access to reasoning capabilities, as evidenced by making the model available to free users for the first time.

o3-mini Cost and Value: Is It Worth It?

o3-mini offers significant value compared to larger models while delivering comparable performance in STEM domains.

API Pricing Considerations

While official pricing may vary, o3-mini is designed to be significantly more cost-effective than larger models like o1. The ability to select different reasoning levels also allows for cost optimization based on specific needs.

For applications requiring heavy STEM reasoning, o3-mini with high reasoning effort offers exceptional value, delivering performance similar to much more expensive models at a fraction of the cost.

Use Case Value Analysis

Educational platforms: High value, as o3-mini provides excellent explanations and problem-solving at lower cost

Coding assistants: Excellent value for code generation and debugging tasks

Scientific applications: Strong value for research assistance and data analysis

General-purpose assistants: Good value if STEM capabilities are important, but larger models may be better for broad knowledge

Cost Optimization Strategies

Use lower reasoning effort for simpler queries and initial drafts

Reserve high reasoning effort for complex problems that require deeper thinking

Consider implementing a tiered approach based on query complexity

Batch similar requests where possible to optimize API usage
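The tiered approach in the strategies above can be sketched as a small router that picks a reasoning effort per query. The keyword list and the 40-word threshold are arbitrary placeholders chosen for illustration, not tuned values; a production system would likely use a better complexity signal.

```python
def choose_reasoning_effort(query: str) -> str:
    """Pick a reasoning effort from a crude, hypothetical complexity heuristic."""
    text = query.lower()
    # Placeholder markers that suggest a problem needing deeper reasoning.
    hard_markers = ("prove", "derive", "optimize", "debug", "race condition")
    if any(marker in text for marker in hard_markers):
        return "high"
    # Long or explicitly multi-step queries get the middle tier.
    if len(text.split()) > 40 or "step by step" in text:
        return "medium"
    # Everything else is treated as a simple query or first draft.
    return "low"
```

The returned value can be passed directly as the `reasoning_effort` parameter of a request, so simple queries stay on the cheaper, faster setting.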

Conclusion

o3-mini is a notable step for specialized AI models, offering strong performance on STEM tasks at low cost and with low latency. Its adjustable reasoning levels let it adapt to the task at hand, from quick responses for simple queries to detailed, step-by-step analysis for challenging problems.

For developers and business users alike, o3-mini is a practical tool that combines cost-efficiency with high accuracy. Its developer features, including function calling and structured outputs, let it connect to external tools and support programming, scientific analysis, and technical support tasks.

Overall, o3-mini makes reasoning models practical for a wider range of applications, from individual users on the free ChatGPT tier to teams building on the API.

o3-mini FAQ: Your Questions Answered

Is o3-mini available for free?

Yes, free ChatGPT users can access o3-mini by selecting "Reason" in the message composer or by regenerating responses, though with limited usage compared to paid plans.

Does o3-mini replace o1-mini?

Yes, o3-mini is the recommended replacement for o1-mini, offering better performance, faster responses, and enhanced developer features.

How does o3-mini compare to DeepSeek-R1?

o3-mini with high reasoning effort outperforms DeepSeek-R1 in mathematical reasoning and coding benchmarks, though DeepSeek-R1 offers the advantage of being an open model.

Does o3-mini support vision?

No, o3-mini does not support vision or image processing capabilities. For visual reasoning tasks, OpenAI recommends using o1.

What are the rate limits for o3-mini?

ChatGPT Plus and Team users get 150 messages per day with o3-mini, triple the previous 50 message limit with o1-mini. Pro users have unlimited access to both o3-mini and o3-mini-high.

Can o3-mini be used with other tools and APIs?

Yes, o3-mini supports function calling and structured outputs, making it compatible with external tools and APIs for more complex applications.

How does o3-mini ensure safe outputs?

OpenAI trained o3-mini using deliberative alignment, where the model reasons about safety specifications before responding. It was also rigorously tested through red-teaming and external evaluations.

When should I use o3-mini vs o1?

Use o3-mini for coding, math, scientific reasoning, and when cost-efficiency and speed are important. Use o1 for broader knowledge tasks, visual reasoning, and more creative applications.
