Technology & AI

Radzivon Alkhovik

Dec 15, 2025

In January 2025, the Chinese company DeepSeek sent shockwaves through the technology world. Its DeepSeek-R1 model showed results comparable to GPT-4 and Claude, yet DeepSeek reportedly spent about $6 million on development versus an estimated $500 million at OpenAI. Within days, the app became the most downloaded free app in the US App Store, surpassing ChatGPT.

On January 27, NVIDIA shares fell 17%, wiping out nearly $600 billion in market value, the largest single-day loss for any company in US stock market history. Many have called DeepSeek's release a "Sputnik moment" that shook confidence in US technological superiority.

In this guide, we'll break down what DeepSeek is, how the Chinese startup achieved such results with limited access to advanced chips, whether the model is safe to use, and what this means for global AI competition.

What Is DeepSeek

DeepSeek is a Chinese artificial intelligence company founded in 2023. It builds open large language models for natural language processing, code generation, and mathematical reasoning. In a short time, DeepSeek went from an unknown startup to a serious player in the global AI arena.

The flagship model, DeepSeek-R1, uses a Mixture-of-Experts (MoE) architecture with 671 billion parameters. This architecture lets the model activate only the parts it needs for each token (about 37 billion parameters), saving computational resources. In DeepSeek's published benchmarks, its models outperform Llama 3.1 and Qwen 2.5 and are comparable to GPT-4o and Claude 3.5 Sonnet.
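Here is a toy Mixture-of-Experts layer in plain Python that makes "activating only the parts it needs" concrete. It is a minimal sketch under simplifying assumptions, not DeepSeek's implementation. The router is a single dot product per expert, and the experts are tiny hypothetical functions. DeepSeek's actual DeepSeekMoE design adds refinements, such as shared experts that every token uses, and those are omitted here. The key point is that only the top-k experts chosen by the router are ever evaluated.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route x to the top-k experts; return (combined output, indices of experts used)."""
    # Router: one score per expert (dot product of x with that expert's router vector).
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    probs = softmax(logits)
    # Keep only the k highest-probability experts; the others are never called.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)          # only k expert computations happen
        gate = probs[i] / norm     # renormalize gate weights over the chosen experts
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out, top


# Usage with four hypothetical "experts" that just scale their input.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, used = moe_forward([2.0, 1.0], router, experts, k=2)
print(used)  # [0, 1]: experts 2 and 3 were skipped entirely
```

Scaled up, the same pattern explains the numbers above. A model can hold 671B parameters while computing with only the roughly 37B that belong to the experts selected for each token.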

Key DeepSeek Features:

Openly released model weights, available for free use

Publication of detailed methodology for all research

Reported development cost of about $6M vs an estimated $500M for competitors

Mixture-of-Experts architecture for efficient resource use

Support for 338 programming languages in DeepSeek-Coder-V2

Context window up to 128,000 tokens for large inputs

Who's Behind DeepSeek

DeepSeek was founded in 2023 by Liang Wenfeng, a Chinese entrepreneur and mathematical prodigy born in 1985 in Guangdong province. Before launching DeepSeek, he created High-Flyer, the hedge fund that now finances the company. By the end of 2017, most of High-Flyer's trading operations were managed by AI systems.

Foreseeing AI's importance, Liang began accumulating NVIDIA GPUs in 2021, before the US government restricted chip sales to China. He gathered about 10,000 NVIDIA A100 GPUs, which became the company's hardware foundation. The first breakthrough came in May 2024 with the release of DeepSeek-V2, which set off a price war among Chinese tech giants.

Key DeepSeek Development Milestones:

February 2016: Liang Wenfeng founded High-Flyer hedge fund

2021: Started accumulating NVIDIA A100 GPUs (about 10,000 units)

July 2023: Official registration of DeepSeek as separate company

May 2024: DeepSeek-V2 release, launching price war in China

December 2024: DeepSeek-V3 release with 671B parameters

January 2025: DeepSeek-R1 release, entering international market

DeepSeek vs ChatGPT and Other AI Models

To understand DeepSeek's place in the AI ecosystem, it helps to compare it with popular models. The comparison reveals not only technical differences but also different approaches to AI development and commercialization.

| Feature | DeepSeek | ChatGPT | Claude | Gemini |
|---|---|---|---|---|
| Key models | V3, R1 | GPT-4, GPT-4 Turbo | Claude 3.5 | Gemini 2 |
| Open source | Yes | No | No | No |
| Development cost | $6M | ~$500M | ~$200M | ~$700M |
| Best for | Math, code | Conversations | Reasoning | Creative |

Main DeepSeek Advantages Over Competitors:

27x cheaper to use ($0.55 per 1M input tokens vs $15 for o1)

Open source for free use and modification

Full methodology transparency with all research published

MoE architecture enables computational resource savings

Efficient knowledge distillation, reportedly including synthetic data from o1

Main DeepSeek Models

DeepSeek offers a family of specialized models for various tasks. Each model is optimized for specific applications, from general chat to programming.

DeepSeek Model Lineup:

DeepSeek-V3: universal chat assistant (671B parameters, 37B active)

DeepSeek-R1: reasoning model with chain of thought (128K context)

DeepSeek Coder: for programming (1.3B, 5.7B, 6.7B, 33B versions)

DeepSeek-Coder-V2: improved version (338 programming languages, 128K context)

Janus-Pro-7B: text-to-image generation (7B parameters)

DeepSeek-V3

DeepSeek-V3 is a general-purpose language model and a competitor to GPT-4o. It has 671B parameters (37B active per token) and was trained on 14.8 trillion tokens. It uses Multi-head Latent Attention (MLA) and the DeepSeekMoE architecture for fast, efficient inference.
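Multi-head Latent Attention saves memory mainly by shrinking the key-value (KV) cache, which grows with every token the model processes. The sketch below illustrates the core idea under stated assumptions. Each token's hidden state is compressed into a small latent vector, only that latent is cached, and keys and values are reconstructed from it when attention needs them. The dimensions and random weights are hypothetical toy values. Real MLA also handles positional (RoPE) information separately and can fold the up-projections into other weights, and none of that is modeled here.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def full_kv_floats(n_tokens: int, d_kv: int) -> int:
    """Floats a standard cache would store: one key and one value vector per token."""
    return n_tokens * 2 * d_kv


class LatentKVCache:
    """Toy MLA-style cache: store one small latent per token, rebuild K and V on demand."""

    def __init__(self, d_model: int, d_latent: int, d_kv: int, seed: int = 0):
        rng = random.Random(seed)
        self.w_down = rand_matrix(d_latent, d_model, rng)  # hidden state -> latent
        self.w_up_k = rand_matrix(d_kv, d_latent, rng)     # latent -> keys
        self.w_up_v = rand_matrix(d_kv, d_latent, rng)     # latent -> values
        self.latents: List[Vector] = []

    def append(self, hidden: Vector) -> None:
        # Only d_latent floats are cached for this token.
        self.latents.append(matvec(self.w_down, hidden))

    def keys_values(self):
        keys = [matvec(self.w_up_k, c) for c in self.latents]
        values = [matvec(self.w_up_v, c) for c in self.latents]
        return keys, values

    def cached_floats(self) -> int:
        return sum(len(c) for c in self.latents)


# Usage with hypothetical sizes: 10 tokens, 64-dim keys/values, 8-dim latent.
cache = LatentKVCache(d_model=64, d_latent=8, d_kv=64)
rng = random.Random(1)
for _ in range(10):
    cache.append([rng.gauss(0.0, 1.0) for _ in range(64)])
keys, values = cache.keys_values()
print(cache.cached_floats(), "cached floats vs", full_kv_floats(10, 64))  # 80 vs 1280
```

The trade-off is extra compute to re-expand keys and values in exchange for a much smaller cache. That matters most with long contexts, where the cache, not the weights, can dominate memory.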

The model outperforms other open models and competes with closed commercial ones, yet it required only 2.788M H800 GPU hours for training. After pre-training, it underwent supervised fine-tuning and reinforcement learning.
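The often-quoted "$6 million" figure can be reproduced from the GPU-hour count with simple arithmetic. This sketch assumes the rental rate of $2 per H800 GPU hour used in DeepSeek's V3 technical report and the 2,048-GPU cluster size DeepSeek reported. Both are inputs to the calculation rather than independently verified costs. The result covers only compute for the final training run. Earlier research, ablation experiments, hardware purchases, and salaries are excluded, which is why the true total is disputed.

```python
TRAINING_H800_GPU_HOURS = 2.788e6  # GPU hours stated above for DeepSeek-V3
ASSUMED_USD_PER_GPU_HOUR = 2.0     # assumed rental price per H800 GPU hour
ASSUMED_CLUSTER_GPUS = 2048        # reported cluster size, used here as an assumption


def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Compute-only cost: GPU hours times an hourly rental rate."""
    return gpu_hours * usd_per_gpu_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Elapsed days if the GPU hours are spread evenly across the cluster."""
    return gpu_hours / num_gpus / 24


cost = training_cost_usd(TRAINING_H800_GPU_HOURS, ASSUMED_USD_PER_GPU_HOUR)
days = wall_clock_days(TRAINING_H800_GPU_HOURS, ASSUMED_CLUSTER_GPUS)
print(f"Estimated compute cost: ${cost / 1e6:.3f}M")    # $5.576M
print(f"Approximate wall-clock time: {days:.0f} days")  # 57 days
```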

DeepSeek-R1

DeepSeek-R1 is a model for advanced reasoning tasks. It is built on DeepSeek-V3 and competes with OpenAI's o1 at a lower cost. The model has 671 billion parameters and a 128,000-token context window.

DeepSeek-R1 uses a chain-of-thought approach, showing its "thinking" process before giving the final answer. The DeepSeek app built on this model became the most downloaded app in the US App Store and triggered a reassessment of AI infrastructure investment.
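If you run the open-weight R1 model yourself (for example, through ollama), the "thinking" typically arrives in the same text stream as the answer, wrapped in `<think>...</think>` tags. The helper below separates the two so an application can show or hide the reasoning. It is a sketch that assumes this tag convention. Some chat templates insert the opening `<think>` tag into the prompt, so the helper also accepts output that contains only the closing tag. Hosted APIs may return the reasoning in a separate field instead.

```python
from typing import Tuple

OPEN_TAG = "<think>"
CLOSE_TAG = "</think>"


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Split raw model output into (reasoning, final answer)."""
    end = raw.find(CLOSE_TAG)
    if end == -1:
        # No reasoning block: treat everything as the answer.
        return "", raw.strip()
    start = raw.find(OPEN_TAG)
    # If the opening tag is missing (template already inserted it), reasoning starts at 0.
    reasoning_start = start + len(OPEN_TAG) if 0 <= start < end else 0
    reasoning = raw[reasoning_start:end].strip()
    answer = raw[end + len(CLOSE_TAG):].strip()
    return reasoning, answer


reasoning, answer = split_reasoning("<think>Add 2 and 2.</think>The answer is 4.")
print(answer)  # The answer is 4.
```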

DeepSeek Coder and Coder-V2

DeepSeek Coder is a specialized model for software development. It was trained on a dataset of 87% code and 13% natural language and is available in 1.3B, 5.7B, 6.7B, and 33B parameter sizes. At release, it showed the best performance among public models on the HumanEval, MultiPL-E, and MBPP benchmarks.

DeepSeek-Coder-V2 is an improved version with coding performance comparable to GPT-4 Turbo. Language support expanded from 86 to 338 programming languages, and the context window grew from 16K to 128K tokens.

Benefits of Using DeepSeek

DeepSeek offers a range of competitive advantages for businesses and developers. Low cost, efficiency, and openness make it an attractive choice for many use cases.

Key DeepSeek Benefits:

Usage cost 27x lower than o1 ($0.55 vs $15 per 1M input tokens)

Reported development cost of $6M vs an estimated $500M for OpenAI

40% development time reduction for programmers

Open source for customization to specific needs

Local deployment option without sending data to cloud

Support for 73 languages for multilingual teams

Accuracy over 90% on specialized tasks

Revolutionary Economy

DeepSeek reportedly spent about $6 million on the final training run of DeepSeek-V3, the base model behind R1, versus an estimated $500 million for OpenAI's o1. Usage is also far cheaper: input tokens cost $0.55 per 1M (vs $15 for o1), and output tokens cost $2.19 per 1M (vs $60 for o1).

These economics open up AI technologies to smaller companies and startups that previously couldn't afford frontier models. A low entry barrier democratizes access to powerful AI tools.
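Simple arithmetic turns the per-token prices above into workload costs. This sketch uses the list prices quoted in this article and a hypothetical monthly workload of 50M input tokens and 10M output tokens. Real prices change over time and may include discounts, for example for cached input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """API list prices in USD per 1M tokens."""
    input_per_m: float
    output_per_m: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        return (input_tokens * self.input_per_m + output_tokens * self.output_per_m) / 1_000_000


DEEPSEEK_R1 = Pricing(input_per_m=0.55, output_per_m=2.19)
OPENAI_O1 = Pricing(input_per_m=15.00, output_per_m=60.00)

# Hypothetical monthly workload.
input_tokens, output_tokens = 50_000_000, 10_000_000
r1 = DEEPSEEK_R1.cost(input_tokens, output_tokens)
o1 = OPENAI_O1.cost(input_tokens, output_tokens)
print(f"R1: ${r1:,.2f}  o1: ${o1:,.2f}  ratio: {o1 / r1:.1f}x")
# R1: $49.40  o1: $1,350.00  ratio: 27.3x
```

The exact ratio depends on the input/output mix, but both price components differ by roughly the same factor (about 27x), so the overall ratio stays near 27x for any mix.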

Openness and Transparency

DeepSeek publishes its research with full methodology. Open-source releases allow free use and modification of the models, and researchers worldwide can learn from DeepSeek's achievements, accelerating innovation across the industry.

DeepSeek Use Cases

DeepSeek's advanced AI capabilities make it a versatile tool across domains. The model finds applications from personal productivity to enterprise solutions.

Main Use Scenarios:

Code generation and debugging in 338 programming languages

Customer support automation through 24/7 chatbots

Feedback analysis and trend detection in data

Scientific paper and technical documentation summarization

Multilingual translation for international teams

Creating reports, meeting summaries and documentation

Assistance with mathematical calculations and statistical analysis

For Developers

DeepSeek generates clean, efficient code snippets in many programming languages and automates routine tasks like debugging, testing, and formatting. With a context window of up to 128K tokens in DeepSeek-Coder-V2, it is especially effective for large projects.

For Business

DeepSeek-based chatbots can automate answers to frequent questions, providing round-the-clock customer support without a large operator staff. The model can also analyze feedback, detect trends in large volumes of data, and generate reports and documentation automatically.

For Researchers

DeepSeek can summarize scientific papers so researchers quickly grasp the key ideas, and it can assist with data analysis and statistical calculations. Because the models are open, researchers can experiment with them and adapt them to specific needs.

DeepSeek Limitations

Despite its advantages, DeepSeek has drawbacks you should know about before using it. Understanding these limitations helps you make informed decisions about how to apply the model.

Main DeepSeek Limitations:

Data storage in China raises privacy concerns

Censorship of queries about Chinese government and political topics

Security vulnerabilities—user data leak in January 2025

Not optimal for creative tasks and artistic content

Mobile app requests excessive device data access

Bans or restrictions in Taiwan, Italy, and Australia, and at NASA and the US Navy

Unclear full development cost (possible hidden R&D expenses)

Data Storage in China

When you use the DeepSeek chat service, your data is stored in the People's Republic of China. This raises privacy concerns, especially for sensitive information. In January 2025, a vulnerability was also reported on the DeepSeek website that exposed data including user chats.

Excessive Censorship

DeepSeek blocks queries about the Chinese government and politically sensitive topics. The model refuses to discuss the Tiananmen Square events, Tibet, Taiwan, and the persecution of Uyghurs. These restrictions are built in at the training level, which makes the model difficult to use for certain kinds of research.

Safe DeepSeek Usage

The University of Notre Dame, in partnership with its information security department, developed a guide to using DeepSeek safely. The key is to distinguish between DeepSeek's services and the models themselves.

Not recommended (controlled by DeepSeek):

DeepSeek website—vulnerability disclosed user data

Mobile app—requests excessive data access

DeepSeek API—not approved for sensitive data

Safe Options:

Chat through US providers (Perplexity)—public data only

Local use through Hugging Face and ollama

AWS Bedrock—with privacy guarantees from Amazon

Local Use

DeepSeek models are available on Hugging Face and can run on your own hardware through tools like ollama. For additional security, limit use to devices with restricted public internet access.
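Here is a minimal sketch of local use. The snippet sends a prompt to ollama's local REST API (`/api/generate` on the default port 11434) using only the Python standard library. It assumes ollama is installed and running and that a DeepSeek model has already been pulled, for example with `ollama pull deepseek-r1`. The model tag is an assumption, so substitute whichever DeepSeek variant fits your hardware. Because the request goes to localhost, prompts stay on your machine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # ollama's default local endpoint
DEFAULT_MODEL = "deepseek-r1"                      # assumed tag; use the one you pulled


def build_request(prompt: str, model: str = DEFAULT_MODEL, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming generate request for a local ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = DEFAULT_MODEL, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text from the JSON 'response' field."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]


# Usage (requires a running ollama server with the model pulled):
# print(generate("Explain Mixture-of-Experts in two sentences."))
```

Reasoning models like R1 can take a while to answer on modest hardware, which is why the sketch uses a generous timeout.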

AWS Bedrock

Amazon offers DeepSeek through Bedrock with data privacy guarantees, and AWS is a close partner that ensures the security of all models it hosts. At Notre Dame, programmers who want to access DeepSeek this way can reach out to the AI Enablement team.
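For developers with Bedrock access, a call through the AWS SDK for Python (boto3) might look like the sketch below, which uses the Bedrock Runtime `converse` API. The model identifier is an assumption. DeepSeek-R1 may need to be invoked through a cross-region inference profile whose ID varies by region, so check the Bedrock console for the value that applies to your account. The region and sampling settings are also illustrative choices.

```python
from typing import Any, Dict

# Assumption: verify the model or inference-profile ID in the Bedrock console.
MODEL_ID = "us.deepseek.r1-v1:0"


def build_converse_kwargs(prompt: str, max_tokens: int = 1024, temperature: float = 0.6) -> Dict[str, Any]:
    """Arguments for bedrock-runtime converse(): one user turn plus inference settings."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_bedrock(prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt to Bedrock and return only the text blocks of the reply."""
    import boto3  # imported lazily so the request builder works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_kwargs(prompt))
    # A reasoning model's reply may contain non-text blocks; keep just the text parts.
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)


# Usage (requires AWS credentials and Bedrock model access):
# print(ask_bedrock("Summarize the benefits of Mixture-of-Experts models."))
```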

Who DeepSeek Is For

DeepSeek is built for professionals who work on complex tasks. Developers will appreciate its strong programming performance and low costs, while researchers get a customizable model for deep analysis.

DeepSeek Target Audience:

Software developers — code generation in 338 programming languages

Startups — economical AI solution without massive budgets

Researchers — open models for experiments and adaptation

Students — studying advanced AI concepts with transparent methodology

Data scientists — data analysis and mathematical computations

Small business — affordable AI automation without vendor lock-in

Open-source enthusiasts — ability to contribute model improvements

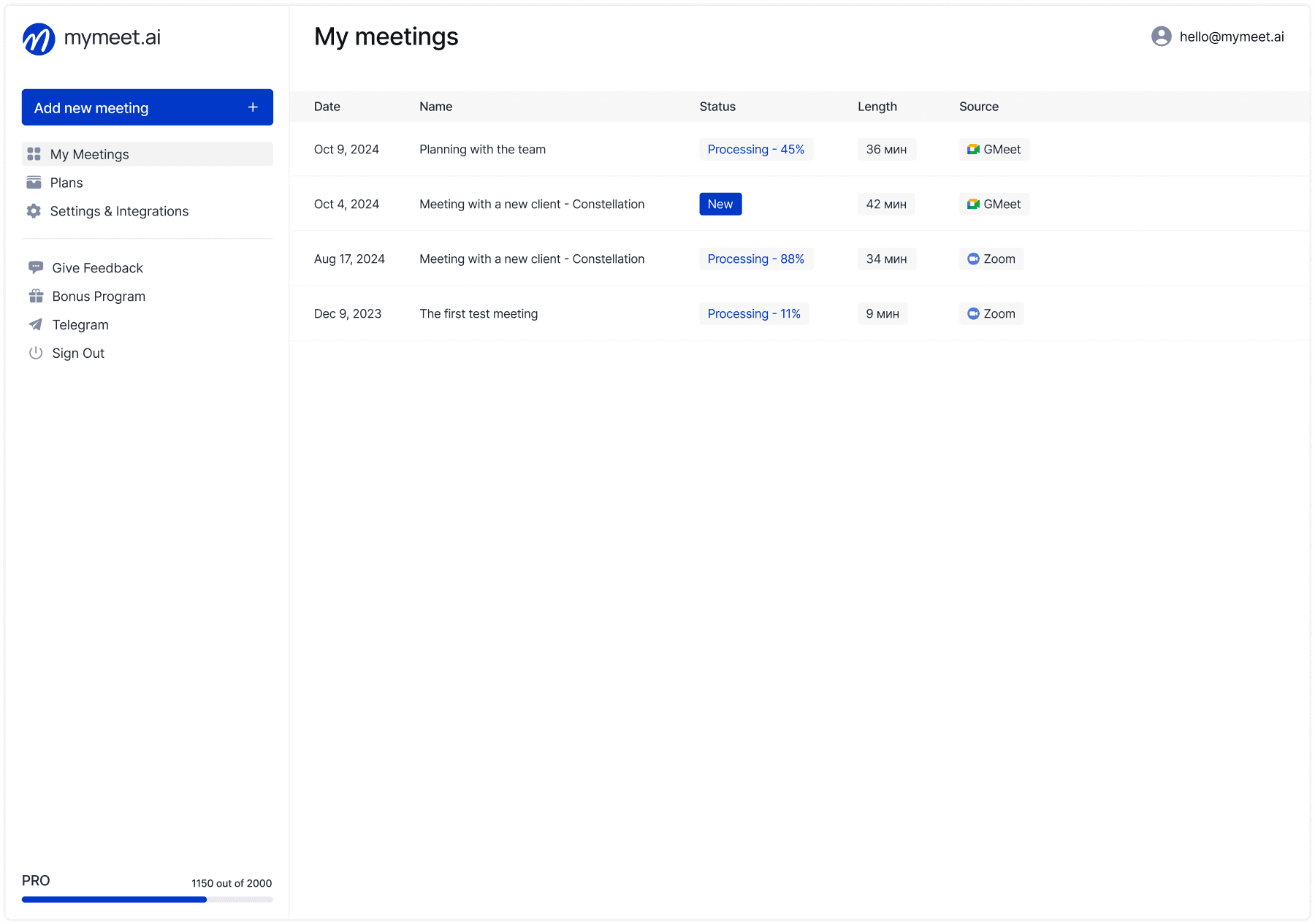

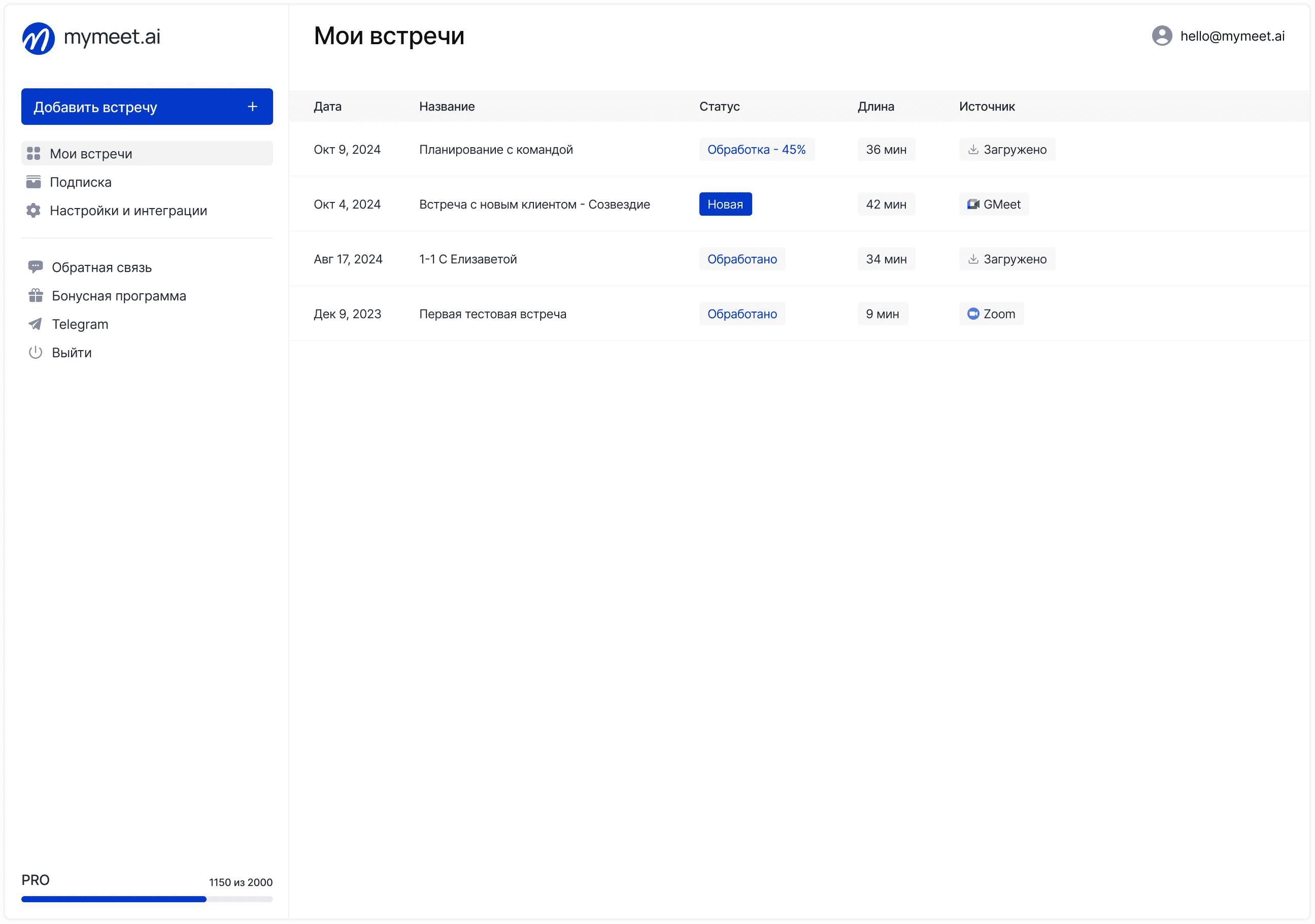

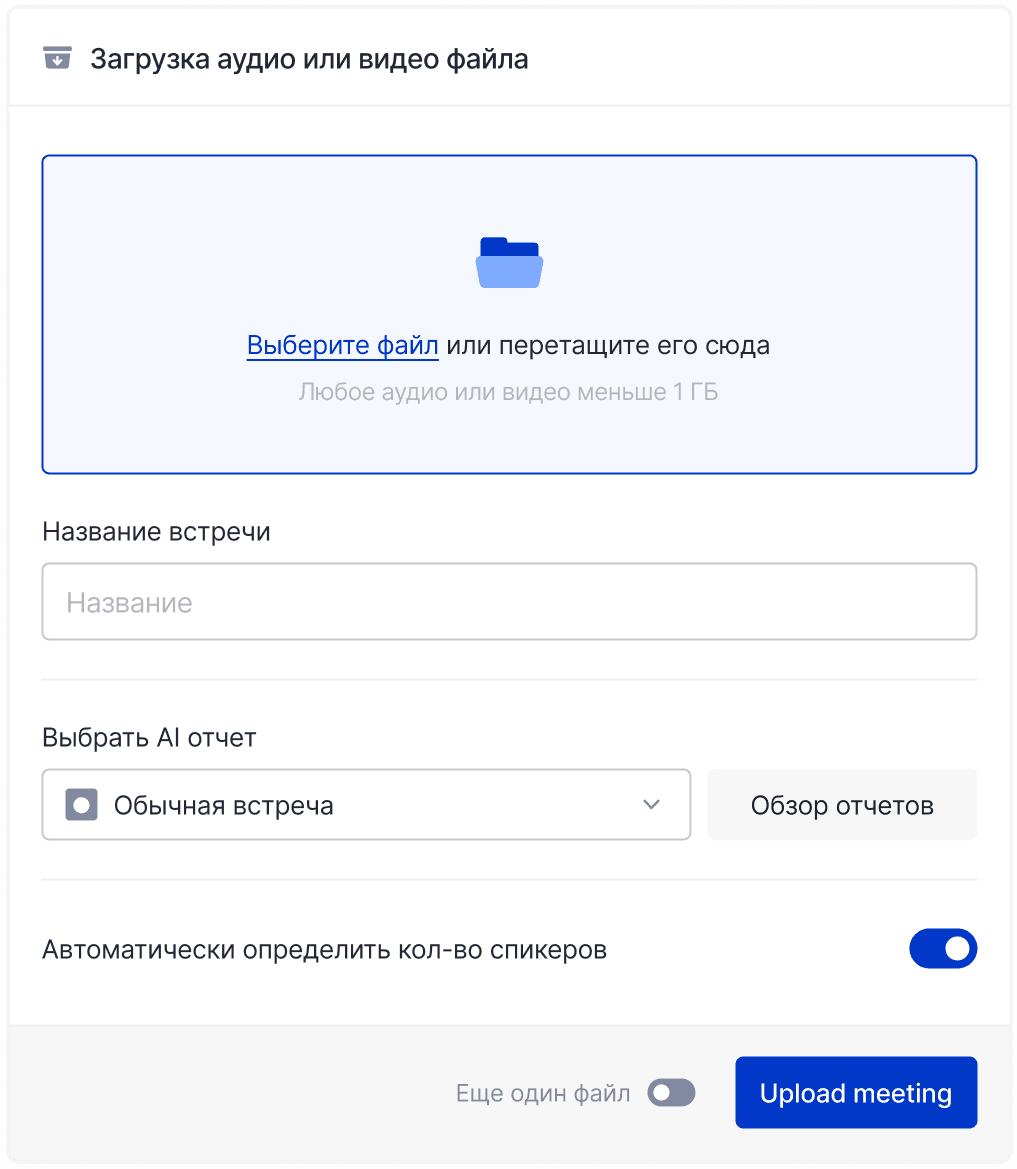

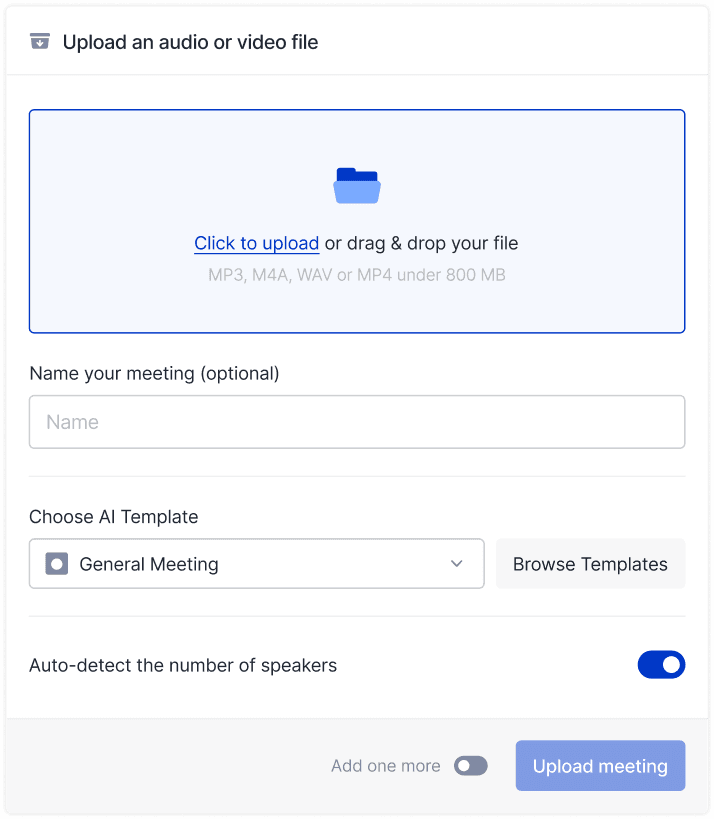

mymeet.ai for Recording and Analyzing Meetings with AI

DeepSeek shows how AI is becoming more efficient and accessible. But business meetings and teamwork need specialized solutions optimized for specific business tasks.

mymeet.ai is an AI assistant for online meetings. It automatically records calls, creates transcripts with speaker identification, and generates structured reports with key decisions and tasks.

What mymeet.ai Can Do:

✅ Automatic recording — Zoom, Google Meet, Microsoft Teams, Yandex.Telemost

✅ Accurate transcription — 95% accuracy for Russian, supports 73 languages

✅ AI reports — structured summaries with decisions, tasks, next steps

✅ Smart search — find what was discussed at any meeting through questions to AI

✅ Integrations — calendar sync, sending reports to CRM

✅ Security — data in Russia, Federal Law 152 compliance

✅ Export — DOCX, PDF, JSON formats

Case Study: A sales team held 30-40 client meetings a week, and manual note-taking took 10-15 hours. After the team implemented mymeet.ai, the process was automated: the system recorded meetings, created transcripts, generated reports with client objections, and automatically sent summaries to the CRM. Documentation time dropped to zero.

Try mymeet.ai free: 180 minutes of processing, no card required.

DeepSeek Pros and Cons

DeepSeek sets new standards for AI efficiency, but it has both strengths and limitations. A balanced assessment helps you understand when the model fits and when to consider alternatives.

DeepSeek Pros:

✅ Revolutionary economy — development for $6M vs $500M for competitors, 27x cheaper to use

✅ Full openness — source code, published methodology, free access for researchers

✅ Training efficiency — achieving competitive results on old chips through algorithmic innovations

✅ Strong math and code — excellent results on HumanEval, MBPP, support for 338 programming languages

✅ MoE architecture — efficient resource use through specialized sub-models

✅ Multiple models — from general chatbots to specialized coders

DeepSeek Cons:

⚠️ Data storage in China — privacy concerns for sensitive information

⚠️ Excessive censorship — blocking queries about Chinese government and political topics

⚠️ Security vulnerabilities — data leaks and exploits in web version (January 2025)

⚠️ Not for creativity — focus on reasoning, less suited for artistic content

⚠️ Unclear full cost — claimed $6M may not include all R&D expenses

⚠️ Global bans — restrictions in Taiwan, Italy, Australia, NASA, US Navy

Conclusion

DeepSeek represents a paradigm shift in the technology race between the US and China. Its success demonstrates that Chinese companies are no longer just imitators of Western technology but serious AI innovators. The model's efficiency also underscores the limitations of US semiconductor export controls.

The Biden administration restricted sales of advanced NVIDIA chips to China with the aim of slowing Chinese AI development. DeepSeek's results suggest that Chinese developers have found techniques to extract far more from the computing power available to them, blunting the effect of these restrictions. This raises concerns in Washington that existing export controls may be insufficient.

For developers and researchers, DeepSeek offers an opportunity to experiment with advanced AI capabilities without massive budgets, and the open nature of its models accelerates innovation globally. Security and censorship concerns remain valid, but the technological achievements are undeniable: DeepSeek proves that efficiency and algorithmic innovation can rival brute computational power.

Try DeepSeek through safe channels: locally through Hugging Face or through AWS Bedrock.

Frequently Asked Questions (FAQ)

How does DeepSeek differ from ChatGPT?

DeepSeek's models are open, with published methodology, while ChatGPT is a closed, proprietary system. DeepSeek reportedly spent $6M on development versus an estimated $500M at OpenAI, and its API is about 27x cheaper ($0.55 per 1M input tokens vs $15 for o1). DeepSeek uses a Mixture-of-Experts architecture for efficiency; OpenAI has not publicly disclosed the architecture of its models.

How much does DeepSeek cost to use?

The open-source DeepSeek models are free to download and deploy locally. Through the API, input tokens cost $0.55 per 1M and output tokens cost $2.19 per 1M. That is about 27x cheaper than OpenAI's o1 ($15 and $60 per 1M tokens, respectively).

Is DeepSeek safe to use?

Using the DeepSeek website and mobile app directly is not recommended because of security vulnerabilities and data storage in China. Safer options are local deployment through Hugging Face, access through AWS Bedrock, or US providers like Perplexity (public data only).

What models does DeepSeek offer?

DeepSeek-V3 (general chat, 671B parameters), DeepSeek-R1 (reasoning, 128K context), DeepSeek Coder (programming, 1.3B-33B versions), DeepSeek-Coder-V2 (338 languages, 128K context), and Janus-Pro-7B (image generation).

Does DeepSeek work with Russian language?

Yes, DeepSeek supports Russian. The models were trained on multilingual data that includes Russian; DeepSeek-V3 alone was trained on 14.8 trillion tokens in many languages. Response quality in Russian may differ slightly from English but remains high for most tasks.

Can DeepSeek be used for commercial projects?

Yes. DeepSeek's models are distributed under open licenses that allow free use, modification, and commercialization (DeepSeek-R1, for example, is released under the MIT license). However, weigh the censorship and data security limitations when planning commercial projects.

Why is DeepSeek so much cheaper than competitors?

DeepSeek uses a Mixture-of-Experts architecture that activates only 37B of its 671B parameters for each token, which saves computational resources. The company also applied algorithmic innovations for efficient training, reportedly including synthetic data from o1. Training on less powerful NVIDIA chips, such as the cut-down H800 sold in China, combined with process optimization, kept costs down.
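A common rule of thumb estimates the savings from activating 37B of 671B parameters: a transformer forward pass costs roughly 2 FLOPs per parameter used, per token. This back-of-the-envelope sketch ignores attention costs and routing overhead. Note also that all 671B weights still have to be held in memory, even though only a fraction is used for each token.

```python
TOTAL_PARAMS = 671e9   # total parameters stated above
ACTIVE_PARAMS = 37e9   # parameters activated per token


def forward_flops_per_token(params: float) -> float:
    """Rule of thumb: about 2 FLOPs (one multiply, one add) per parameter per token."""
    return 2.0 * params


active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
dense_flops = forward_flops_per_token(TOTAL_PARAMS)
moe_flops = forward_flops_per_token(ACTIVE_PARAMS)

print(f"Active share of weights: {active_fraction:.1%}")            # 5.5%
print(f"If all weights were used: {dense_flops / 1e12:.2f} TFLOPs")  # 1.34 TFLOPs
print(f"MoE, active weights only: {moe_flops / 1e9:.0f} GFLOPs")     # 74 GFLOPs
print(f"Compute saving per token: ~{dense_flops / moe_flops:.1f}x")  # ~18.1x
```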

Which countries banned DeepSeek?

Taiwan (January 27, 2025, all government agencies), Texas, USA (January 28, government devices), NASA and the US Navy (internal bans), Italy (January 30, nationwide), and Australia (February 4, government devices). The bans stem from national security and data privacy concerns.

How does DeepSeek compare with Claude and Gemini?

DeepSeek-R1 shows results comparable to Claude 3.5 Sonnet and Gemini 2 in mathematics and programming, while being significantly cheaper to use and fully open. Claude is better suited to long reasoning and Gemini to creative and visual tasks. DeepSeek is the strongest choice for logic, mathematics, and code.

Will there be DeepSeek-V4 or R2?

DeepSeek hasn't publicly announced its next versions. Given its rapid release pace (V2 in May 2024, V3 in December 2024, R1 in January 2025), new models can be expected in 2025. The company will likely keep improving efficiency and expanding capabilities while maintaining its open approach.
