
Radzivon Alkhovik

Jun 19, 2025

OpenAI released GPT-4.1 on April 14, 2025. It is a developer-focused API model that resolves 54.6% of the real GitHub issues in SWE-bench Verified and costs about 26% less than GPT-4o for median queries. This isn't an incremental update; it's built specifically for software engineering.

GPT-4.1 outperforms previous models across coding benchmarks. Its headline feature is a 1 million token context window, large enough to hold more than eight copies of the entire React codebase in a single request.

Key Benefits: 54.6% on real-world coding tasks (SWE-bench Verified), a 1 million token context for large codebase analysis, about 26% lower cost than GPT-4o for median queries, and 38.3% on the MultiChallenge instruction-following benchmark.

What is GPT-4.1? OpenAI's Most Advanced Developer API

GPT-4.1 puts software engineering first. While previous models treated coding as one feature among many, GPT-4.1 was built specifically for developers, delivering breakthrough performance in coding, instruction following, and long-context understanding.

OpenAI released GPT-4.1 in three sizes (GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano); this article focuses on the two larger models:

GPT-4.1 (Full Model): Designed for complex software engineering, architectural planning, and comprehensive code analysis. Best for enterprise applications requiring maximum intelligence.

GPT-4.1 Mini: Matches or beats GPT-4o on many benchmarks while cutting latency nearly in half and cost by 83%. A good fit for high-volume applications that need speed and cost-efficiency.

GPT-4.1's focus on real-world developer needs shows. OpenAI worked with companies like Windsurf, Qodo, and Thomson Reuters to ensure excellence at daily development tasks, not just academic benchmarks. The model has a refreshed knowledge cutoff of June 2024, so it knows frameworks and practices up to that date.

GPT-4.1 vs GPT-4o: Revolutionary Improvements in Coding Performance

The evolution from GPT-4o to GPT-4.1 represents one of the most significant model improvements in OpenAI's history, particularly for software engineering applications. These aren't marginal gains—they're transformative leaps that fundamentally change what's possible with AI-assisted development.

The performance gains from GPT-4o to GPT-4.1 are substantial across every metric that matters to developers:

| Feature | GPT-4.1 | GPT-4o | Improvement |
|---|---|---|---|
| SWE-bench Verified | 54.6% | 33.2% | +21.4 pp |
| MultiChallenge (Instruction Following) | 38.3% | 27.8% | +10.5 pp |
| Context Window | 1M tokens | 128K tokens | 8x larger |
| Aider Polyglot (Diff Format) | 52.9% | 18.2% | +34.7 pp |
| Video-MME (Long Context) | 72.0% | 65.3% | +6.7 pp |
| API Pricing | $2.00/1M input | $2.50/1M input | 20% cheaper input; ~26% cheaper for median queries |
| Output Token Limit | 32,768 | 16,384 | 2x larger |

pp = percentage points.

These improvements aren't just numbers on a benchmark—they translate directly into practical benefits that development teams experience daily. The combination of better performance and lower costs represents a rare achievement in the AI industry, where improvements typically come with increased expenses.
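The benchmark deltas above are absolute differences in percentage points, which are easy to confuse with relative gains. A short Python sketch using only the scores from the table shows both views side by side:

```python
# Scores (in %) copied from the GPT-4.1 vs GPT-4o table above: (GPT-4.1, GPT-4o).
SCORES = {
    "SWE-bench Verified": (54.6, 33.2),
    "MultiChallenge": (38.3, 27.8),
    "Aider Polyglot (diff)": (52.9, 18.2),
    "Video-MME": (72.0, 65.3),
}


def compare(new: float, old: float) -> tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new - old
    relative = absolute / old * 100
    return round(absolute, 1), round(relative, 1)


for name, (gpt41, gpt4o) in SCORES.items():
    pp, rel = compare(gpt41, gpt4o)
    print(f"{name}: +{pp} pp absolute, +{rel}% relative")
```

For SWE-bench Verified, the +21.4-point gain is a relative improvement of about 64%; on Aider Polyglot (diff), GPT-4.1's score is roughly 2.9x GPT-4o's.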

Coding Performance Breakthrough

The most dramatic improvement comes in real-world software engineering. On SWE-bench Verified—a benchmark that tests a model's ability to solve actual GitHub issues—GPT-4.1 achieves 54.6% accuracy compared to GPT-4o's 33.2%. This isn't just a numbers game; it represents the difference between an AI that can occasionally help with coding and one that can genuinely function as a capable development partner.

Instruction Following Revolution

GPT-4.1's 38.3% score on MultiChallenge (vs 27.8% for GPT-4o) translates to dramatically more reliable behavior when following complex, multi-step instructions. This improvement is crucial for agentic applications where the AI needs to maintain context across long conversations and execute precise workflows.

Context Window Expansion

The jump from 128K to 1 million tokens fundamentally changes what's possible. Developers can now feed entire codebases, comprehensive documentation, or lengthy technical specifications into a single API call, enabling analysis and modifications that were previously impossible.

GPT-4.1 Key Features

GPT-4.1's features are optimized specifically for software development, making the difference between an AI assistant and an AI pair programmer.

1 Million Token Context Window

The expansion to 1 million tokens qualitatively changes how developers work with AI. Previous limitations forced breaking complex tasks into chunks, losing valuable context between codebase parts.

This massive context enables previously impossible workflows:

Codebase Analysis: Process entire project structures to identify architectural patterns and technical debt

Cross-File Refactoring: Make changes across multiple files while maintaining consistency

Documentation Generation: Analyze all related code files, tests, and docs simultaneously

Bug Investigation: Trace issues across modules to identify root causes

To put this in perspective: 1 million tokens holds more than eight copies of the entire React codebase, roughly 750,000 words of documentation, or entire API specifications in one request.
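Before sending a whole project, it helps to estimate whether it plausibly fits. The sketch below assumes the common rule of thumb of roughly 4 characters per token; for exact counts, use a real tokenizer such as OpenAI's open-source tiktoken library. The file extensions, rounded window size, and output reserve are illustrative choices, not official values.

```python
from pathlib import Path

CONTEXT_WINDOW = 1_000_000  # GPT-4.1 context size, rounded (tokens)
CHARS_PER_TOKEN = 4         # rough heuristic, not an exact tokenizer
SOURCE_EXTS = {".py", ".js", ".ts", ".tsx", ".md", ".json"}  # illustrative


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def codebase_tokens(root: str) -> int:
    """Sum estimated tokens over matching source files under root."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in SOURCE_EXTS:
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(root: str, reserve_for_output: int = 32_768) -> bool:
    """True if the estimated prompt still leaves room for a full-length reply."""
    return codebase_tokens(root) + reserve_for_output <= CONTEXT_WINDOW
```

For example, `fits_in_context("./my-project")` (a hypothetical path) returns True when the estimate leaves room for a maximum-length 32,768-token reply.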

Enhanced Instruction Following

GPT-4.1 scores 38.3% on MultiChallenge, up 10.5 percentage points from GPT-4o's 27.8%. MultiChallenge is a demanding multi-turn benchmark, so absolute scores stay well below 100%, but the gain means GPT-4.1 is noticeably more likely to deliver exactly what you specify, when you specify it, in the format you need.

The model excels across critical categories: format following (XML, YAML, JSON), negative instructions (avoiding specified behaviors), ordered instructions (multi-step processes), content requirements (comprehensive documentation), ranking and organization, and uncertainty handling (admitting when information is unavailable rather than hallucinating).
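Since no model follows instructions perfectly, production pipelines often verify outputs programmatically. A hypothetical checker for three of the categories above (format following, negative instructions, ordered instructions); the function names are illustrative:

```python
import json


def is_valid_json_object(output: str) -> bool:
    """Format following: the reply must parse as a JSON object."""
    try:
        return isinstance(json.loads(output), dict)
    except json.JSONDecodeError:
        return False


def avoids_phrases(output: str, forbidden: list[str]) -> bool:
    """Negative instructions: none of the forbidden phrases may appear."""
    lowered = output.lower()
    return not any(phrase.lower() in lowered for phrase in forbidden)


def sections_in_order(output: str, headings: list[str]) -> bool:
    """Ordered instructions: every heading appears, in the given order."""
    position = -1
    for heading in headings:
        index = output.find(heading, position + 1)
        if index == -1:
            return False
        position = index
    return True
```

A failed check can trigger a retry with the violated instruction restated, rather than passing a malformed reply downstream.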

Advanced Diff Format Support

GPT-4.1 nearly triples GPT-4o's score on diff-format tasks (52.9% vs 18.2% on Aider Polyglot), making it more reliable for code reviews, patch generation, and version control integration. It is also cheaper to run in this mode, because the model outputs only the changed lines instead of rewriting whole files.
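The output savings from diff format are easy to see with Python's standard difflib module. This illustration uses made-up file contents and a standard unified diff, not the exact patch format any particular tool expects:

```python
import difflib

# A hypothetical 200-line file with a one-line fix at line 120.
original = [f"line {i}\n" for i in range(200)]
patched = original.copy()
patched[120] = "line 120 (fixed)\n"

# Whole-file format: the model would re-emit every line.
whole_file = "".join(patched)

# Diff format: only the changed hunk plus a few lines of context.
diff = "".join(
    difflib.unified_diff(original, patched, fromfile="a/app.py", tofile="b/app.py")
)

print(f"whole file: {len(whole_file)} chars, diff: {len(diff)} chars")
print(diff)
```

Output tokens cost four times as much as input tokens for GPT-4.1, so shrinking the reply this way matters more than trimming the prompt.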

Reduced Extraneous Edits

On OpenAI's internal evaluation, GPT-4.1 makes extraneous code edits just 2% of the time, down from 9% for GPT-4o. This means cleaner pull requests, safer refactoring, and better code reviews where reviewers focus on intentional changes rather than filtering noise.

These improvements transform GPT-4.1 into a reliable development partner that respects existing codebase structure.
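Teams that want to track this in their own review pipeline could flag changed lines that fall outside the region a task was meant to touch. A hypothetical sketch with difflib; how you choose the "allowed" line numbers for a task is an assumption left to your workflow:

```python
import difflib


def changed_line_numbers(before: list[str], after: list[str]) -> set[int]:
    """0-based line numbers in `before` that were replaced, deleted, or inserted at."""
    matcher = difflib.SequenceMatcher(a=before, b=after, autojunk=False)
    changed: set[int] = set()
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            changed.update(range(i1, i2))
        elif tag == "insert":
            changed.add(i1)  # count the insertion point as a touched line
    return changed


def extraneous_edits(before: list[str], after: list[str], allowed: set[int]) -> set[int]:
    """Lines the model touched that were not part of the requested change."""
    return changed_line_numbers(before, after) - allowed
```

An empty result means the edit stayed inside the requested scope; anything else is a candidate for reviewer attention.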

GPT-4.1 API Pricing: 26% Cost Reduction with Superior Performance

In an industry where better performance typically comes with higher costs, GPT-4.1 breaks the mold by delivering both improved capabilities and reduced expenses. This pricing strategy reflects OpenAI's commitment to making advanced AI accessible to development teams of all sizes.

OpenAI has delivered the rare combination of better performance and lower costs. The GPT-4.1 pricing structure offers significant savings while providing superior capabilities:

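| Model | Input Tokens | Cached Input | Output Tokens | Blended Pricing* |
|---|---|---|---|---|
| GPT-4.1 | $2.00/1M | $0.50/1M | $8.00/1M | $1.84/1M |
| GPT-4.1 Mini | $0.40/1M | $0.10/1M | $1.60/1M | $0.42/1M |

*Blended pricing is OpenAI's estimate based on typical input/output and cache ratios.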

The pricing model is designed to reward efficient usage patterns common in development workflows, where teams often reuse system prompts, documentation, and boilerplate code across multiple requests.

Cost Optimization Features

GPT-4.1 introduces several intelligent cost-saving features that compound over time, making it increasingly economical for teams with consistent usage patterns.

Enhanced Prompt Caching: The prompt caching discount increases to 75% (up from 50%), making repeated context processing extremely cost-effective. Perfect for applications that reuse boilerplate code, documentation, or system prompts.

Batch API Savings: Additional 50% discount when using the Batch API for non-time-sensitive processing, ideal for code analysis, documentation generation, or large-scale refactoring tasks.

No Long Context Premium: Unlike some competitors, GPT-4.1 charges standard per-token rates regardless of context length. Process 1 million tokens at the same rate as 1,000 tokens.

These optimizations mean development teams can scale their AI usage with costs that grow in proportion to token volume, making GPT-4.1 viable for everything from individual projects to enterprise-wide deployments.
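The per-request arithmetic behind these discounts can be sketched with the list prices from the pricing table. The function is illustrative, and applying the 50% Batch API discount to the whole bill is a simplifying assumption; confirm current rates and how discounts combine on OpenAI's pricing page.

```python
# USD per 1M tokens: (input, cached input, output), from the pricing table.
PRICES = {
    "gpt-4.1": (2.00, 0.50, 8.00),
    "gpt-4.1-mini": (0.40, 0.10, 1.60),
}
BATCH_DISCOUNT = 0.50  # assumption: applied uniformly to the request total


def request_cost(model: str, input_tokens: int, cached_tokens: int,
                 output_tokens: int, batch: bool = False) -> float:
    """Estimated USD cost of one request.

    `cached_tokens` is the portion of `input_tokens` served from the prompt cache.
    """
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price_in, price_cached, price_out = PRICES[model]
    uncached = input_tokens - cached_tokens
    cost = (uncached * price_in + cached_tokens * price_cached
            + output_tokens * price_out) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost
```

For a 100,000-token prompt with 80,000 cached tokens and a 2,000-token reply, GPT-4.1 comes to about $0.096, versus about $0.216 with no cache hits.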

ROI Calculations for Development Teams

The true value of GPT-4.1 extends far beyond direct API costs to include productivity gains, reduced debugging time, and faster feature delivery cycles.

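For a typical 10-developer team using GPT-4.1 for daily coding assistance (illustrative estimates, not measured results):

Previous Cost (GPT-4o): ~$400/month

New Cost (GPT-4.1): ~$300/month

Benchmark Gain: +21.4 percentage points on SWE-bench Verified (a measure of issue resolution, not code-completion accuracy)

Time Savings: Estimated 15-20% reduction in development time

Net ROI: Estimated at 300%+ within the first month, depending heavily on the assumed time savings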

These calculations don't include the additional benefits of reduced technical debt, fewer bugs reaching production, and improved code quality that compound over time.
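To plug in your own team's numbers, a minimal ROI sketch follows. Apart from the ~$300/month API estimate and the 15% time-savings figure quoted above, every input is a hypothetical placeholder, not data from this article.

```python
def monthly_roi(api_cost: float, hours_saved: float, hourly_rate: float) -> float:
    """ROI in percent: (value of developer time saved - API cost) / API cost."""
    if api_cost <= 0:
        raise ValueError("api_cost must be positive")
    value_of_time = hours_saved * hourly_rate
    return (value_of_time - api_cost) / api_cost * 100


# Hypothetical inputs: replace every value with your own measurements.
TEAM_SIZE = 10
HOURS_PER_DEV_PER_MONTH = 160
TIME_SAVED_FRACTION = 0.15   # low end of the 15-20% estimate above
HOURLY_RATE_USD = 75.0       # hypothetical loaded cost per developer-hour
API_COST_USD = 300.0         # the ~$300/month GPT-4.1 estimate above

hours_saved = TEAM_SIZE * HOURS_PER_DEV_PER_MONTH * TIME_SAVED_FRACTION
roi = monthly_roi(API_COST_USD, hours_saved, HOURLY_RATE_USD)
print(f"Hours saved per month: {hours_saved:.0f}, ROI: {roi:.0f}%")
```

With these placeholder inputs the ROI lands in the thousands of percent, far above the 300% quoted above, which mainly shows that any ROI estimate is driven by the assumed time savings; measure that figure before relying on the result.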

GPT-4.1 Benchmarks: 54.6% SWE-bench Success Rate

GPT-4.1's performance across industry-standard evaluations demonstrates clear leadership in developer-focused capabilities. These aren't abstract academic scores—they measure exactly the skills that matter in real software development.

GPT-4.1 excels where it counts most for developers. Its 54.6% success rate on SWE-bench Verified means it resolves more than half of the benchmark's human-validated GitHub issues, a clear step up for practical coding assistance. Its 52.9% on Aider Polyglot (diff format), which spans multiple programming languages, points to reliable multi-language editing, useful for clean code reviews and polyglot projects.

Instruction following separates useful AI from frustrating tools. MultiChallenge tests complex multi-turn conversations, and GPT-4.1's 38.3% there is a good signal for automated workflows. Its 87.4% on IFEval reflects compliance with explicit formatting and content requirements, which matters when generated code must meet style guides.

Long-context understanding enables entirely new categories of development assistance. GPT-4.1 scores 72.0% on Video-MME (long videos without subtitles) and 57.2% on OpenAI-MRCR, which tests whether a model can pick out the right one of several similar requests buried in a long conversation. Together these suggest it can stay consistent across extended sessions and large projects.

| Benchmark | GPT-4.1 | GPT-4o | o3-mini (high) | Claude 3.5 | What It Measures |
|---|---|---|---|---|---|
| SWE-bench Verified | 54.6% | 33.2% | 49.3% | ~45%* | Real-world GitHub issues |
| MultiChallenge | 38.3% | 27.8% | 39.9% | ~35%* | Complex multi-turn instruction following |
| Aider Polyglot (diff) | 52.9% | 18.2% | 60.4% | ~40%* | Multi-language code editing |
| IFEval | 87.4% | 81.0% | 93.9% | ~85%* | Format compliance |
| Video-MME | 72.0% | 65.3% | - | ~60%* | Long-video understanding |
| OpenAI-MRCR | 57.2% | 31.9% | - | ~45%* | Multi-round coreference in long context |

*Approximate estimates, not official vendor-reported results.

No single model dominates every category: o3-mini (high) edges out GPT-4.1 on MultiChallenge, Aider Polyglot, and IFEval. GPT-4.1's lead on real-world software engineering (SWE-bench Verified), combined with its speed and long context, still makes it a strong choice for production development workflows.

How to Access GPT-4.1 API: Developer Integration Guide

Getting started with GPT-4.1 requires understanding both the technical integration steps and the strategic considerations for maximizing its effectiveness in your development workflow. This isn't just about making API calls—it's about transforming how your team builds software.

At launch, GPT-4.1 was available only through OpenAI's API, under the model IDs gpt-4.1, gpt-4.1-mini, and gpt-4.1-nano.

API Integration Setup

The integration process is straightforward, but understanding the nuances of each step will help you avoid common pitfalls and optimize performance from day one.

Step 1: API Key Configuration
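A minimal sketch of this step, assuming the official `openai` Python package (v1 or later) and the `OPENAI_API_KEY` environment variable that the SDK reads by default. The helper names are hypothetical; only the commented usage touches the real SDK.

```python
import os

MODEL = "gpt-4.1"  # or "gpt-4.1-mini" / "gpt-4.1-nano" for cheaper, faster calls


def get_api_key() -> str:
    """Read the key from the environment instead of hard-coding it in source."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first")
    return key


def build_chat_request(system: str, user: str, model: str = MODEL) -> dict:
    """Keyword arguments for client.chat.completions.create(...)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }


# Usage with the official SDK (pip install openai; needs network access):
#   from openai import OpenAI
#   client = OpenAI(api_key=get_api_key())  # OpenAI() alone also reads OPENAI_API_KEY
#   response = client.chat.completions.create(
#       **build_chat_request("You are a careful code reviewer.", "Review this diff: ...")
#   )
#   print(response.choices[0].message.content)
```

Keeping request construction in a plain function makes it easy to unit-test prompts without calling the API.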

With the key in place, a basic request is a single API call; the real power comes from how you structure your prompts and manage context for optimal results.

Rate Limits and Scaling

Understanding rate limits is crucial for planning your application architecture and ensuring smooth scaling as your usage grows.

| Tier | RPM (requests/min) | TPM (tokens/min) | Best For |
|---|---|---|---|
| Tier 1 | 1,000 | 125,000 | Individual developers, prototyping |
| Tier 3 | 5,000 | 1,000,000 | Small teams, production apps |
| Tier 5 | 10,000 | 2,000,000 | Enterprise teams, high-volume applications |

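Tiers are assigned automatically based on account spend and history, so most development teams start at Tier 1 for experimentation and reach Tier 3 as production usage grows. Enterprise teams requiring high-volume processing should plan for Tier 5 to avoid rate limiting during peak usage periods. Limits vary by model and change over time, so confirm the current values on your organization's limits page.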
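A client-side limiter helps a high-volume app stay under its RPM and TPM limits instead of relying only on HTTP 429 retries. A minimal token-bucket sketch; the class name and continuous-refill policy are illustrative assumptions, and the clock is injectable so it can be tested without sleeping:

```python
import time
from typing import Callable


class RateLimiter:
    """Two token buckets: one for requests/minute, one for tokens/minute.

    Both refill continuously; this complements, not replaces, 429 handling.
    """

    def __init__(self, rpm: int, tpm: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rpm, self.tpm = rpm, tpm
        self.clock = clock
        self.request_budget = float(rpm)
        self.token_budget = float(tpm)
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        self.request_budget = min(self.rpm, self.request_budget + elapsed * self.rpm / 60)
        self.token_budget = min(self.tpm, self.token_budget + elapsed * self.tpm / 60)

    def try_acquire(self, tokens: int) -> bool:
        """Reserve capacity for one request of `tokens` tokens if available."""
        self._refill()
        if self.request_budget >= 1 and self.token_budget >= tokens:
            self.request_budget -= 1
            self.token_budget -= tokens
            return True
        return False
```

Call `try_acquire` with an estimate of each request's total tokens (prompt plus expected output) before the API call, wait briefly when it returns False, and keep retry-with-backoff for 429 responses as a safety net.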

Optimization Best Practices

Maximizing GPT-4.1's effectiveness requires understanding how to structure requests for both performance and cost efficiency.

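Use Prompt Caching: For applications that reuse system prompts or large context, put the stable content (system prompt, shared documentation) at the start of every request and the variable part at the end. Caching matches on the exact prompt prefix and applies to prompts of 1,024 tokens or more.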
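A minimal sketch of cache-friendly message ordering. The constants and helper names are hypothetical; the point is that the leading messages stay byte-for-byte identical across calls, so only the final message differs.

```python
# Hypothetical shared context. In practice this would be long (1,024+ tokens)
# and identical on every call so the prefix can be served from the cache.
SYSTEM_PROMPT = "You are a senior reviewer for our Python services."
SHARED_DOCS = "<style guide and API reference text goes here>"


def build_messages(task: str) -> list[dict]:
    """Stable content first, per-request content last."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": SHARED_DOCS},  # unchanged between calls
        {"role": "user", "content": task},         # the only varying part
    ]


def shared_prefix_length(a: list[dict], b: list[dict]) -> int:
    """Number of leading messages two requests have in common."""
    count = 0
    for left, right in zip(a, b):
        if left != right:
            break
        count += 1
    return count
```

Putting a timestamp or request ID in the system prompt would silently break the shared prefix, so keep such values in the final message.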

Cached input is billed at a 75% discount and Batch API requests at 50% off, so applications with large, stable prompts and predictable usage patterns can cut costs substantially, making GPT-4.1 economical even for high-volume production systems.

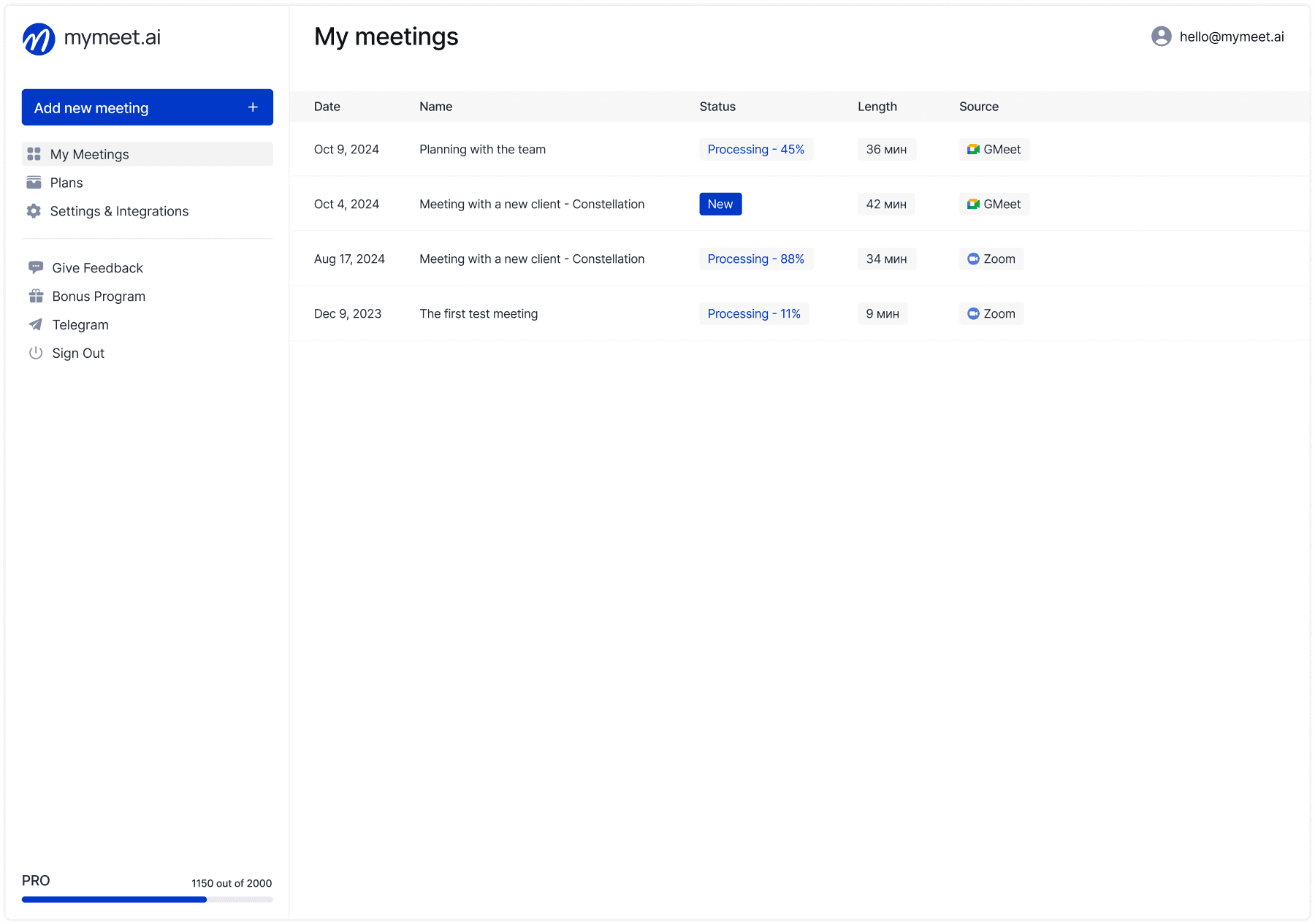

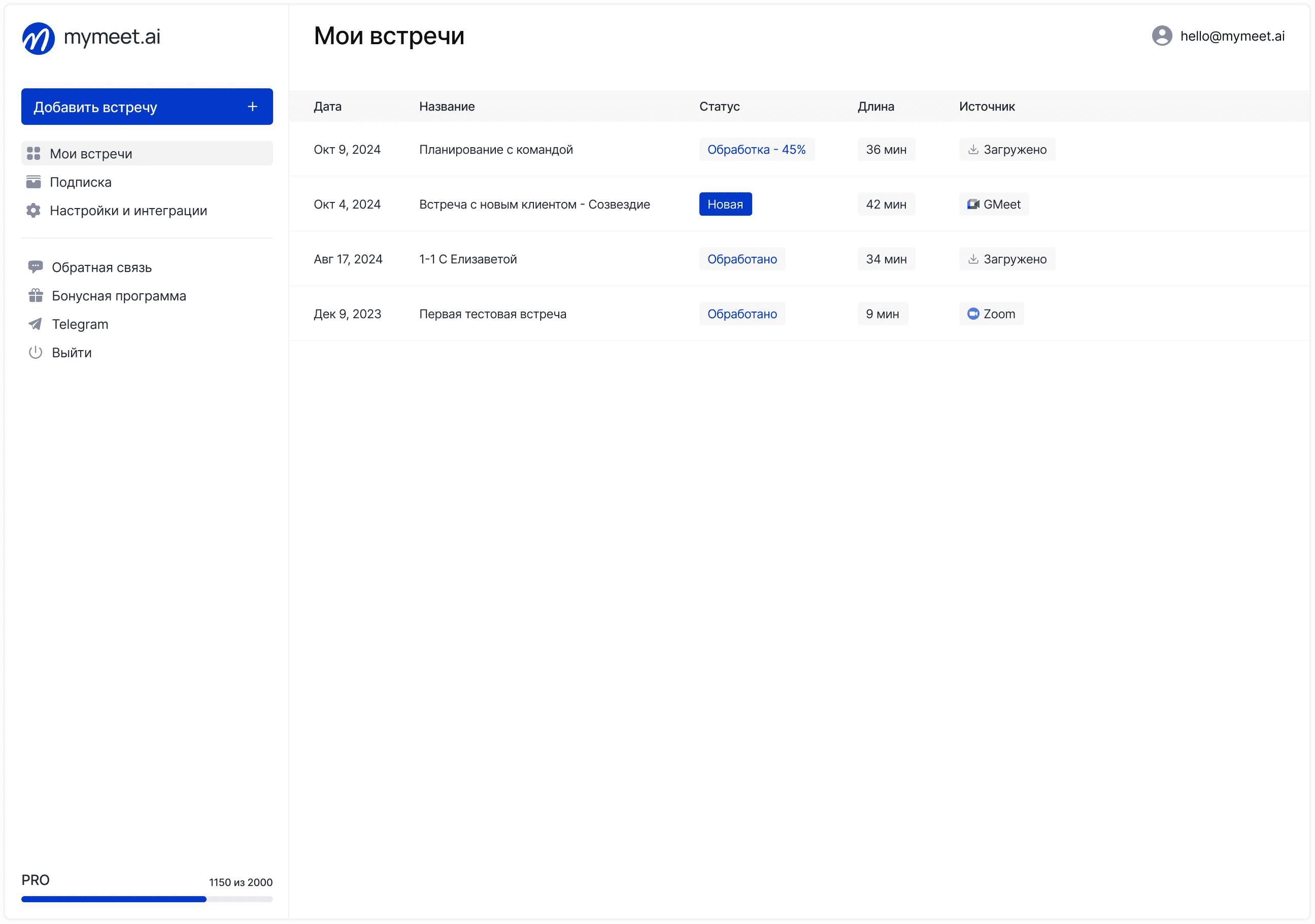

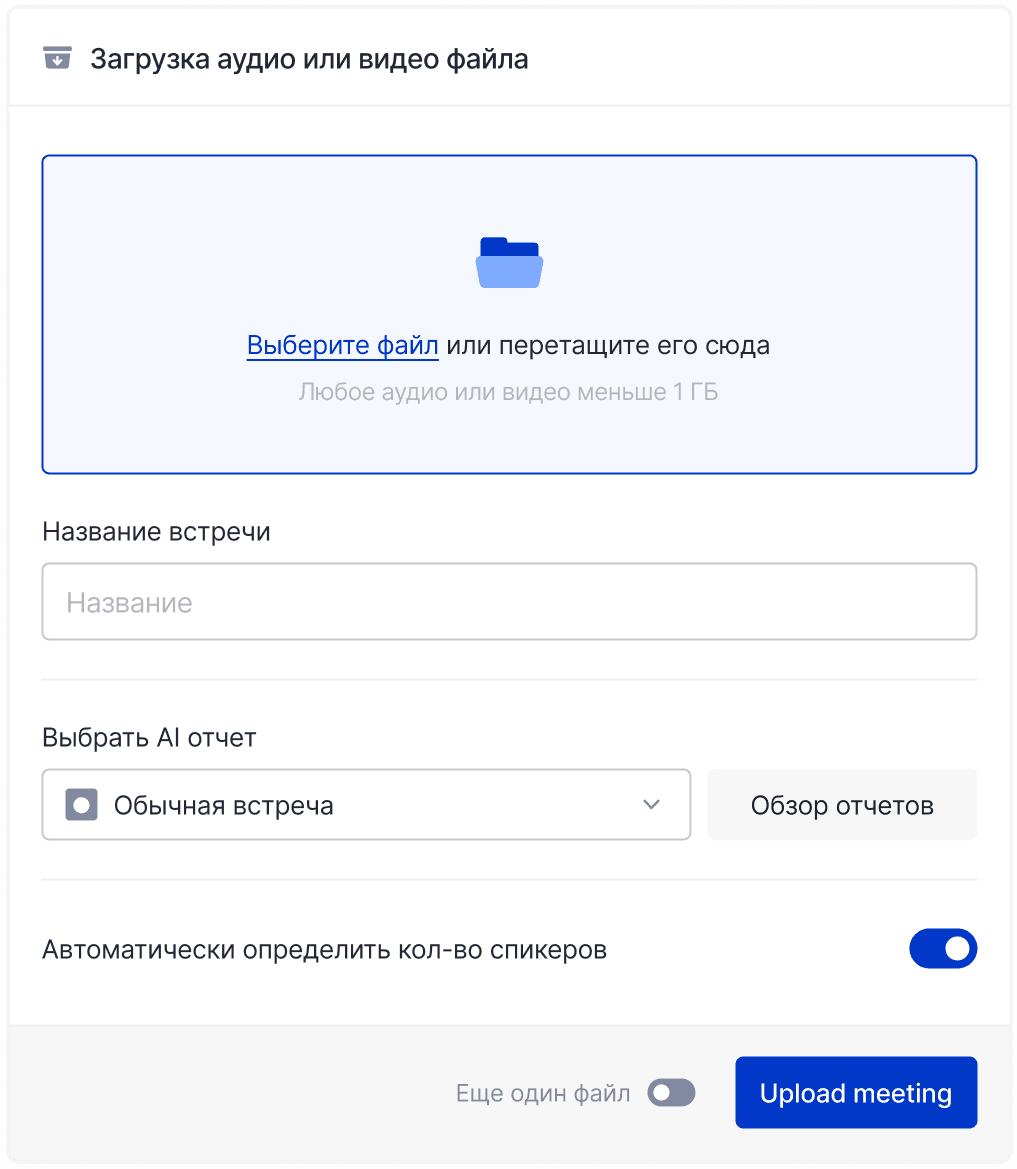

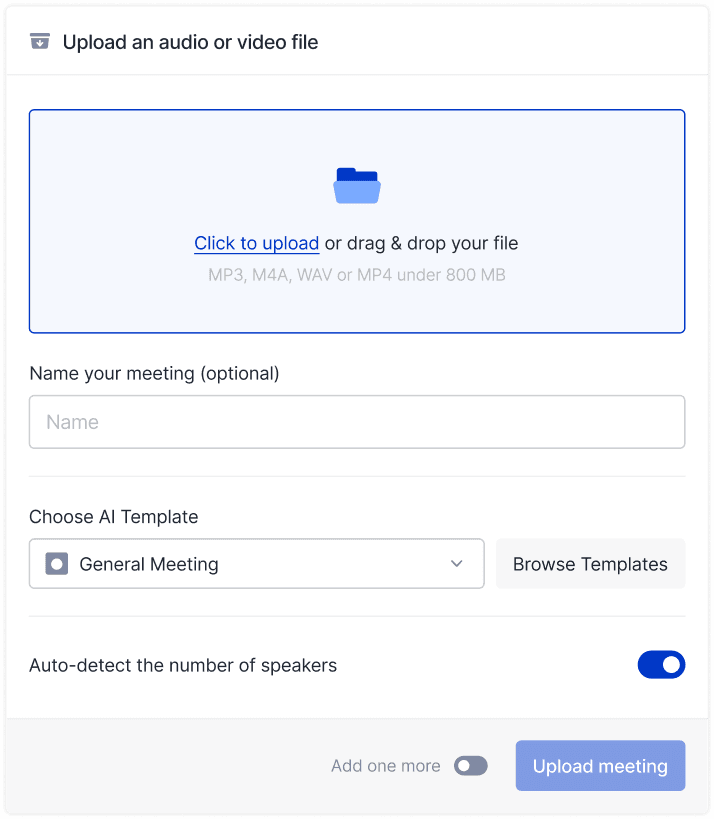

Supercharging GPT-4.1 with mymeet.ai for Development Teams

While GPT-4.1 excels at analyzing code and following complex instructions, it can't capture the context and decisions that emerge during your team's daily meetings. This is where mymeet.ai creates powerful synergy with GPT-4.1's capabilities.

What is mymeet.ai?

mymeet.ai is an AI meeting assistant that automatically joins video conferences across Zoom, Google Meet, and Microsoft Teams to record meetings, create transcripts, and extract action items. Unlike manual processes, mymeet.ai captures meeting content without human intervention across 70+ languages with enterprise-grade security.

Key benefits for development teams:

Automatically joins and records meetings without manual intervention

Creates accurate transcripts with speaker identification

Extracts action items and deadlines automatically

Organizes content into searchable, shareable formats

These capabilities become far more useful when combined with GPT-4.1's advanced analysis.

The Perfect Development Workflow

The integration creates a seamless workflow that transforms verbal discussions into actionable development artifacts:

Meeting Capture: mymeet.ai automatically joins technical meetings, capturing discussions about requirements, architecture decisions, and code reviews.

Enhanced Analysis: Export transcripts to GPT-4.1 for deep analysis using its 1 million token context window.

Actionable Outputs: Generate precise technical specifications, code snippets, and architectural documentation.

This workflow eliminates the gap between what gets discussed in meetings and what actually gets implemented.
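The analysis step can be sketched as a Chat Completions request in JSON mode. This assumes a plain-text "Speaker: text" transcript (mymeet.ai's actual export format is not described here) and uses hypothetical helper names; `response_format={"type": "json_object"}` is the Chat Completions JSON mode, which requires the word "JSON" to appear in the messages.

```python
import json

ANALYSIS_INSTRUCTIONS = (
    "You analyze engineering meeting transcripts. Reply with a JSON object "
    'of the form {"action_items": [{"owner": str, "task": str, "due": str or null}]}. '
    "Only include items explicitly agreed in the meeting."
)


def build_transcript_request(transcript: str, model: str = "gpt-4.1") -> dict:
    """Keyword arguments for client.chat.completions.create(...)."""
    return {
        "model": model,
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            {"role": "system", "content": ANALYSIS_INSTRUCTIONS},
            {"role": "user", "content": transcript},
        ],
    }


def parse_action_items(reply: str) -> list[dict]:
    """Parse the model's JSON reply, keeping only items with an owner and a task."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        return []
    items = data.get("action_items", [])
    return [i for i in items if isinstance(i, dict) and i.get("owner") and i.get("task")]
```

Filtering incomplete items in code keeps a partially malformed reply from producing ownerless tasks in your tracker.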

Success Metrics

Teams using this integrated approach report:

75% reduction in post-meeting documentation time

60% fewer follow-up clarification messages

40% faster sprint delivery cycles

90% better requirement coverage

These improvements compound over time, leading to fewer bugs and more predictable delivery schedules.

Security and Implementation

Both platforms maintain enterprise-grade security with TLS 1.2+ encryption, GDPR compliance, and automatic data retention policies. Setup involves connecting calendar integration, configuring GPT-4.1 templates for different meeting types, and establishing automated workflows.

This integration transforms routine development meetings into highly productive, well-documented strategic sessions that drive faster software delivery.

GPT-4.1 Success Stories: Real Developer Use Cases

Leading development teams worldwide are already experiencing transformative results with GPT-4.1, proving its value far beyond academic benchmarks.

Windsurf found that GPT-4.1 scored 60% higher than GPT-4o on its internal coding benchmark, which correlates strongly with how often code changes are accepted on first review. Their engineering team reports that GPT-4.1 "understands context better and makes more thoughtful changes" compared to previous models.

Qodo tested GPT-4.1 against competitors on 200 real-world pull requests, with 55% of reviewers preferring its code review suggestions. The model demonstrated superior precision in knowing when not to make suggestions while providing comprehensive analysis when needed.

Blue J saw 53% better accuracy on complex tax scenarios compared to GPT-4o. This improvement translates directly to faster, more reliable client research and reduced manual verification overhead in their legal AI assistant.

Hex experienced nearly 2x improvement on challenging SQL evaluation sets. GPT-4.1's enhanced ability to select correct tables from complex database schemas—a critical upstream decision—dramatically reduced manual debugging requirements.

Thomson Reuters integrated GPT-4.1 with their CoCounsel legal assistant, achieving 17% better multi-document review accuracy. The model excels at maintaining context across sources and identifying nuanced relationships between legal documents.

Carlyle used GPT-4.1's long-context capabilities for financial document analysis, seeing 50% better performance on data extraction from complex formats. According to Carlyle, GPT-4.1 was the first model it tested to overcome needle-in-a-haystack retrieval failures and lost-in-the-middle errors.

These success stories share common patterns: reduced manual work, higher first-pass success rates, better context understanding through the 1 million token window, and cost-effective scaling. Development teams consistently report that GPT-4.1 delivers better results while reducing both time and API costs.

GPT-4.1 vs Competition: Beating o3-mini and Claude in Coding

GPT-4.1 outperforms major competitors in developer-focused tasks. Here's how it stacks up against alternatives across metrics that actually matter for development teams.

| Feature | GPT-4.1 | o3-mini | Claude 3.5 | Gemini | Relevant For |
|---|---|---|---|---|---|
| SWE-bench Verified | 54.6% | 49.3% | ~45%* | ~35%* | Real-world coding |
| Context Window | 1M tokens | 200K | 200K | 1M-2M (model-dependent) | Large codebase analysis |
| Cost Efficiency | $1.84/1M blended | $1.10/$4.40 per 1M in/out, plus reasoning tokens | Higher | Variable | Production scaling |
| Response Speed | Fast | Slow | Fast | Fast | Interactive development |
| Instruction Following (MultiChallenge) | 38.3% | 39.9% | ~35%* | ~30%* | Agentic applications |
| Math Reasoning (AIME 2024) | 48.1% | 87.3% | ~60%* | ~65%* | Academic research |
| Creative Writing | Good | Poor | Excellent | Good | Content generation |
| Multimodal | Text/Image | Text only | Text/Image | Full | Media processing |

*Approximate estimates, not official vendor-reported results.

GPT-4.1 wins where it counts for software development: solving actual GitHub issues, processing entire codebases, and doing it cost-effectively. Competitors have strengths—o3-mini excels at complex math, Claude handles creative tasks better, Gemini offers broader multimodal support—but GPT-4.1 delivers the best overall package for coding work.

Use GPT-4.1 for coding assistance, codebase analysis, automation workflows, production scaling, and interactive development where speed matters.

Use alternatives for mathematical problem-solving (o3-mini), creative writing (Claude), Google Workspace integration (Gemini), or extensive multimedia processing.

GPT-4.1's developer-focused design, proven performance with real companies, cost advantages, and mature tooling ecosystem create a strong competitive position. While others will likely improve their coding capabilities, GPT-4.1's head start in software engineering performance gives it significant momentum.

For teams building software, GPT-4.1 offers the strongest combination of performance, cost, and reliability currently available.

Future of AI-Powered Development

GPT-4.1 marks a shift toward AI purpose-built for software engineering, reshaping how we build software entirely.

Autonomous Development is already emerging. AI systems can analyze bug reports, implement fixes, write tests, and submit pull requests independently. With 1 million token context, AI monitors entire codebases for technical debt and coordinates updates across system architectures. Companies like Windsurf and Qodo are building these autonomous tools today.

Democratized Engineering means non-programmers can describe complex applications and receive production-ready code. Domain experts in finance, healthcare, and law create specialized tools without deep programming knowledge. Ideas become working applications in hours instead of weeks.

Enterprise Transformation is underway as organizations restructure around AI capabilities. Tasks requiring multiple developers now need smaller teams. Complex applications that took months are completed in weeks. AI-generated code often follows best practices more consistently, reducing bugs and technical debt.

Developer Roles Evolve as AI handles routine coding. Developers focus on system design, architectural decisions, and strategic planning. New essential skills include prompt engineering, AI tool integration, and human-AI workflow design. Quality assurance shifts to ensuring AI-generated code meets standards.

New Challenges include maintaining code quality as AI generates more code, managing unexpected dependencies, balancing AI assistance with skill development, and ensuring AI doesn't introduce security vulnerabilities or bias.

GPT-4.1 represents the beginning of this transformation—changes ahead will be as significant as the shift from assembly to high-level programming languages.

Conclusion

GPT-4.1 transforms coding from assisted development to true AI collaboration. With 54.6% success on real-world engineering tasks (SWE-bench Verified), a 1 million token context, and about 26% lower cost than GPT-4o for median queries, it's fundamentally different from previous models.

Companies like Windsurf, Qodo, and Thomson Reuters prove GPT-4.1 delivers measurable results: faster development cycles, higher code quality, and significant cost savings. These aren't theoretical benefits—they're happening now.

Combined with tools like mymeet.ai, GPT-4.1 creates complete workflows that bridge meeting discussions and implemented code. This represents the future: AI that understands context, follows instructions reliably, and delivers production-ready results.

For development teams, GPT-4.1's superior performance, lower costs, and proven success make it the clear choice. The question isn't whether AI will transform software development—it's whether your team will gain competitive advantage through early adoption.

The future of software development is here, powered by GPT-4.1.

FAQ

What is GPT-4.1 and how does it differ from GPT-4o?

GPT-4.1 is OpenAI's latest API model specifically optimized for software development. It delivers 54.6% accuracy on SWE-bench Verified (vs 33.2% for GPT-4o), processes 1 million tokens of context (8x larger), and costs about 26% less for median queries while providing superior coding and instruction-following capabilities.

When was GPT-4.1 released?

OpenAI released GPT-4.1 on April 14, 2025, initially only through its API platform.

What are the GPT-4.1 variants?

GPT-4.1 comes in three versions: GPT-4.1 (the full model for complex tasks), GPT-4.1 Mini (83% cheaper than GPT-4o with comparable performance), and GPT-4.1 nano (the smallest and cheapest). All support 1 million token context windows.

How much does GPT-4.1 cost?

GPT-4.1 pricing starts at $2.00 per million input tokens and $8.00 per million output tokens, with significant discounts for cached content (75% off) and batch processing (50% off). GPT-4.1 Mini costs $0.40/$1.60 per million tokens.

Can I use GPT-4.1 in ChatGPT?

At launch, GPT-4.1 was available only through OpenAI's API, and OpenAI said some of its improvements would be gradually incorporated into the ChatGPT version of GPT-4o. In May 2025, OpenAI also added GPT-4.1 to ChatGPT for paid plans.

What is the context window size for GPT-4.1?

All GPT-4.1 variants support up to 1 million tokens of context, allowing you to process entire codebases, comprehensive documentation, or multiple large files in a single request.

How does GPT-4.1 compare to competitors like Claude and Gemini?

GPT-4.1 leads in software engineering tasks (54.6% on SWE-bench Verified vs ~45% estimated for Claude) and performs strongly on instruction following (38.3% on MultiChallenge). Its 1M-token context window is five times Claude 3.5's 200K and on par with Gemini's long-context models, and its pricing is cost-effective for coding applications.

What makes GPT-4.1 better for coding than previous models?

GPT-4.1 excels at diff format generation (52.9% vs 18.2% for GPT-4o), makes 78% fewer extraneous edits, follows complex instructions more reliably, and can analyze entire codebases simultaneously due to its 1 million token context window.

Can GPT-4.1 replace human developers?

GPT-4.1 is designed to augment rather than replace developers. It excels at routine coding tasks, code analysis, and following specifications, but human oversight remains essential for architecture decisions, quality assurance, and business requirement translation.

How do I get started with GPT-4.1?

Access GPT-4.1 through OpenAI's API by setting up an API key and using the model identifier "gpt-4.1" or "gpt-4.1-mini". Start with the mini variant for cost-effective experimentation before scaling to the full model for production use.
