Technology & AI

Fedor Zhilkin

Dec 12, 2025

On July 9, 2025, xAI introduced Grok 4, which it described as the most intelligent model in the world at the time of release. The model includes native tool use and real-time search integration, and it is available to SuperGrok and Premium+ subscribers as well as through the xAI API. At the same time, xAI announced a new SuperGrok Heavy tier with access to Grok 4 Heavy, the most powerful version of the model.

In this guide, we break down xAI's approach to training with large-scale reinforcement learning, the built-in tools for X and web search, the model's results on some of the hardest benchmarks, and its practical applications.

What Is Grok 4 from xAI

Grok 4 is an artificial intelligence model from xAI built around large-scale reinforcement learning. Its predecessor, Grok 3, achieved strong results by scaling next-token prediction. For Grok 4, xAI went further and used Colossus, its 200,000-GPU cluster, to run reinforcement learning that refines the model's reasoning at pre-training scale.

The key difference from other AI models lies in the training data: Grok 4 was trained not only on mathematics and programming but on many other domains with verifiable data. The training run used over an order of magnitude more compute than xAI had used previously, and infrastructure and algorithmic innovations increased training compute efficiency roughly sixfold.

Two Model Versions:

Grok 4: the standard version, with strong general capabilities

Grok 4 Heavy: an extended version that uses parallel computation

Both versions are available on grok.com and in the iOS and Android apps; Grok 4 is also available through the xAI API.

Grok 3 Reasoning: Scaling Training

While working on Grok 3 Reasoning, the xAI team noticed trends suggesting that reinforcement learning could be scaled much further. Grok 3 had gained broad world knowledge through next-token prediction, and Grok 3 Reasoning learned to think longer about problems to improve accuracy.

For Grok 4, the team used the entire Colossus cluster for reinforcement learning at pre-training scale, which was made possible by breakthroughs in infrastructure and algorithms. A critical factor was expanding the verifiable training data: earlier models were trained mainly on mathematics and code, while Grok 4's coverage extends to many more domains.

The result exceeded expectations: performance improved smoothly even as training used over an order of magnitude more compute than previous experiments, with stable gains throughout the run.
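The core idea behind "verifiable training data" is that every problem comes with an answer a program can check, so the reinforcement learning reward needs no human judge. xAI has not published its reward functions. The sketch below is a minimal, hypothetical checker that assumes completions mark their final answer with `\boxed{...}` (a common convention in math datasets); the function names are illustrative.

```python
import re
from fractions import Fraction
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a completion, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary RL reward: 1.0 if the final answer matches the reference, else 0.0.

    Hypothetical checker for illustration only; not xAI's implementation.
    """
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no parseable final answer earns no reward
    try:
        # Compare numerically so that "0.5" and "1/2" count as the same answer.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        # Non-numeric answers fall back to exact string comparison.
        return 1.0 if answer == reference.strip() else 0.0


if __name__ == "__main__":
    print(verifiable_reward(r"So the probability is \boxed{0.5}.", "1/2"))  # 1.0
    print(verifiable_reward(r"\boxed{3}", "4"))  # 0.0
```

A binary signal like this is what makes it possible to extend training beyond math and code: any domain whose answers can be checked automatically can, in principle, supply rewards.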

Built-in Tool Use — AI Revolution

Grok 4 was trained to use tools through reinforcement learning. This lets the model augment its thinking with a code interpreter and web browsing in situations that are typically difficult for language models.

When searching for real-time information or answering complex research questions, Grok 4 chooses its own search queries, gathers information from across the web, and digs as deep as needed to produce a quality answer. It is not limited to a single query: it can conduct multi-step research much as a human researcher would.

Tools for Working with X:

Grok 4 also has dedicated tools for searching within the X platform. It uses advanced keyword and semantic search and can view media to improve answer quality. This gives it a distinctive advantage: access to real-time discussions and trends on the platform.
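xAI has not documented how Grok's X search tools rank results. As a rough illustration of combining keyword and semantic signals, the sketch below scores each post by a weighted mix of keyword overlap and cosine similarity between embedding vectors. The `Post` type, the hand-written three-dimensional embeddings, and the `alpha` weight are all hypothetical; a real system would use a learned embedding model and a proper search index.

```python
import math
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    embedding: list  # hypothetical precomputed semantic embedding (list of floats)


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear as whole words in the post."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def cosine(a: list, b: list) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(query, query_embedding, posts, alpha=0.5, top_k=3):
    """Rank posts by alpha * keyword score + (1 - alpha) * semantic similarity."""
    scored = [
        (alpha * keyword_score(query, p.text)
         + (1 - alpha) * cosine(query_embedding, p.embedding), p)
        for p in posts
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:top_k]]


if __name__ == "__main__":
    posts = [
        Post("today's connections puzzle was brutal", [0.9, 0.1, 0.0]),
        Post("leg day at the gym", [0.1, 0.9, 0.0]),
        Post("word puzzle with body parts hidden in words", [0.8, 0.2, 0.1]),
    ]
    for post in hybrid_search("word puzzle legs", [0.85, 0.15, 0.05], posts):
        print(post.text)
```

Keyword overlap alone misses posts that use different wording, which is where the semantic component helps; `alpha` controls the balance between the two.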

Example of working with X search:

When a user writes "I remember a popular post from a few days ago about a crazy word puzzle that had something to do with legs. Can you help me find it?" Grok 4:

Analyzes the query and formulates a search strategy

Runs multiple searches with different parameters

Reviews the results and refines the search

Finds the viral post, which had 89,123 likes

Provides context: it was NYT Connections puzzle #756, in which players had to find words ending in homophones (same-sounding words) of body parts

The entire reasoning process is shown to the user, so you can see how the model thinks and makes decisions.
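The steps above follow a common agentic search pattern: run a query, inspect the results, reformulate, and stop once something relevant turns up or a step budget runs out. Below is a minimal sketch of that loop. The stub index, the list of reformulated queries, and the "most liked match wins" rule are hypothetical stand-ins, since xAI has not published how Grok 4 plans or refines its searches. The returned trace plays the role of the visible reasoning log.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical stand-in for an X search index.
FAKE_POSTS = [
    {"text": "leg day is the best day", "likes": 120},
    {"text": "this connections puzzle about body parts broke my brain", "likes": 1500},
    {"text": "crossword tips for beginners", "likes": 40},
]


def fake_search(query: str) -> List[dict]:
    """Return posts containing every word of the query (toy keyword search)."""
    words = query.lower().split()
    return [p for p in FAKE_POSTS if all(w in p["text"].lower() for w in words)]


def iterative_search(
    candidate_queries: List[str],
    search: Callable[[str], List[dict]],
    is_match: Callable[[dict], bool],
    max_steps: int = 5,
) -> Tuple[Optional[dict], List[Tuple[str, int]]]:
    """Try queries in order until one yields a match; return (match, trace)."""
    trace = []  # (query, number of hits) for each step, like a visible reasoning log
    for query in candidate_queries[:max_steps]:
        results = search(query)
        trace.append((query, len(results)))
        matches = [r for r in results if is_match(r)]
        if matches:
            # Prefer the most-liked match as a proxy for "the popular post".
            return max(matches, key=lambda r: r["likes"]), trace
    return None, trace


if __name__ == "__main__":
    post, trace = iterative_search(
        ["word puzzle legs", "puzzle legs", "connections puzzle body parts"],
        fake_search,
        is_match=lambda r: "puzzle" in r["text"],
    )
    for query, hits in trace:
        print(f"{query!r}: {hits} hits")
    print(post["text"] if post else "not found")
```

In a real agent, the model itself would generate each reformulated query after reading the previous results, rather than working through a fixed list.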

Grok 4 Heavy — Parallel Computation for Maximum Accuracy

Grok 4 Heavy goes further with parallel test-time compute: while answering, it can consider multiple hypotheses at once, setting new standards for performance and reliability.

Grok 4 Heavy became the first model to score 50% on the text-only subset of Humanity's Last Exam, a benchmark "designed to be the final closed-ended academic benchmark of its kind." According to xAI, the model achieves top results on most of the academic benchmarks it reported.

How the Heavy Version Works:

When queried, Grok 4 Heavy launches multiple agents in parallel, each reasoning for approximately 10 minutes. The agents work independently and explore different solution approaches. The system then compares their results and selects the best answer.
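xAI has not published how Grok 4 Heavy combines its agents' answers. A simple, well-known way to aggregate independent attempts is majority voting (often called self-consistency). The sketch below uses it as a stand-in: it runs several hypothetical agent functions concurrently and returns the most common answer together with its share of the votes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

Agent = Callable[[str], str]  # hypothetical: takes a question, returns an answer


def run_heavy(question: str, agents: List[Agent]) -> Tuple[str, float]:
    """Run agents in parallel on one question and majority-vote their answers.

    Returns (winning answer, fraction of agents that gave it). Ties go to the
    answer that appears first in agent order.
    """
    if not agents:
        raise ValueError("need at least one agent")
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        # pool.map preserves agent order, so results line up with `agents`.
        answers = list(pool.map(lambda agent: agent(question), agents))
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)


if __name__ == "__main__":
    agents = [lambda q: "42", lambda q: "42", lambda q: "41", lambda q: "42"]
    print(run_heavy("What is 6 * 7?", agents))  # ('42', 0.75)
```

Majority voting only works when answers can be compared directly (a number or a multiple-choice letter). For open-ended answers, a system would need an extra step, such as having a model judge or merge the candidates.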

Test Results — New Intelligence Level

According to xAI's published results, Grok 4 achieves the best scores on several key benchmarks, demonstrating a leap in frontier intelligence.

Humanity's Last Exam (full set):

Grok 4 Heavy (with Python + Internet): 44.4%

Grok 4 (with Python + Internet): 38.6%

Gemini Deep Research: 26.9%

o3 (with Python + Internet): 24.9%

This is an expert-level test at the frontier of human knowledge. On the text-only portion, Grok 4 Heavy with tools scored 50.7%.

ARC-AGI V2 (abstraction and reasoning):

Grok 4: 15.9%

Claude Opus 4: 8.6%

o3: 6.5%

Grok 4's score is nearly double Claude Opus 4's (15.9% vs. 8.6%, about 1.85 times), a substantial advance in abstract reasoning.

Vending-Bench (agentic tasks):

Grok 4: $4,694.15 net worth, 4,569 units sold

Claude Opus 4: $2,077.41 net worth, 1,412 units sold

Humans: $844.05 net worth, 344 units sold

On agentic tasks, Grok 4 clearly outperforms both other AI models and the human baseline: its final net worth is roughly 2.3 times Claude Opus 4's and about 5.6 times the human result.

Mathematics Tests:

AIME 2025 (math competitions):

Grok 4 Heavy (with Python): 100%

Grok 4 (with Python): 98.8%

o3 (with Python): 98.4%

USAMO 2025 (olympiad math proofs):

Grok 4 Heavy (with Python): 61.9%

Gemini Deep Think: 49.4%

Grok 4: 37.5%

HMMT 2025 (competitive mathematics):

Grok 4 Heavy (with Python): 96.7%

Grok 4 (with Python): 93.9%

Programming:

LiveCodeBench (January-May, competitive programming):

Grok 4 Heavy (with Python): 79.4%

Grok 4 (with Python): 79.3%

Gemini 2.5 Pro: 74.2%

GPQA (graduate-level science questions):

Grok 4 Heavy (with Python): 88.4%

Grok 4: 87.5%

Grok 4 API for Developers

The Grok 4 API gives developers access to frontier multimodal understanding with a 256,000-token context window, along with advanced reasoning for complex tasks involving text and images.
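To illustrate what a call might look like, the sketch below uses only the Python standard library to build a request to xAI's OpenAI-compatible chat completions endpoint, optionally attaching an image. The model alias `grok-4` and the `XAI_API_KEY` environment variable name are assumptions; check the model list and authentication details in xAI's API documentation before relying on them.

```python
import json
import os
import urllib.request
from typing import Optional

API_URL = "https://api.x.ai/v1/chat/completions"  # xAI's OpenAI-compatible endpoint
MODEL = "grok-4"  # assumed model alias; confirm against xAI's model list


def build_request(prompt: str, image_url: Optional[str] = None,
                  api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request, optionally with an image."""
    api_key = api_key or os.environ.get("XAI_API_KEY", "")  # assumed variable name
    content = [{"type": "text", "text": prompt}]
    if image_url:
        # Image parts use the OpenAI-style "image_url" content format.
        content.insert(0, {"type": "image_url", "image_url": {"url": image_url}})
    body = {"model": MODEL, "messages": [{"role": "user", "content": content}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, image_url: Optional[str] = None) -> str:
    """Send the request and return the assistant's reply text."""
    # Reasoning models can take minutes, so the timeout is generous.
    with urllib.request.urlopen(build_request(prompt, image_url), timeout=600) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Keeping `build_request` separate from `ask` makes it possible to inspect the payload without sending anything or spending tokens.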

Key API Capabilities:

Real-time search across X, the web, and news sources through the Live Search API

Built-in tool use for up-to-date responses

Enterprise-grade security and compliance: SOC 2 Type 2, GDPR, and CCPA

Integration with major cloud providers for scaled deployment

The API makes it possible to build new AI solutions with the security guarantees that sensitive applications require. xAI has said Grok 4 will soon be available through its cloud partners, which should simplify enterprise adoption.
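For real-time data, a request can ask the model to search as part of answering. The sketch below builds such a request body using the `search_parameters` field from xAI's Live Search documentation around Grok 4's launch. Treat the field names, the source types, and the `grok-4` alias as assumptions, since the current API may use a different mechanism.

```python
import json
from typing import Sequence


def live_search_body(prompt: str, sources: Sequence[str] = ("web", "x", "news"),
                     mode: str = "auto") -> dict:
    """Build a chat-completions body that lets Grok search the given sources.

    Field names follow xAI's Live Search format at Grok 4's launch (assumed here).
    mode="auto" lets the model decide whether to search; "on" and "off" force it.
    """
    return {
        "model": "grok-4",  # assumed model alias
        "messages": [{"role": "user", "content": prompt}],
        "search_parameters": {
            "mode": mode,
            "sources": [{"type": source} for source in sources],
        },
    }


if __name__ == "__main__":
    body = live_search_body("What are people on X saying about Grok 4 today?", sources=("x",))
    print(json.dumps(body, indent=2))
```

The resulting dictionary can be sent with any HTTP client to the same chat completions endpoint used for ordinary requests.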

Grok 4 Voice Mode: A New Level of AI Conversation

The updated voice mode offers improved realism, responsiveness, and intelligence. xAI introduced a new calm voice and redesigned the conversation flow to feel more natural.

A key new feature: Grok can now see what you see. Point your camera, start speaking, and Grok analyzes the scene and responds in real time, directly in the voice chat. According to xAI, this runs on a model trained in-house using advanced reinforcement learning and speech compression techniques.

Turn on video during a voice chat and Grok will analyze what it sees while talking with you. This creates a distinctive multimodal experience: the model doesn't just listen but also sees the context of your question.

Who Grok 4 Is For

Grok 4 is designed for professionals working on complex tasks. Researchers get a model that can conduct deep analysis using multiple tools, and developers will appreciate its strong results on competitive mathematics (100% on AIME 2025 for Grok 4 Heavy with Python) and competitive programming.

Data analysts and scientists will find Grok 4 a powerful assistant for research tasks. Built-in search integration provides current information without manual data collection, and the parallel computation in the Heavy version adds the reliability that decision-making depends on.

X users get a distinctive advantage from Grok 4's deep platform integration: the model can search posts, analyze trends, and find the context of discussions. This makes it well suited to content creators and community managers.

Students and teachers will appreciate voice mode with video: they can point the camera at a problem and get a real-time explanation, which can change how learning happens.

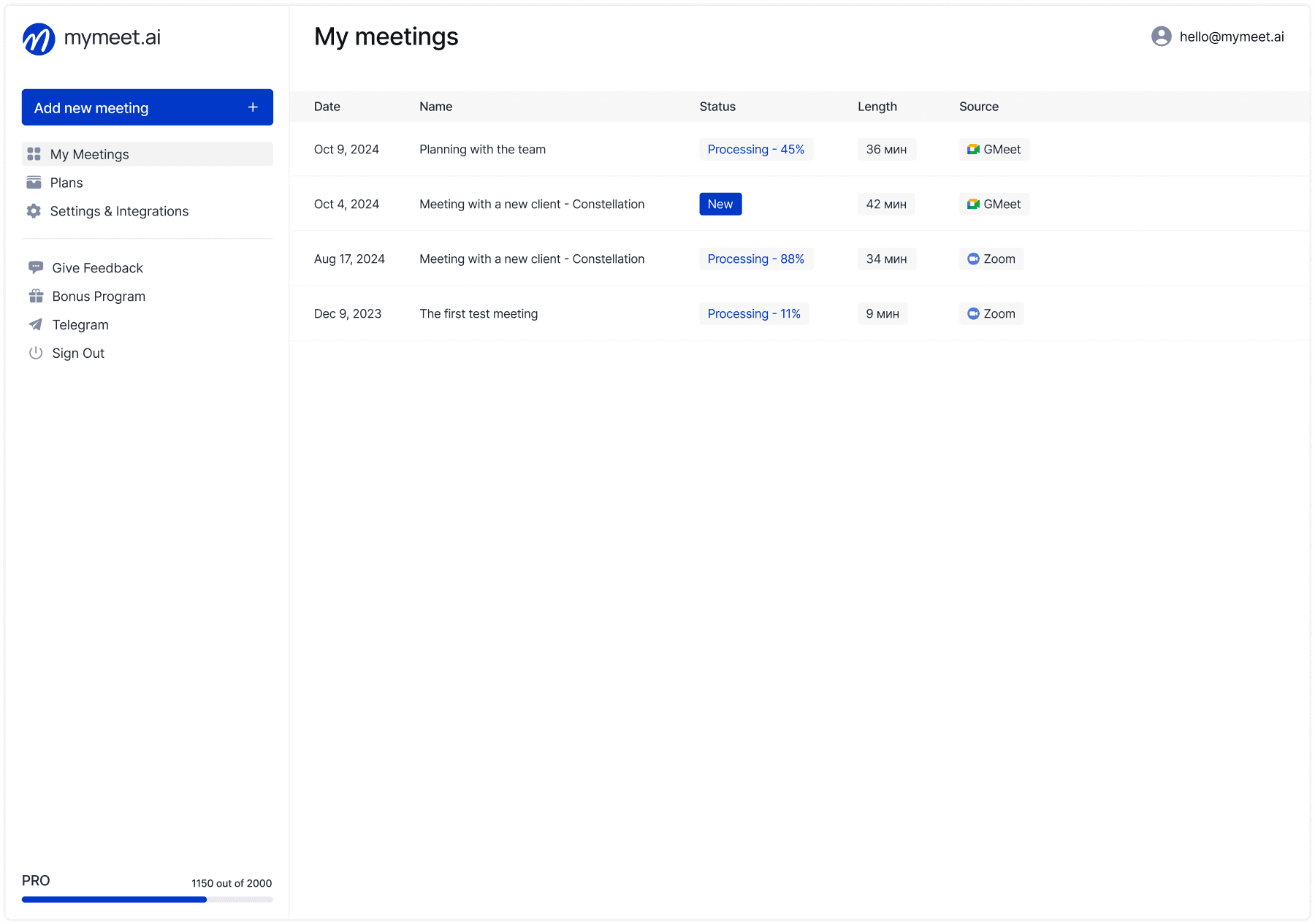

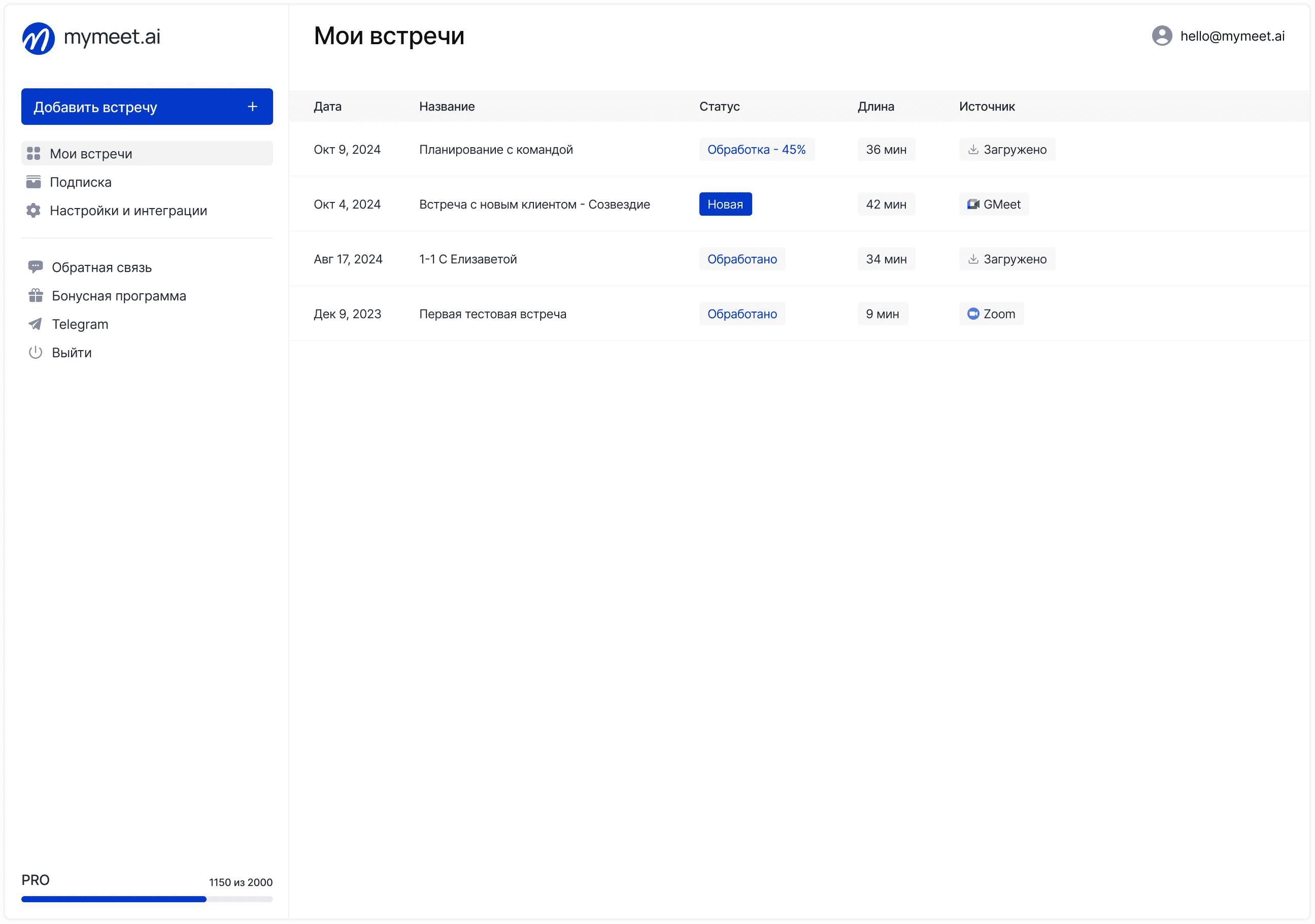

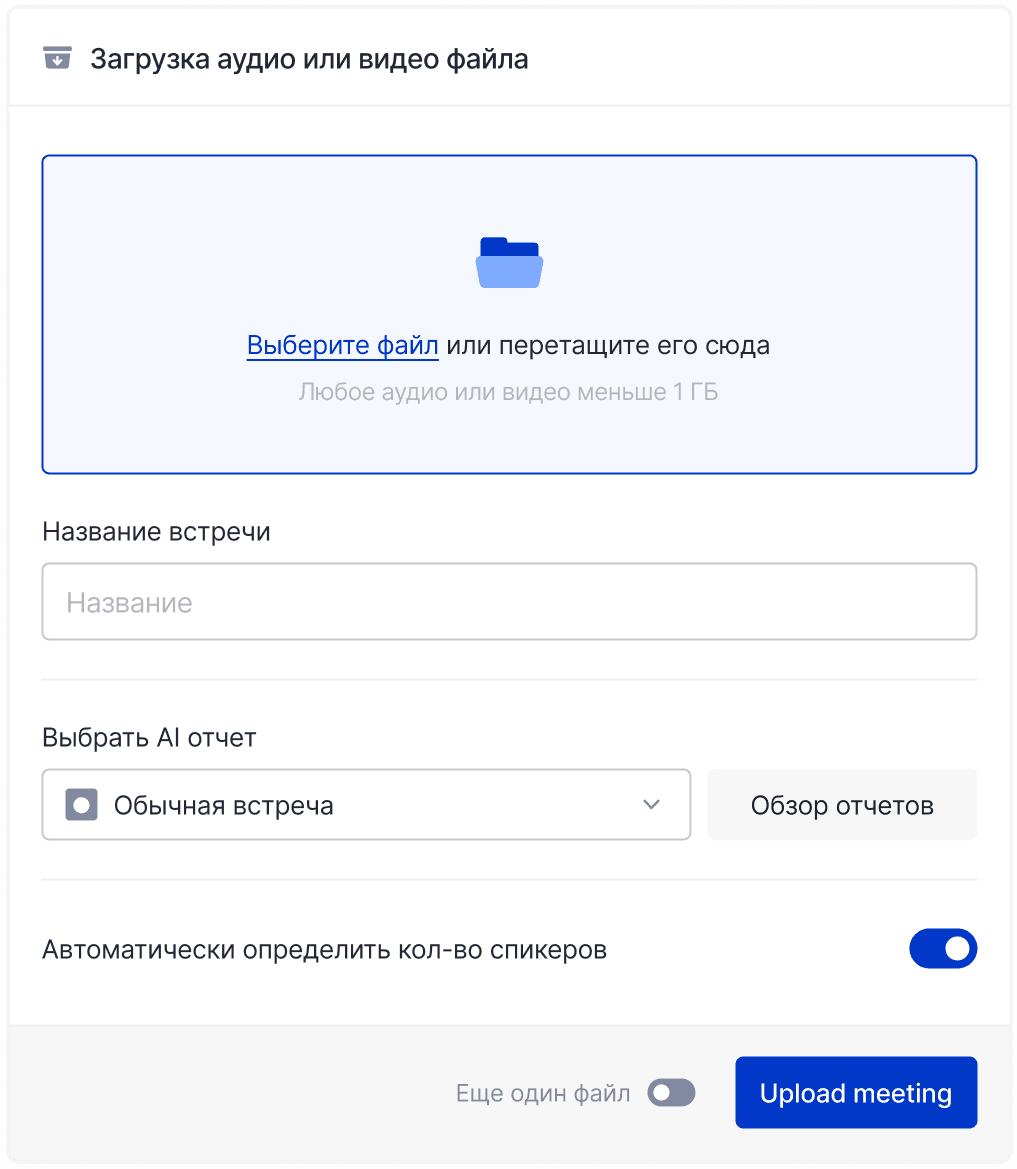

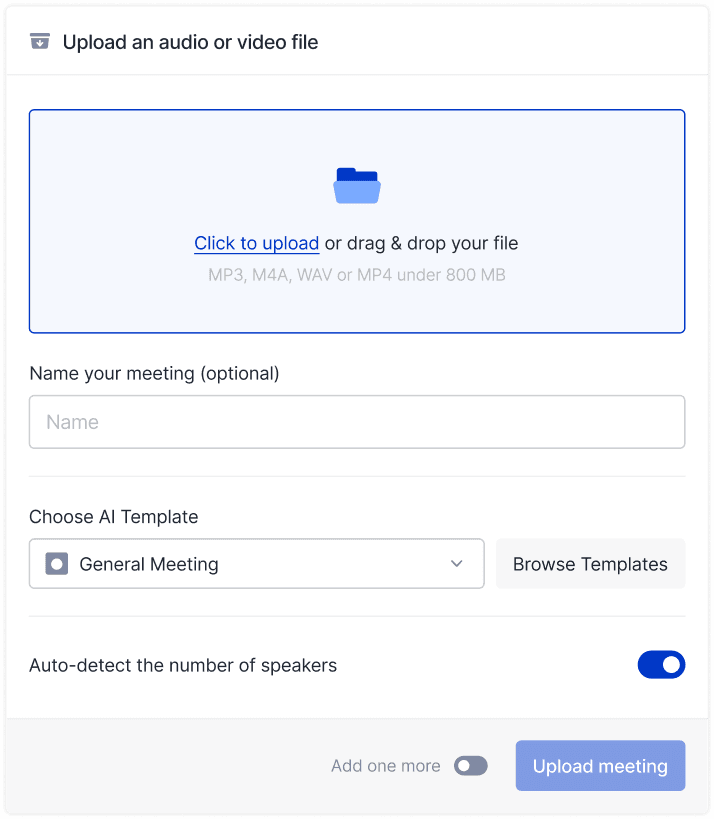

mymeet.ai for Recording and Analyzing AI Meetings

Grok 4 shows how AI is becoming a powerful tool for research and analysis. But for meetings and teamwork, you need specialized solutions.

mymeet.ai is an AI assistant for online meetings. It automatically records calls, creates transcripts with speaker identification, and generates structured reports.

What mymeet.ai Can Do:

✅ Automatic recording: connects to Zoom, Google Meet, Microsoft Teams, and Yandex.Telemost

✅ Accurate transcription: 95% accuracy for Russian, with support for 73 languages

✅ AI reports: structured summaries with decisions, tasks, and next steps

✅ Smart search: find what was discussed in any meeting by asking the AI

✅ Integrations: calendar sync and sending reports to CRM systems

✅ Security: data stored in Russia, in compliance with Federal Law No. 152-FZ on personal data

✅ Multilingual: works with teams that speak different languages

✅ Export: download in DOCX, PDF, or JSON

Case study: a research team conducted 15-20 user product interviews per week, and manual transcription and analysis took 25-30 hours. After implementing mymeet.ai, the process was fully automated: the system recorded the interviews, created detailed transcripts with timestamps, and generated reports with key insights and user pain points. Processing time dropped to 2-3 hours for the final review, and analysis quality improved because nothing was lost between interviews.

Try mymeet.ai for free: 180 minutes of processing, no card required.

Grok 4 Pros and Cons

Grok 4 sets new standards for AI, but like any technology it has strengths and limitations. Let's look at what makes the model unique and where there is room for improvement.

Grok 4 Pros:

✅ Top results on difficult benchmarks: first place on Humanity's Last Exam (50.7% on the text-only subset for Grok 4 Heavy with tools) and ARC-AGI V2 (15.9%), plus strong performance in mathematics and programming

✅ Built-in tool use: the model decides on its own when to search for information, run the code interpreter, or analyze content, without explicit commands

✅ Distinctive X integration: deep platform access through semantic search, media viewing, and real-time trend analysis

✅ Parallel computation in Heavy: multiple agents work simultaneously, adding reliability for critical tasks

✅ Voice mode with video: point the camera at something and get real-time spoken analysis

✅ Large context window: 256,000 tokens through the API, enough for working with large documents

✅ Enterprise security: SOC 2 Type 2 attestation plus GDPR and CCPA compliance for corporate use
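To check whether a document is likely to fit in the 256,000-token window before sending it, a rough character-based estimate is often enough. The ratio of about four characters per token used below is a common rule of thumb for English text, not Grok's actual tokenizer, so treat results near the limit with caution. The function names and the default output reserve are hypothetical.

```python
CONTEXT_WINDOW = 256_000  # tokens available through the API, per the figure above
CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; not Grok's tokenizer


def estimated_tokens(text: str) -> int:
    """Rough token estimate using ceiling division by CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 8_000) -> bool:
    """True if the prompt estimate plus an output/reasoning reserve fits the window."""
    return estimated_tokens(text) + reserved_for_output <= CONTEXT_WINDOW


if __name__ == "__main__":
    report = "quarterly results " * 20_000  # 360,000 characters
    print(estimated_tokens(report), fits_in_context(report))  # 90000 True
```

Text in other languages, such as Russian, typically uses more tokens per character, so a lower `CHARS_PER_TOKEN` would be the more cautious choice there.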

Grok 4 Cons:

⚠️ Subscription-only access: full functionality requires SuperGrok or Premium+, with no free access

⚠️ Heavy version is slow: reasoning on complex tasks can take up to about 10 minutes, so it is not suited to quick answers

⚠️ Focus on the X ecosystem: many distinctive capabilities are tied to the X platform, which is a drawback for people who don't use it actively

⚠️ Usage-based API pricing: API calls are billed per token at rates listed in xAI's developer documentation, which makes budgeting for long, reasoning-heavy workloads harder

⚠️ Strong competition: GPT-5, Claude 4, and Gemini 2.5 also perform well, so the best choice depends on the specific task

⚠️ Voice mode only in the apps: the multimodal capabilities are available through the mobile apps, not the web interface

Overall, Grok 4's strengths make it a strong choice for research tasks and for working with the X platform. The Heavy version sets a new standard for reliability, though at the cost of speed.

Conclusion

Grok 4 represents an important step in AI development: scaling reinforcement learning to pre-training level produced results that previously seemed out of reach. The model doesn't just solve tasks more accurately than competitors; it does so through built-in tool use and deep integration with real-time data.

Parallel computation in Grok 4 Heavy sets a new reliability standard for AI models. When multiple agents solve a task independently and their results are combined, the answer earns the kind of confidence that important decisions require.

For professionals working in research, data analysis, or complex programming, Grok 4 is a powerful tool, and X users get an extra advantage from its deep platform integration.

xAI plans to keep scaling reinforcement learning, expanding from verifiable tasks to complex real-world problems. It also plans to improve multimodal capabilities, integrating vision, audio, and other modalities for more intuitive interactions.

Try Grok 4 at grok.com, or through the xAI API if you are a developer.

Frequently Asked Questions (FAQ)

How does Grok 4 differ from Grok 3?

Grok 3 achieved its results by scaling next-token prediction. Grok 4 goes further, using reinforcement learning at pre-training scale on a cluster of 200,000 GPUs. Grok 4 also has built-in tool use (code, search, working with X), whereas Grok 3 required explicit commands to use tools.

How much does Grok 4 cost?

Grok 4 is available with a SuperGrok or X Premium+ subscription, and the exact cost depends on region and plan. Grok 4 Heavy requires the separate SuperGrok Heavy tier. API usage is billed per token; check xAI's developer documentation for current rates.

What's the difference between Grok 4 and Grok 4 Heavy?

Grok 4 is the standard version, with excellent benchmark results. Grok 4 Heavy uses parallel computation while answering: it launches multiple agents simultaneously, each reasoning for approximately 10 minutes. The Heavy version is more accurate and reliable but significantly slower.

How to get access to Grok 4 API?

Register on the xAI website and request API access. Once approved, you receive an API key for integration. Grok 4 will also be available through cloud partners (AWS, Azure, GCP) for enterprise clients.

Does Grok 4 work with the Russian language?

Yes, Grok 4 supports Russian. The model is trained on many languages and can respond, reason, and use tools in Russian, though quality may differ slightly from English.

Can Grok 4 be used without an X Premium subscription?

No. Using Grok 4 through grok.com or X requires a SuperGrok or Premium+ subscription. The alternative is to use the xAI API directly, which requires technical skills and paid API access.

What is voice mode with video in Grok 4?

Voice mode lets you talk with Grok by voice. The video feature adds the ability to share your camera: the model sees what you show it and responds in real time. This works through the Grok mobile apps on iOS and Android.

How does Grok 4 compare with GPT-5 and Claude 4?

Grok 4 leads on ARC-AGI V2 (15.9% vs. about 8.6% for Claude Opus 4) and on Humanity's Last Exam (50.7% on the text-only subset for Grok 4 Heavy). In mathematics and programming, its results are comparable to o3's. Its distinctive advantages are X integration and built-in tool use.

Is it safe to use Grok 4 for corporate data?

The Grok 4 API offers enterprise security: SOC 2 Type 2 attestation plus GDPR and CCPA compliance. For maximum security, use Grok 4 through the xAI API with its additional enterprise guarantees rather than through the public X interface.

Will there be Grok 5 after Grok 4?

xAI has stated that it will continue scaling reinforcement learning. Future versions are expected to expand from verifiable tasks to complex real-world problems and to improve multimodal capabilities. There is no specific release date for Grok 5 yet.
