
Fedor Zhilkin

Mar 5, 2025

On September 12, 2024, OpenAI added another specialized model to its growing lineup: o1 mini. This cost-efficient reasoning model joins the company's suite of purpose-built AI systems designed for specific tasks rather than general-purpose applications.

Unlike broader models such as GPT-4o, o1 mini focuses on STEM reasoning tasks and excels at mathematics and coding challenges in particular. What makes the model noteworthy is that it comes close to the performance of its larger sibling, o1, on many technical benchmarks while running faster and at significantly lower cost.

This comprehensive guide explores everything you need to know about o1 mini—from its technical capabilities and performance benchmarks to practical applications, access options, and comparisons with other OpenAI models.

What is O1 Mini? Understanding OpenAI's Specialized Reasoning Model

O1 mini represents OpenAI's continued push toward specialized AI models optimized for specific tasks rather than general-purpose applications. Released in September 2024, it's a smaller, faster, and more cost-efficient version of OpenAI's o1 reasoning model.

The "mini" designation doesn't mean severely compromised performance. Rather, o1 mini is designed to deliver comparable reasoning capabilities to the full-sized o1 model in STEM domains while requiring fewer computational resources. This efficiency comes from its specialized training approach—optimized specifically for STEM reasoning during pretraining, then refined using the same high-compute reinforcement learning pipeline as the original o1.

OpenAI developed o1 mini to address a common challenge in AI deployment: while large, general-purpose models excel across many tasks, they're often unnecessarily powerful (and expensive) for specialized applications. By creating a model focused primarily on technical reasoning rather than broad world knowledge, OpenAI has delivered a solution that's:

80% cheaper than o1 preview

Significantly faster at inference

Nearly as capable on technical reasoning tasks

Optimized for practical deployment

This approach reflects a growing trend in AI development toward purpose-built models that deliver exceptional performance in specific domains rather than trying to excel at everything.

O1 Mini vs. O1: Key Differences Between OpenAI's Reasoning Models

While both o1 mini and o1 belong to OpenAI's reasoning-focused model family, they differ in several important respects:

| Feature | O1 Mini | O1 |
|---|---|---|
| Size | Smaller, more efficient model | Larger model with more parameters |
| AIME (mathematics) | 70.0% accuracy | 74.4% accuracy |
| Codeforces (programming) | 1650 Elo rating | 1673 Elo rating |
| HumanEval (coding) | 92.4% accuracy | Not reported alongside o1 mini (o1 preview: 92.4%) |
| Processing Speed | Approximately 3–5x faster than o1 preview | Standard processing speed |
| API Cost | 80% cheaper than o1 preview | Higher cost reflecting larger model size |
| World Knowledge | Limited outside STEM domains | Broader knowledge across domains |
| Best For | Fast, efficient STEM reasoning | Strongest possible reasoning across domains |

These performance metrics demonstrate that for specialized technical tasks, o1 mini delivers capabilities remarkably close to its larger counterpart while offering significant advantages in speed and cost-efficiency.

O1 Mini vs. O1 Preview: How the Smaller Model Compares

OpenAI released o1 preview alongside o1 mini on September 12, 2024, as an early version of its full o1 reasoning model. Despite being smaller, o1 mini outperforms o1 preview on several technical benchmarks, with tradeoffs elsewhere:

| Feature | O1 Mini | O1 Preview | Difference |
|---|---|---|---|
| AIME (mathematics) | 70.0% accuracy | 44.6% accuracy | +25.4 percentage points |
| Codeforces (programming) | 1650 Elo rating | 1258 Elo rating | +392 Elo |
| MATH-500 | 90.0% accuracy | 85.5% accuracy | +4.5 percentage points |
| Processing Speed | 3–5x faster than o1 preview | Baseline | n/a |
| API Cost | 80% cheaper (one-fifth the price) | 5x the price of o1 mini | n/a |
| GPQA (science) | 60.0% accuracy | 73.3% accuracy | -13.3 percentage points |
| Non-STEM Knowledge | More limited | Broader | O1 preview ahead |
| Best For | STEM-focused applications | Balanced reasoning and knowledge | Depends on use case |

These improvements demonstrate that despite being smaller and more efficient, o1 mini actually delivers stronger performance than o1 preview on many technical tasks, particularly in mathematics and programming. The tradeoff comes in reduced performance on tasks requiring broader knowledge outside STEM domains.

O1 Mini vs. GPT-4o: Comparing Performance and Capabilities

O1 mini and GPT-4o represent different approaches to AI model design—specialized versus general-purpose. Understanding their relative strengths helps determine which is better suited for particular tasks.

| Feature | O1 Mini | GPT-4o | Advantage |
|---|---|---|---|
| MATH-500 | 90.0% | 60.3% | O1 Mini (+29.7 points) |
| GPQA (science) | 60.0% | 53.6% | O1 Mini (+6.4 points) |
| MMLU (broad knowledge) | 85.2% | 88.7% | GPT-4o (+3.5 points) |
| HumanEval (coding) | 92.4% | 90.2% | O1 Mini (+2.2 points) |
| Multimodal Capabilities | Text only | Text, images, audio | GPT-4o |
| Processing Speed | Reasons internally before answering, which adds latency | Answers without a hidden reasoning phase | GPT-4o (latency) |
| General Knowledge | Limited outside STEM | Comprehensive | GPT-4o |
| Creative Tasks | Less capable | Strong performance | GPT-4o |
| Mathematical Calculations | Preferred in human evaluations | Less preferred | O1 Mini |
| Data Analysis | Preferred in human evaluations | Less preferred | O1 Mini |
| Personal Writing | Less preferred | Preferred in human evaluations | GPT-4o |
| Best For | STEM reasoning, mathematics, coding | Broad capabilities, creative tasks | Depends on use case |

These results demonstrate o1 mini's clear advantage for specialized technical reasoning tasks, while GPT-4o maintains superiority in broader capabilities and multimodal features.

What Are the Main Differences Between O3-Mini and O1-Mini?

OpenAI offers two small reasoning models in its "mini" line: o1-mini, released in September 2024, and o3-mini, released on January 31, 2025. Both target STEM reasoning, but they differ in capability, speed, and API features.

| Category | O1-mini | O3-mini |
|---|---|---|
| Core Architecture | Optimized for STEM reasoning during pretraining, then trained with the same high-compute reinforcement learning pipeline as o1. | A newer small reasoning model that also targets STEM (science, math, and coding), with a selectable low, medium, or high reasoning effort. |
| Task Performance | Strong on complex mathematical problems, coding challenges, and systematic step-by-step reasoning for a model of its size. | OpenAI reports that at low reasoning effort it performs comparably to o1-mini, and at medium effort it matches o1 on math, coding, and science. |
| Speed and Efficiency | Much faster and cheaper than o1 preview. | OpenAI reports faster average responses than o1-mini; lower reasoning effort trades some accuracy for speed. |
| Ideal Use Cases | Educational applications in STEM fields, technical problem-solving, coding assistance, and mathematical modeling, especially where an integration is already built around it. | The same STEM and coding workloads, plus applications that need API features o1-mini lacks, such as function calling and structured outputs. |

When choosing between these models, consider the complexity of the reasoning tasks you'll be handling, how much latency you can tolerate (o3-mini's adjustable reasoning effort lets you trade depth for speed), whether you need API features such as function calling, and your budget constraints.

Is O3 Mini Better Than O1 Mini? Performance Comparison

When comparing o3-mini and o1-mini, OpenAI's published results favor the newer o3-mini on most STEM measures. The right choice still depends on your use case, your latency needs, and any existing integrations.

| Capability | O1 Mini | O3 Mini | Better Option |
|---|---|---|---|
| Complex Mathematics | Strong for its size | Comparable at low reasoning effort; OpenAI reports higher accuracy at medium and high effort | O3 Mini |
| Programming Tasks | Strong on algorithmic problems | OpenAI reports stronger coding results, especially at higher reasoning effort | O3 Mini |
| Step-by-Step Reasoning | Fixed reasoning depth | Reasoning depth adjustable (low, medium, high) | O3 Mini for control |
| Response Speed | Moderate | Faster on average, per OpenAI | O3 Mini for speed |
| Request Throughput | Good | Excellent | O3 Mini for volume |
| User Experience | Works well for complex tasks | Lower latency also suits interactive use | O3 Mini for interactive use |
| Technical Education | Clear step-by-step explanations | Comparable explanations, with effort matched to the task | Either |
| General Applications | Less versatile | More versatile, with function calling and structured outputs in the API | O3 Mini for versatility |
| Benchmark Performance | Strong on STEM benchmarks | Higher on most of OpenAI's reported STEM benchmarks | O3 Mini |

For new projects, o3-mini is generally the stronger choice; o1-mini mainly makes sense where an existing integration is already built and tested around it.

ChatGPT O1 Mini: How to Access and Use the Model

O1 mini is available through both ChatGPT's interface and the OpenAI API. Here's how to access and effectively use this specialized reasoning model.

Access Through ChatGPT

O1 mini is available to ChatGPT Plus, Team, Enterprise, and Edu users. To use it:

Log in to your ChatGPT account

Click on the model selector at the top of the chat interface

Select "o1-mini" from the available models

Start a new conversation focused on STEM reasoning tasks

Note that o1 mini is not available on the free tier of ChatGPT.

Best Practices for Using O1 Mini

To get the most from o1 mini:

Be specific about reasoning tasks: Clearly state the problem or question you need solved

Structure complex problems: Break down multi-step problems into clearly defined components

Provide necessary context: Include relevant information and constraints

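Ask for a worked solution when you need one: o1 mini already reasons internally before answering, so OpenAI advises against chain-of-thought cues such as "think step by step"; request the steps only if you want them written out in the answer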

Verify results: For critical applications, cross-check answers against known solutions

Effective Prompt Engineering

O1 mini responds best to well-structured prompts. For example:

For a math problem: "Solve this step-by-step: Find all positive integers n such that n^2 + 100 is divisible by n + 10."

For a coding task: "Write a Python function that takes a list of integers and returns the longest increasing subsequence. Explain your approach."
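Because answers to prompts like these should be checked against known solutions, it helps to have independent reference code. The Python below is a sketch with illustrative function names (not from any library): it brute-forces the divisibility problem, confirms the closed-form argument that n + 10 must divide 200 (since n^2 + 100 = (n + 10)(n - 10) + 200), and gives a standard O(n log n) longest-increasing-subsequence routine to compare against the model's code.

```python
def divisible_solutions(limit: int = 10_000) -> list[int]:
    """Brute force: positive n up to `limit` where (n + 10) divides n^2 + 100."""
    return [n for n in range(1, limit + 1) if (n * n + 100) % (n + 10) == 0]


def divisor_solutions() -> list[int]:
    """Closed form: n^2 + 100 = (n + 10)(n - 10) + 200, so (n + 10) must divide 200.
    With n >= 1 we need divisors d of 200 with d >= 11, and then n = d - 10."""
    return [d - 10 for d in range(11, 201) if 200 % d == 0]


def longest_increasing_subsequence(nums: list[int]) -> list[int]:
    """Patience-sorting method for a longest strictly increasing subsequence."""
    tails: list[int] = []      # tails[k]: index of the smallest tail of an increasing run of length k + 1
    parent = [-1] * len(nums)  # predecessor index of each element, for reconstruction
    for i, x in enumerate(nums):
        # Binary search for the first run whose tail value is >= x.
        lo, hi = 0, len(tails)
        while lo < hi:
            mid = (lo + hi) // 2
            if nums[tails[mid]] < x:
                lo = mid + 1
            else:
                hi = mid
        if lo > 0:
            parent[i] = tails[lo - 1]
        if lo == len(tails):
            tails.append(i)
        else:
            tails[lo] = i
    # Walk predecessors back from the tail of the longest run.
    result = []
    k = tails[-1] if tails else -1
    while k != -1:
        result.append(nums[k])
        k = parent[k]
    return result[::-1]


print(divisible_solutions())  # [10, 15, 30, 40, 90, 190]
print(longest_increasing_subsequence([10, 9, 2, 5, 3, 7, 101, 18]))  # [2, 3, 7, 18]
```

Note that several subsequences can share the maximum length, so a model's answer may differ from this one and still be correct; compare lengths and check that the result is strictly increasing.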

Rate Limits and Usage Considerations

ChatGPT Plus users have higher rate limits for o1 mini compared to o1 preview, allowing more frequent use of the model. This makes it practical for extended problem-solving sessions or educational applications.

For heavy usage, the API may be more cost-effective than subscription plans, especially for applications requiring systematic processing of many problems.

O1 Mini Pricing: Understanding the Cost Structure

One of o1 mini's key advantages is its cost-efficiency compared to other reasoning models. Here's what you need to know about pricing across different access options.

API Pricing

Through the OpenAI API, o1 mini is priced at 80% less than o1 preview:

This dramatic cost reduction makes it practical for high-volume applications and extended use

The exact per-token pricing is available in OpenAI's current documentation (rates may change over time)

For applications requiring substantial reasoning capabilities, o1 mini offers one of the strongest performance-to-cost ratios in OpenAI's model lineup
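To make the pricing arithmetic concrete: "80% cheaper" means o1 mini costs one-fifth as much per token, so o1 preview is 5x the price. The sketch below uses placeholder prices, not OpenAI's actual rates, and assumes both models use the same token counts, which will not hold exactly in practice. It also accounts for the fact that hidden reasoning tokens are billed as output tokens, so they belong in any cost estimate.

```python
def request_cost(input_tokens: int, answer_tokens: int, reasoning_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request; reasoning tokens are billed at the output rate."""
    billed_output = answer_tokens + reasoning_tokens
    return (input_tokens * input_price_per_m + billed_output * output_price_per_m) / 1_000_000


# Placeholder prices in dollars per million tokens (NOT OpenAI's real rates).
MINI_IN, MINI_OUT = 1.00, 4.00
# 80% cheaper means o1 mini is one-fifth the price, i.e. o1 preview costs 5x as much.
PREVIEW_IN, PREVIEW_OUT = MINI_IN * 5, MINI_OUT * 5

# Same hypothetical request for both: 2,000 prompt, 1,000 answer, 5,000 reasoning tokens.
mini = request_cost(2_000, 1_000, 5_000, MINI_IN, MINI_OUT)
preview = request_cost(2_000, 1_000, 5_000, PREVIEW_IN, PREVIEW_OUT)
print(f"o1 mini: ${mini:.3f}, o1 preview: ${preview:.3f}")  # o1 mini: $0.026, o1 preview: $0.130
```

In this example the reasoning tokens account for most of the bill, which is why output-heavy reasoning workloads benefit most from the lower per-token price.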

Access Through Subscriptions

O1 mini is available through various ChatGPT subscription plans:

ChatGPT Plus ($20/month): Includes access to o1 mini with higher rate limits than o1 preview

ChatGPT Team: Business-oriented plan with o1 mini access

ChatGPT Enterprise: Full access with maximum rate limits and additional security features

ChatGPT Edu: Educational plan with o1 mini access for academic use

Cost Efficiency Considerations

When evaluating the cost-efficiency of o1 mini:

For applications requiring mainly STEM reasoning, o1 mini typically offers the best value

If you need both reasoning and broader capabilities, combining o1 mini with other models may be more cost-effective than using a single larger model for everything

For high-volume API usage, o1 mini's 80% cost reduction compared to o1 preview represents substantial savings

ROI Analysis

Potential returns on investment include:

Educational platforms can offer higher-quality STEM assistance at lower costs

Development teams can integrate advanced reasoning capabilities without prohibitive API expenses

Research organizations can run more extensive simulations and analyses within the same budget

For most STEM-focused applications, o1 mini is one of the most economical paths to advanced reasoning capabilities.

O1 Mini API: Developer Implementation Guide

Integrating o1 mini into your applications through the OpenAI API is straightforward. Here's what developers need to know to get started.

API Access and Authentication

Sign up for an OpenAI API account if you don't have one

Navigate to the API Keys section and generate a new secret key

Store this key securely—it will be used to authenticate your API requests

Ensure you have sufficient credit or a payment method linked to your account

Basic Implementation

Here's a simple example of how to call o1 mini using Python:
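A minimal sketch of such a call, assuming the official `openai` Python package (v1.x) with `OPENAI_API_KEY` set in the environment; the helper names `build_request` and `ask_o1_mini` are illustrative. It sends a single user message, because o1 mini does not accept system messages, and sets `max_completion_tokens` rather than `max_tokens`, which the o1 models reject.

```python
def build_request(problem: str, max_completion_tokens: int = 25_000) -> dict:
    """Keyword arguments for client.chat.completions.create() targeting o1-mini."""
    return {
        "model": "o1-mini",
        # No system message: put all instructions in the user message.
        "messages": [{"role": "user", "content": problem}],
        # Generous budget, because hidden reasoning tokens count against this limit too.
        "max_completion_tokens": max_completion_tokens,
    }


def ask_o1_mini(problem: str) -> str:
    """Send one problem to o1-mini and return the text of its answer."""
    # Imported here so build_request() can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**build_request(problem))
    return response.choices[0].message.content
```

Usage: `print(ask_o1_mini("Solve this step-by-step: Find all positive integers n such that n^2 + 100 is divisible by n + 10."))`. Keeping request construction in its own function makes it easy to log or inspect the parameters without making a network call.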

Parameter Optimization

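O1 mini exposes fewer tuning knobs than GPT-4o, and some familiar settings are rejected outright:

The temperature and top_p parameters are fixed at their default value of 1; requests that set other values return an error, so accuracy has to come from clear prompts rather than sampling settings

Use max_completion_tokens instead of max_tokens; the limit covers both the hidden reasoning tokens and the visible answer

Leave generous headroom in that budget for hard problems, because reasoning can use up most of it before any answer text is produced

Put all instructions in the user message, since o1 mini does not accept system messages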
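Because the completion budget is shared between hidden reasoning and the visible answer, it is worth checking how each response spent it. A sketch, assuming the response object returned by the `openai` v1 SDK, which reports reasoning usage in `usage.completion_tokens_details.reasoning_tokens`; a `finish_reason` of `"length"` means the budget ran out. `budget_report` is a hypothetical helper, and `SimpleNamespace` stands in for a real response so the example runs offline.

```python
from types import SimpleNamespace


def budget_report(response) -> dict:
    """Summarize how a chat completion spent its max_completion_tokens budget."""
    usage = response.usage
    reasoning = usage.completion_tokens_details.reasoning_tokens
    return {
        "reasoning_tokens": reasoning,
        "answer_tokens": usage.completion_tokens - reasoning,
        # "length" means the cap was hit, possibly before much answer text was written.
        "truncated": response.choices[0].finish_reason == "length",
    }


# Offline stand-in shaped like an SDK response (the numbers are made up).
fake = SimpleNamespace(
    choices=[SimpleNamespace(finish_reason="length")],
    usage=SimpleNamespace(
        completion_tokens=4000,
        completion_tokens_details=SimpleNamespace(reasoning_tokens=3900),
    ),
)
print(budget_report(fake))  # {'reasoning_tokens': 3900, 'answer_tokens': 100, 'truncated': True}
```

A truncated response with few answer tokens, as in this fake example, is a signal to raise `max_completion_tokens` rather than to retry with the same budget.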

Error Handling

Implement robust error handling to manage:

Rate limiting errors (429)

Authentication issues (401)

Server errors (500 series)

Timeout handling for complex reasoning tasks
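One way to organize this error handling is to decide, per status code, whether a retry can help. A sketch under stated assumptions: `error_action` is a hypothetical helper, and with the official `openai` Python SDK the status code is available as `status_code` on `openai.APIStatusError`, while timeouts and connection failures (`openai.APIConnectionError` and its subclass `openai.APITimeoutError`) have none, represented here as `None`.

```python
from typing import Optional


def error_action(status_code: Optional[int]) -> str:
    """Return "retry" or "stop" for a failed API call.

    status_code is the HTTP status of the error response, or None when the
    request timed out or never reached the server.
    """
    if status_code is None:
        return "retry"  # timeout or dropped connection; long reasoning calls can hit this
    if status_code == 401:
        return "stop"   # bad or revoked API key: retrying will not help
    if status_code == 429 or 500 <= status_code <= 599:
        return "retry"  # rate limit or transient server error, ideally with backoff
    return "stop"       # other 4xx, e.g. 400 for an unsupported parameter


for code in (429, 401, 503, None, 400):
    print(code, error_action(code))
```

Separating the decision from the retry loop keeps the policy easy to test and to adjust, for example if you decide a 400 caused by an oversized prompt should trigger a shorter retry instead.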

Rate Limiting Considerations

O1 mini has higher rate limits than o1 preview, but you should still:

Implement exponential backoff for retries

Monitor your usage to avoid unexpected costs

Consider batching requests when possible
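The retry advice can be sketched as a small wrapper with capped exponential backoff and full jitter. This is a generic illustration, not part of any SDK: `with_backoff` and `is_retryable` are hypothetical names, and in real code `call` would wrap the API request while `is_retryable` would check for 429 or 5xx errors. The official `openai` client also retries some failures on its own (configurable with `max_retries`), so a wrapper like this mainly matters for longer outages or custom policies.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(
    call: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying retryable errors with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Re-raise errors that retrying cannot fix, or when out of attempts.
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            # Full jitter: sleep a random time in [0, min(max_delay, base * 2^attempt)].
            sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
    raise AssertionError("unreachable: the loop always returns or raises")


# Demo with a fake call that fails twice (like two 429 responses), then succeeds.
class TransientError(Exception):
    pass


calls = {"count": 0}


def flaky_call() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("simulated rate limit")
    return "ok"


waits: list = []
result = with_backoff(flaky_call, lambda e: isinstance(e, TransientError), sleep=waits.append)
print(result, calls["count"], len(waits))  # ok 3 2
```

Randomizing the full delay, rather than adding a little noise to a fixed schedule, spreads out retries from many clients that were rate-limited at the same moment.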

For production applications, implement proper monitoring and alerting around API usage to manage costs effectively.

O1 Mini for STEM and Technical Tasks

O1 mini particularly shines in STEM and technical applications, where its specialized training delivers impressive results across various domains.

In mathematics, o1 mini demonstrates remarkable capabilities. On the AIME high school math competition, it scores 70.0%, equivalent to roughly 10.5 of 15 questions answered correctly. This performance places it approximately at the level of the top 500 US high school mathematics students. The model excels at algebra, geometry, number theory, and combinatorics problems, and can provide step-by-step reasoning for complex mathematical proofs and solutions.

For software development tasks, o1 mini shows strong performance. It achieves 1650 Elo on Codeforces, placing it at approximately the 86th percentile of programmers on the platform. The model scores 92.4% on the HumanEval coding benchmark, matching o1 preview, and solves 28.7% of high-school-level cybersecurity capture-the-flag (CTF) challenges, below o1 preview's 43.0%.

Key strengths in coding include:

Algorithm design, debugging, and code optimization

Systematic problem analysis and solution development

Clear explanation of programming concepts and approaches

Effective translation of requirements into functional code

In scientific domains, o1 mini demonstrates solid capabilities, outperforming GPT-4o on GPQA (science) with a score of 60.0% vs. 53.6%. It effectively handles problems in physics, chemistry, and other scientific disciplines, works through complex scientific reasoning tasks methodically, and provides clear explanations of scientific concepts.

O1 mini can also support engineering work, including solving complex problems that require systematic analysis, optimizing designs through mathematical modeling, troubleshooting technical issues, and converting engineering requirements into mathematical frameworks.

O1 mini is particularly well-suited for STEM education, providing:

Step-by-step explanations of complex concepts

Practice problems with varying difficulty levels

Personalized tutoring support

Educational content creation for technical subjects

For any application requiring deep technical reasoning without the need for broad world knowledge, o1 mini represents an optimal balance of capability, speed, and cost.

How to Use O1 Mini for Optimal Results

Getting the most from o1 mini requires understanding its strengths and how to effectively prompt it for different types of tasks.

Optimizing Mathematical Problem-Solving

When using o1 mini for mathematics:

State problems clearly and completely

Ask for step-by-step solutions

Specify the level of detail you need

Include any constraints or special conditions

For complex problems, break them down into smaller components

Example prompt: "Solve step-by-step: Find all values of x that satisfy the equation 2sin(x) + sin(2x) = 0 in the interval [0, 2π]."
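For reference, this example has a short closed-form solution, handy for checking the model's answer. Factoring with the double-angle identity gives:

```latex
\begin{aligned}
2\sin x + \sin 2x &= 2\sin x + 2\sin x\cos x = 2\sin x\,(1 + \cos x) = 0,\\
\sin x = 0 &\;\Rightarrow\; x \in \{0,\ \pi,\ 2\pi\},\\
\cos x = -1 &\;\Rightarrow\; x = \pi,\\
\text{so on } [0, 2\pi]:\quad x &\in \{0,\ \pi,\ 2\pi\}.
\end{aligned}
```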

Effective Coding Assistance

For programming tasks:

Specify the programming language clearly

Define the problem requirements precisely

Mention any performance constraints

Ask for explanations of the approach

Request test cases to verify the solution

Example prompt: "Write a Python function to find the longest palindromic substring in a given string. Include your reasoning, the algorithm's time complexity, and test cases."
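A reference answer is useful for checking the model's output on this prompt. The sketch below uses the common expand-around-center approach (O(n²) time, O(1) extra space); it is one standard method, not necessarily the one the model will choose.

```python
def longest_palindromic_substring(s: str) -> str:
    """Expand around each center and keep the longest palindrome found."""
    if not s:
        return ""
    best_start, best_len = 0, 1
    for center in range(len(s)):
        # Odd-length palindromes center on s[center]; even-length ones sit between center and center + 1.
        for left, right in ((center, center), (center, center + 1)):
            while left >= 0 and right < len(s) and s[left] == s[right]:
                left -= 1
                right += 1
            length = right - left - 1  # the loop overshoots by one position on each side
            if length > best_len:
                best_start, best_len = left + 1, length
    return s[best_start:best_start + best_len]


print(longest_palindromic_substring("babad"))  # bab
print(longest_palindromic_substring("cbbd"))   # bb
```

Test cases like these, including an even-length palindrome and an input with ties ("aba" and "bab" both appear in "babad"), are the kind worth requesting in the prompt itself.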

Scientific Problem-Solving

When tackling scientific questions:

Provide all relevant information and constants

Specify the precision needed for numerical answers

Ask for the underlying principles to be explained

Request verification of results when applicable

For complex problems, suggest a methodical approach

Example prompt: "A 2kg object is thrown vertically upward with an initial velocity of 20 m/s. Calculate how high it will go, the time to reach maximum height, and the time to return to the starting point. Use g = 9.8 m/s² and show your work."
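A reference solution lets you check the model's numbers. Assuming no air resistance, the 2 kg mass drops out of every result, with v₀ = 20 m/s and g = 9.8 m/s²:

```latex
\begin{aligned}
h_{\max} &= \frac{v_0^2}{2g} = \frac{(20\ \text{m/s})^2}{2 \times 9.8\ \text{m/s}^2} \approx 20.4\ \text{m},\\
t_{\text{up}} &= \frac{v_0}{g} = \frac{20\ \text{m/s}}{9.8\ \text{m/s}^2} \approx 2.04\ \text{s},\\
t_{\text{total}} &= 2\,t_{\text{up}} \approx 4.08\ \text{s}.
\end{aligned}
```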

Handling Complex Reasoning

For multi-step reasoning tasks:

Break the problem into logical stages

Ask the model to think through each step explicitly

Request verification at critical decision points

For complex logic, use concrete examples

Follow up on any unclear reasoning

By leveraging these approaches, you can maximize o1 mini's effectiveness for your specific technical and educational needs.

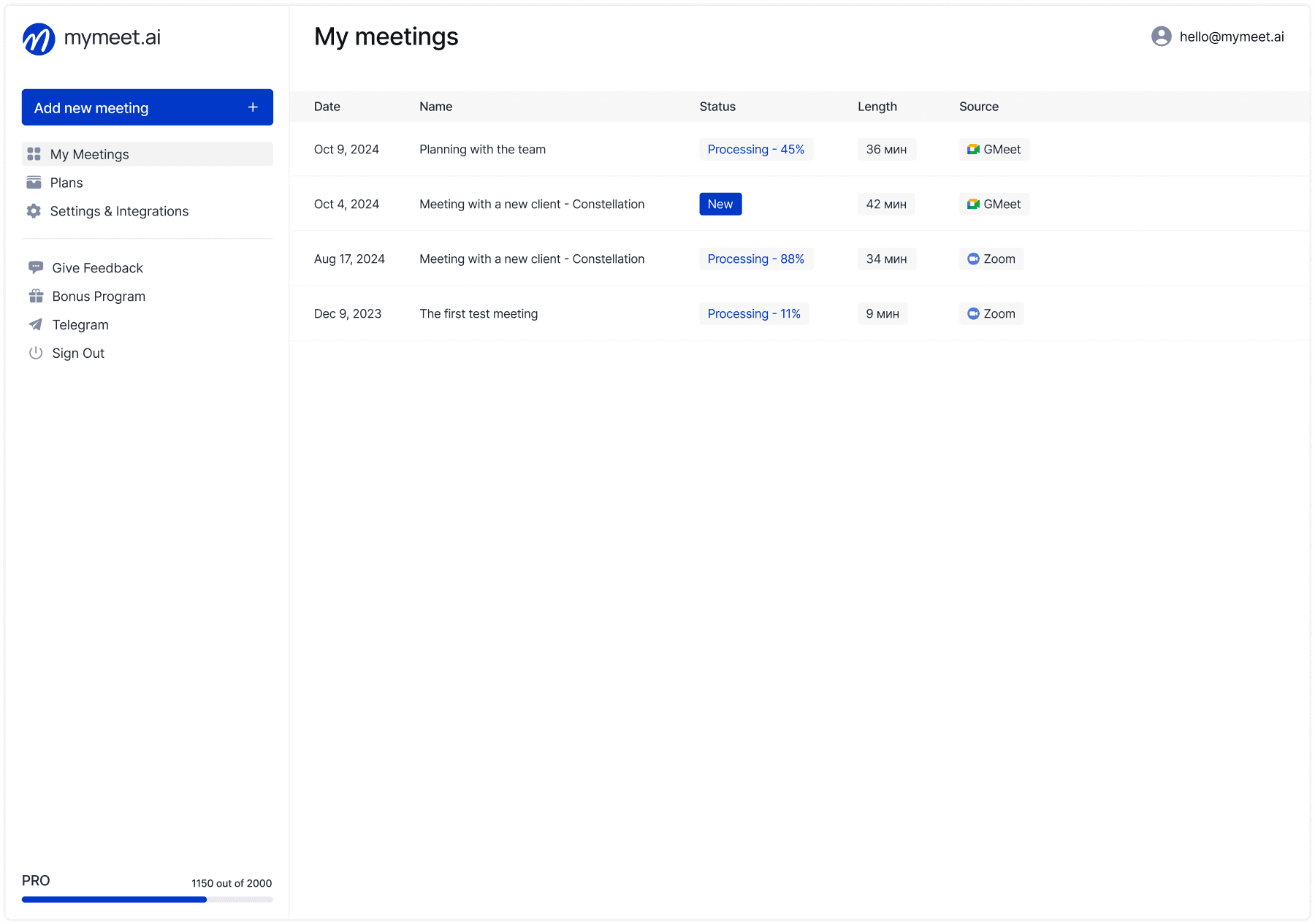

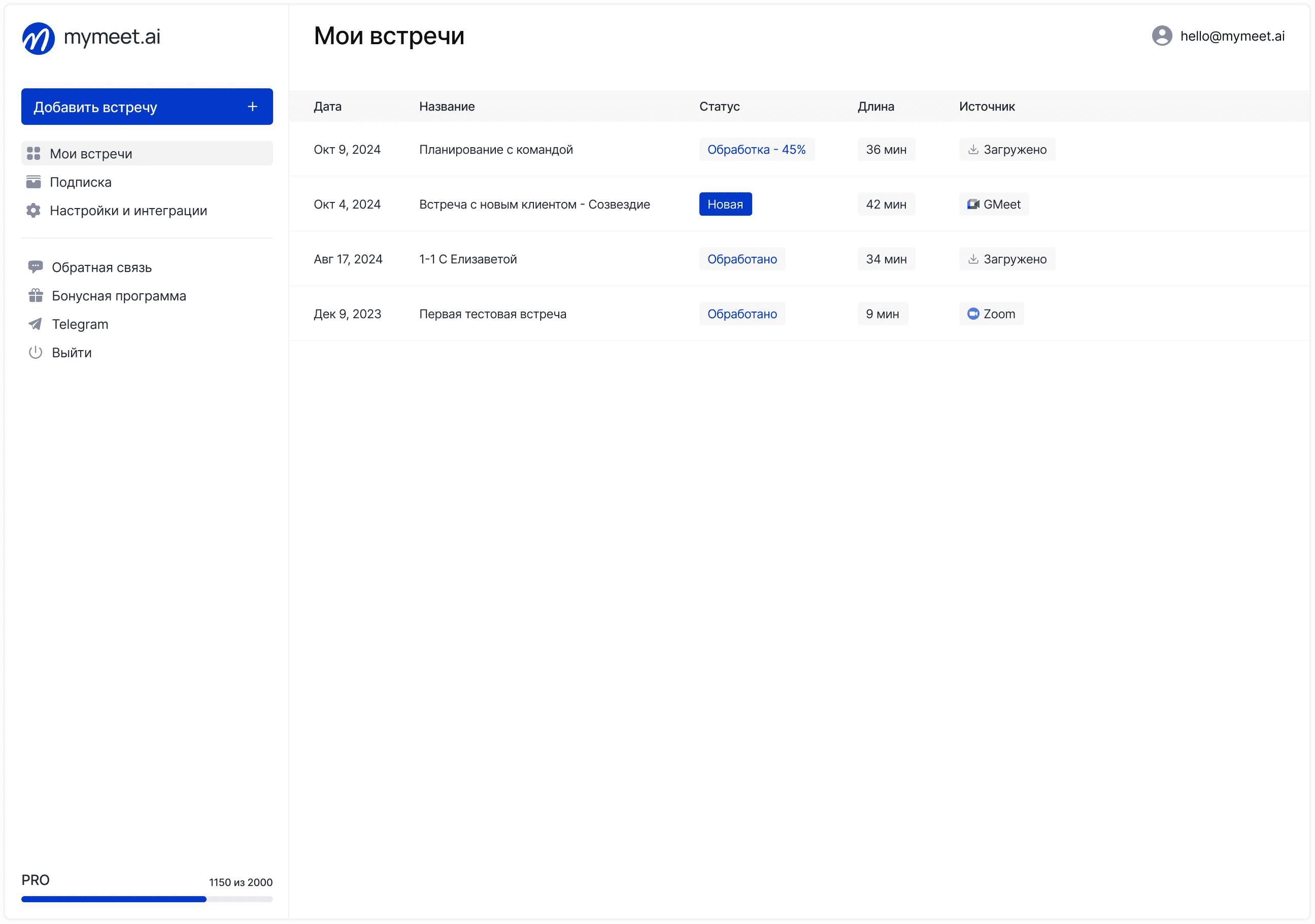

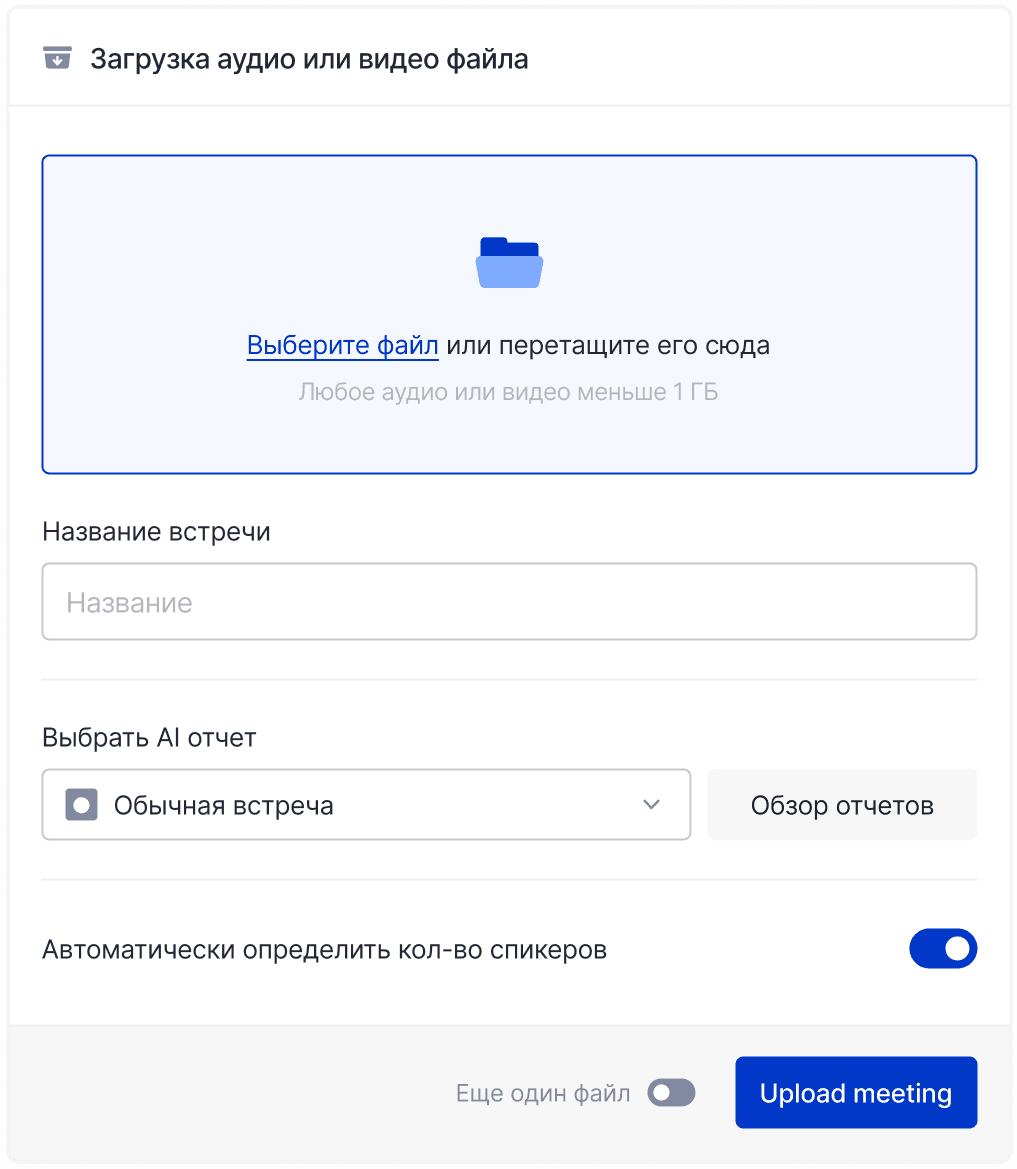

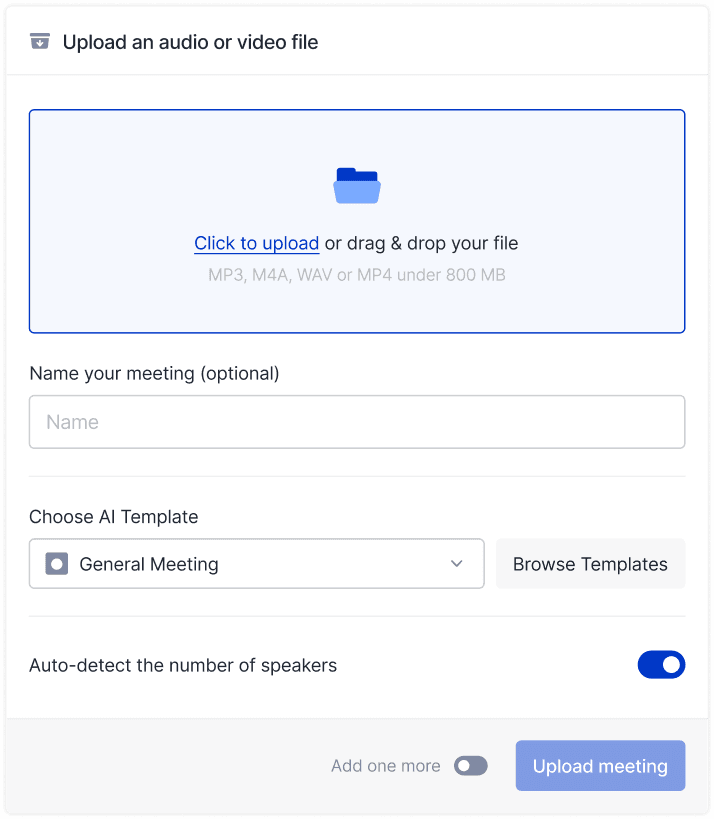

Enhancing O1 Mini with mymeet.ai for Technical Meeting Intelligence

While O1 Mini excels at STEM reasoning and problem-solving, it lacks the ability to participate in or analyze actual meetings. This is where integrating mymeet.ai creates a powerful workflow for technical teams and educational settings.

What is mymeet.ai?

mymeet.ai is a specialized AI meeting assistant that automatically joins, records, and transcribes online meetings across platforms like Zoom, Google Meet, and Yandex Telemost (Телемост). Unlike conversational AI models that require manual input, mymeet.ai actively participates in meetings, creating detailed transcripts, extracting action items, and generating summaries without human intervention.

How mymeet.ai Complements O1 Mini

| Feature | mymeet.ai | O1 Mini | Combined Workflow |
|---|---|---|---|
| Meeting Attendance | Automatically joins meetings | Cannot attend meetings | mymeet.ai captures the meeting; O1 Mini analyzes its content |
| Technical Transcription | Captures STEM discussions verbatim | N/A | Provides accurate technical content for analysis |
| Action Item Extraction | Identifies tasks from conversations | N/A | Extracts technical tasks ready for O1 Mini processing |
| Mathematical Content | Records equations and formulas | Solves complex math problems | Supports an end-to-end math problem workflow |
| Code Discussion | Captures programming conversations | Excels at coding tasks | Enables a complete code development pipeline |
| Technical Knowledge | N/A | Strong STEM reasoning applied to meeting content | Enhances overall analysis with robust technical reasoning capabilities |

Practical Applications of the mymeet.ai + O1 Mini Integration

This integration creates particularly powerful workflows for technical teams:

STEM Education: Record technical lectures and tutoring sessions with mymeet.ai, then use O1 Mini to analyze student questions, provide step-by-step solutions to problems discussed, and create additional practice materials based on lecture content.

Engineering Teams: Capture engineering meetings where technical problems are discussed, then leverage O1 Mini's extraordinary mathematical capabilities to solve the equations and optimization problems that emerged during the discussion.

Software Development: Use mymeet.ai to document technical requirements and architecture discussions, then employ O1 Mini to transform these discussions into functional code, test cases, and documentation.

Scientific Research: Record research meetings and seminars with mymeet.ai, then utilize O1 Mini to work through the complex scientific reasoning tasks identified during discussions.

Implementation Strategy

To maximize the benefits of combining these tools:

Configure mymeet.ai to automatically join scheduled technical meetings

Use its speaker identification features to track contributions from different team members

Export the transcript to a format suitable for processing with O1 Mini

Submit specific technical problems identified in the meeting to O1 Mini

Use O1 Mini's step-by-step reasoning to develop detailed solutions
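The export-and-submit steps might look like this in Python. The sketch assumes the transcript has been exported as plain text with one `Speaker: text` line per turn; that format and the helper names `parse_transcript` and `build_prompt` are hypothetical, not mymeet.ai's documented export or API. The resulting string would be sent to O1 Mini as a single user message, with the `<transcript>` tags simply separating the meeting text from the question.

```python
def parse_transcript(raw: str) -> list[tuple[str, str]]:
    """Split 'Speaker: text' lines into (speaker, text) pairs; skip lines without that shape."""
    turns = []
    for line in raw.splitlines():
        speaker, sep, text = line.partition(":")
        if sep and speaker.strip() and text.strip():
            turns.append((speaker.strip(), text.strip()))
    return turns


def build_prompt(turns: list[tuple[str, str]], problem: str) -> str:
    """Wrap the meeting context in delimiters and state the problem to solve."""
    context = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
    return (
        "Meeting transcript excerpt:\n<transcript>\n" + context + "\n</transcript>\n\n"
        "Problem raised in the meeting: " + problem + "\n"
        "Solve it and state any assumptions you make about missing details."
    )


raw = "Anna: The beam is 4 m long.\n[recording paused]\nBoris: Load is 2 kN at midspan."
print(build_prompt(parse_transcript(raw), "Find the maximum bending moment."))
```

Keeping speaker names in the excerpt preserves who stated which constraint, which helps when the model's assumptions need to be checked with the team afterwards.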

This combination addresses a significant limitation in current AI workflows: while O1 Mini provides exceptional reasoning capabilities, it requires someone to input the problems. By using mymeet.ai to automatically capture technical discussions, organizations can identify problems and context organically from actual meetings, then leverage O1 Mini's specialized reasoning abilities for solutions.

For technical teams that spend significant time in meetings discussing STEM topics, this integration creates a seamless pipeline from problem identification to solution development, maximizing the value of both tools.

O1 Mini Limitations: What You Need to Know

While o1 mini excels at STEM reasoning tasks, it has several important limitations you should be aware of when deciding whether it's the right tool for your needs.

Non-STEM Knowledge Constraints

O1 mini's most significant limitation is its reduced knowledge outside STEM domains:

Its factual knowledge on topics like history, culture, and current events is comparable to small models such as GPT-4o mini

It may provide less detailed or accurate information about non-technical subjects

For tasks requiring broad world knowledge, other models like GPT-4o are generally more suitable

Benchmark Performance Gaps

On certain benchmarks, o1 mini shows performance limitations:

Scores lower on MMLU (general knowledge) than both o1 and GPT-4o

Performs worse on GPQA than o1 preview, despite being stronger in mathematics

Shows reduced capabilities on tasks requiring cultural or historical context

Technical Boundaries

Even within its areas of strength, o1 mini has limitations:

May struggle with extremely complex or novel mathematical problems

Has a training-data cutoff of October 2023, limiting awareness of recent developments

Cannot access the internet to gather additional information

Lacks multimodal capabilities (cannot process images or audio)

Future Improvements

OpenAI has acknowledged these limitations and indicated plans to address them:

Future versions may incorporate broader world knowledge

Potential extensions to other modalities beyond text

Ongoing improvements to reasoning capabilities

When deciding whether to use o1 mini, consider whether its specialized STEM reasoning strengths align with your primary needs or if broader capabilities would be more valuable.

Is O1 Mini Free? Understanding Access Options

O1 mini is available through multiple access options, each with different pricing structures and limitations.

ChatGPT Subscription Access

O1 mini is not available on the free tier of ChatGPT. It requires one of the following paid subscriptions:

ChatGPT Plus ($20/month): Provides access to o1 mini with higher rate limits than o1 preview

ChatGPT Team: Business-oriented plan that includes o1 mini access

ChatGPT Enterprise: Premium plan with maximum rate limits and advanced features

ChatGPT Edu: Educational subscription with o1 mini access

API Pricing

Through the OpenAI API, o1 mini is available at a cost that is 80% lower than o1 preview, making it one of the most cost-effective reasoning models in OpenAI's lineup. API access requires an OpenAI developer account with payment information. Usage is billed based on the number of tokens processed, including hidden reasoning tokens, which are billed as output, and volume discounts may be available for high-usage applications.

Free Trial Options

While o1 mini itself isn't free, you may be able to test it through free API credits sometimes offered to new OpenAI API users, free trials occasionally offered for ChatGPT Plus, or educational access programs for qualified institutions.

Cost Comparison

When considering whether o1 mini is worth the cost, for regular STEM reasoning tasks, the ChatGPT Plus subscription often offers the best value. For applications requiring regular API access, o1 mini's 80% lower cost compared to o1 preview represents substantial savings. If you need only occasional access, paying for a single month of ChatGPT Plus might be the most economical option.

For users primarily interested in STEM reasoning capabilities, o1 mini offers one of the best performance-to-cost ratios among OpenAI's models, though it does require some form of paid access.

Is O1 Mini Better Than 4o? Choosing the Right Model

Determining whether o1 mini is "better" than GPT-4o depends entirely on your specific use case and priorities. Each model has distinct strengths that make it more suitable for different applications.

Comparison Between O1 Mini and GPT-4o

| Category | O1 Mini | GPT-4o |
|---|---|---|
| Technical & STEM | Consistently outperforms GPT-4o on mathematical benchmarks (90.0% vs 60.3% on MATH-500); provides more methodical solutions for complex coding challenges; offers thorough treatment of scientific reasoning tasks | Less specialized in STEM reasoning but still capable |
| General Knowledge | Solid but not specialized (85.2% on MMLU) | Maintains an advantage in general knowledge (88.7% on MMLU); better for tasks requiring cultural context or nuance |
| Creative Tasks | Less optimized for creative content generation | Better results for creative writing, personal writing, and editing |
| Multimodal Capabilities | Currently text-only with no multimodal capabilities | Can process images and audio in addition to text; necessary for applications requiring visual understanding |
| Speed & Cost | 3–5x faster and 80% cheaper than o1 preview on reasoning tasks; hidden reasoning tokens are billed as output, so cost per request grows with problem difficulty | Answers without a hidden reasoning phase, so latency is lower on everyday prompts and no reasoning tokens are billed |

Decision Framework

To choose the right model:

For primarily STEM and technical applications, o1 mini likely offers better performance

For general-purpose applications requiring broad knowledge, GPT-4o is usually preferable

Consider using both models in tandem, leveraging each for its strengths
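The framework above can be expressed as a simple routing rule. This is a hypothetical heuristic for illustration: the `Task` fields and `choose_model` are not an OpenAI feature, while `o1-mini` and `gpt-4o` are the API model names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a request, filled in by your application."""
    needs_images_or_audio: bool = False
    stem_heavy: bool = False
    needs_broad_knowledge: bool = False


def choose_model(task: Task) -> str:
    """Route each request to the model whose strengths match it."""
    if task.needs_images_or_audio:
        return "gpt-4o"   # o1 mini is text-only
    if task.stem_heavy and not task.needs_broad_knowledge:
        return "o1-mini"  # math, coding, and science reasoning
    return "gpt-4o"       # general knowledge, creative writing, everything else


print(choose_model(Task(stem_heavy=True)))                              # o1-mini
print(choose_model(Task(stem_heavy=True, needs_broad_knowledge=True)))  # gpt-4o
```

In practice the flags might come from the product surface (a "math help" feature versus a general chat box) rather than from inspecting each prompt.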

The "better" model is the one that most effectively meets your specific requirements rather than being universally superior.

The Future of O1 Mini: What's Coming Next

OpenAI has indicated several development directions for o1 mini and its reasoning model family. Understanding these potential future enhancements helps in planning for how the model might evolve.

Broader Knowledge Integration

OpenAI has explicitly acknowledged o1 mini's limitations in non-STEM knowledge and indicated plans to address this:

Future versions may incorporate enhanced factual knowledge while maintaining reasoning strengths

This would reduce the current tradeoff between technical reasoning and broader knowledge

The goal appears to be maintaining o1 mini's cost-efficiency while expanding its capabilities

Multimodal Extensions

While currently text-only, o1 mini may expand to include other modalities:

OpenAI mentioned "experimenting with extending the model to other modalities"

This could potentially include image understanding capabilities

Voice interaction might be added to enhance accessibility and user experience

Specialization Beyond STEM

OpenAI noted plans to "experiment with extending the model to other specialties outside of STEM":

This suggests future versions might include specialized reasoning for domains like law, medicine, or business

Such expansions would broaden o1 mini's utility across more professional fields

The approach would likely maintain the efficiency-focused design philosophy

Integration With Other OpenAI Products

As the OpenAI ecosystem continues to evolve:

Better integration between o1 mini and other specialized models might emerge

Automated model routing could select the optimal model for each task

Enhanced developer tools may make it easier to build applications leveraging o1 mini's strengths

Industry Implications

O1 mini's development approach signals broader trends in AI:

Movement toward more specialized, efficient models rather than one-size-fits-all solutions

Focus on practical deployment considerations like speed and cost-efficiency

Growing emphasis on reasoning capabilities rather than just pattern recognition

These developments suggest that o1 mini represents not just a single product but a shift in OpenAI's approach to creating more practical, specialized AI tools optimized for real-world deployment.

Conclusion

O1 mini represents a significant evolution in OpenAI's approach to AI model development—focusing on specialized, efficient models rather than just scaling up general-purpose systems.

For users with STEM-focused needs, o1 mini provides exceptional reasoning capabilities at significantly lower cost than previous options. Its performance on mathematical and coding benchmarks shows specialized training can deliver superior results without requiring massive computational resources.

In OpenAI's own comparison, the model reached answers 3–5x faster than o1 preview, making it more practical for interactive applications and high-throughput systems while improving user experience and reducing infrastructure requirements.

Most impressively, o1 mini achieves these gains while being 80% cheaper than o1 preview, making advanced reasoning capabilities accessible to a much broader range of applications and organizations.

While o1 mini's limitations in non-STEM knowledge should be considered, its exceptional performance within its specialization makes it optimal for many technical applications. O1 mini demonstrates that the future of AI likely lies not just in ever-larger general models, but in purpose-built systems optimized for specific tasks.

O1 Mini FAQ: Answering Your Most Common Questions

What is o1 mini?

O1 mini is a cost-efficient reasoning model from OpenAI optimized specifically for STEM tasks like mathematics and coding. It delivers performance comparable to larger reasoning models while being significantly faster and cheaper.

How does o1 mini differ from o1 and o1 preview?

O1 mini is a smaller, more efficient model than the full o1. It maintains comparable performance on STEM reasoning tasks while being 80% cheaper and 3-5x faster than o1 preview. However, it has more limited knowledge outside technical domains.

What are the key differences between o3-mini and o1-mini?

Both are small reasoning models aimed at STEM tasks such as math and coding. O3-mini is the newer model (January 2025): OpenAI reports that it performs comparably to o1-mini at low reasoning effort, matches o1 at medium effort, and responds faster on average. It also supports API features such as function calling and structured outputs that o1 mini lacks.

Is o1 mini free to use?

No, o1 mini is not available on the free tier of ChatGPT. It requires a ChatGPT Plus, Team, Enterprise, or Edu subscription, or access through the OpenAI API with associated costs.

What is ChatGPT o1 mini used for?

ChatGPT o1 mini is primarily used for solving complex mathematical problems, coding challenges, scientific reasoning, technical education, and other STEM-related tasks that benefit from systematic step-by-step reasoning.

How much does o1 mini cost on the API?

O1 mini is priced at 80% less than o1 preview on the OpenAI API. The exact per-token pricing is available in OpenAI's current documentation and may change over time.

What are o1 mini's limitations?

O1 mini's main limitations include reduced knowledge outside STEM domains (comparable to small models such as GPT-4o mini), lack of multimodal capabilities, and an October 2023 knowledge cutoff that limits awareness of recent developments.

Is o1 mini better than GPT-4o?

For STEM reasoning, mathematics, and coding tasks, o1 mini typically outperforms GPT-4o. However, GPT-4o is superior for general knowledge, creative tasks, and multimodal capabilities. The "better" model depends on your specific needs.

Can I use o1 mini for coding?

Yes, o1 mini excels at coding tasks, achieving 92.4% on the HumanEval benchmark and a 1650 Elo rating on Codeforces (approximately 86th percentile of programmers). It's particularly effective for algorithm design, debugging, and optimization.

What is the token limit for o1 mini?

O1 mini has a 128,000-token context window and can generate up to 65,536 output tokens per request, a limit that includes its hidden reasoning tokens. Limits can change, so check OpenAI's documentation for current values.
